Getting Started with Channels and Events

Target audience: Developers & Modelers

Introduction

In this how-to we'll have a look at getting started with channels and events. This article is intended for people who are taking their first steps with Flowable channels and event models. We'll implement a simple BPMN process model that sends an event through an outbound channel and a CMMN case model that receives an event on an inbound channel. Such channels are often implemented with messaging solutions.

In this article, we'll look at various implementations:

- Internal channel (Available in Flowable Orchestrate and Work)

- Kafka (Available in Flowable Orchestrate and Work)

- RabbitMQ (Available in Flowable Orchestrate and Work)

- ActiveMQ (or JMS in general) (Available in Flowable Orchestrate and Work)

- AWS SQS (Available in Flowable Work Only)

- AWS SNS (Available in Flowable Work Only)

There is also an Email channel implementation (available in Flowable Work only), which is out of scope for this how-to. Please check out the receive email how-to.

The internal, Kafka, RabbitMQ and JMS implementations are available in Flowable Open Source too. However, the Flowable Design modeling experience for event data mapping and channel model editing is not available in Flowable Open Source.

Once you've familiarized yourself with these basics, you can continue with the advanced guide, which describes the concepts and configuration options of channel and event models in detail.

Example Models

Flowable ships with constructs for BPMN and CMMN models for sending and receiving events (see the full list here). For this article, it suffices to know that process and case models can send or receive data, called events, over a channel.

Such events have a certain data structure, which is captured in an event model. Channels are implemented using a channel model.

As will become clear in the subsequent sections, Flowable makes an important distinction between the data, represented by an event model and the way the data is transported from and to the outside world - the channel model.

That way, BPMN process or CMMN case models never have implementation details in them. They work only with the event, without having to worry about how events are actually sent or received, how data is mapped or transformed, or other such details.
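The separation between event and channel can be pictured with a small conceptual sketch. This is not Flowable's API: all class and function names below are hypothetical and only illustrate that the code building the event does not need to know how it is transported.

```python
import json
from typing import Protocol


class OutboundChannel(Protocol):
    """Anything that knows how to transport a serialized event."""

    def send(self, payload: str) -> None: ...


class InMemoryChannel:
    """Stand-in for an internal channel: keeps messages in a list."""

    def __init__(self) -> None:
        self.messages = []

    def send(self, payload: str) -> None:
        self.messages.append(payload)


class ConsoleChannel:
    """Stand-in for an external broker: just prints the message."""

    def send(self, payload: str) -> None:
        print(f"sending: {payload}")


def send_customer_event(channel: OutboundChannel, event: dict) -> None:
    # The "model" side only knows the event data, not the transport.
    channel.send(json.dumps(event))


event = {"id": "C-1001", "name": "Jane Doe"}
internal = InMemoryChannel()
send_customer_event(internal, event)          # transported in memory
send_customer_event(ConsoleChannel(), event)  # same event, other transport
```

Swapping the channel object changes the transport without touching `send_customer_event`, which mirrors how the channel model can be swapped without changing the process or case model.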

The Event Model

For this basic example, we'll assume a customer event that contains the following data:

- Customer id

- Customer name

- Customer description

- A flag indicating whether the customer is active
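As a purely illustrative sketch, one such customer event could travel over a channel as the JSON payload built below. The field names (`id`, `name`, `description`, `active`) and the sample values are assumptions made for this example; use the field names you define in your own event model.

```python
import json

# Hypothetical field names; they must match the fields of your event model.
customer_event = {
    "id": "C-1001",
    "name": "Jane Doe",
    "description": "Example customer used for testing",
    "active": True,
}

# Serialize to the JSON text that would be placed on a channel.
payload = json.dumps(customer_event)
print(payload)
```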

Create a new app model and give it a name and key. In the App model editor, click Add model and select Create a new model for the app > Other > Event. Give the new event model the name and key customer.

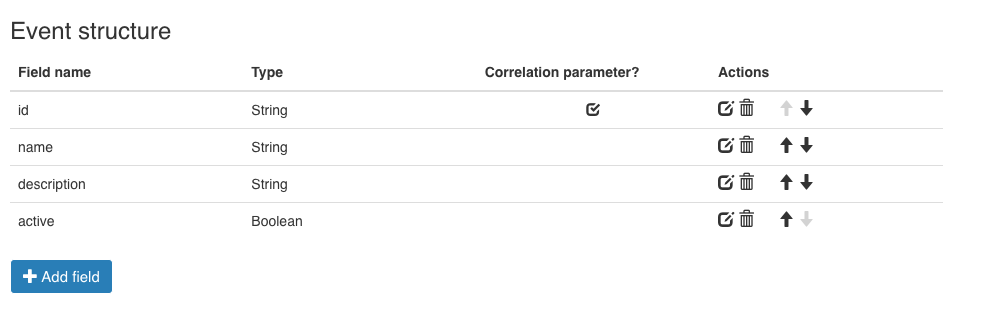

Add the fields from above to the event model. The end result should look like this:

In the screenshot above, the customer id is marked as a correlation parameter. Correlation is an important concept to associate existing process or case instances with incoming events. This article will not use correlation. If you want to learn more about it, read more about correlation here.

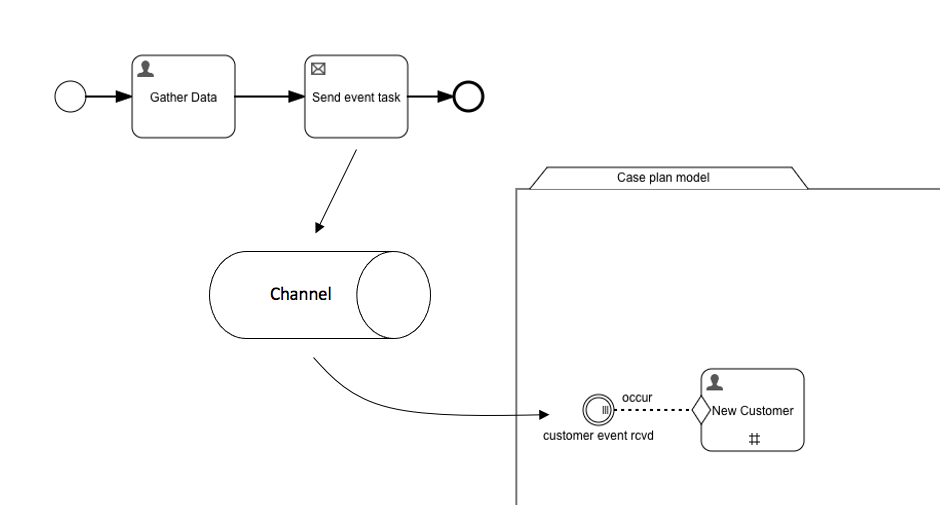

The typical problem with this kind of example (and with switching messaging implementations like we do here) is that each messaging solution has its own way of creating, sending and receiving messages. To avoid this, we'll do everything with Flowable models: we'll create a BPMN process model that sends an event based on the data from a user task form and a CMMN case that receives that event. It doesn't take much imagination to see how this would work in a wider architecture where the event might be sent from another system.

The BPMN Model

Let's create the BPMN process model first.

Go back to the app editor and click Add model again. Now add a process model by selecting Create a new model for the app > Process.

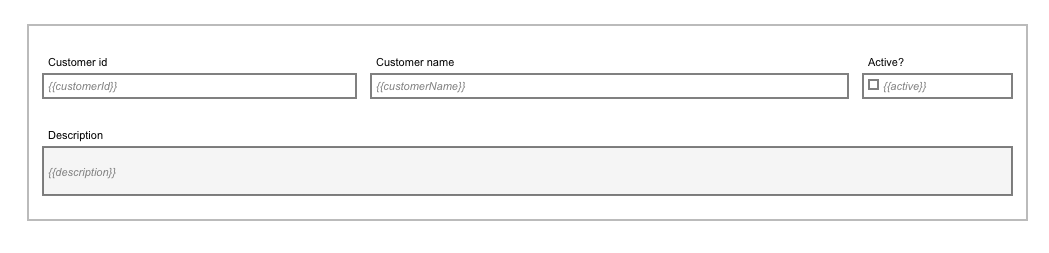

Drag a User task and a Send event task to the canvas and connect them. For the user task, create a new form to capture the information we want to put in the event:

- A textfield with label Customer id bound to the value {{customerId}}.

- A textfield with label Customer name bound to the value {{customerName}}.

- A checkbox with label Active bound to the value {{active}}.

- A textarea with label Description bound to the value {{description}}.

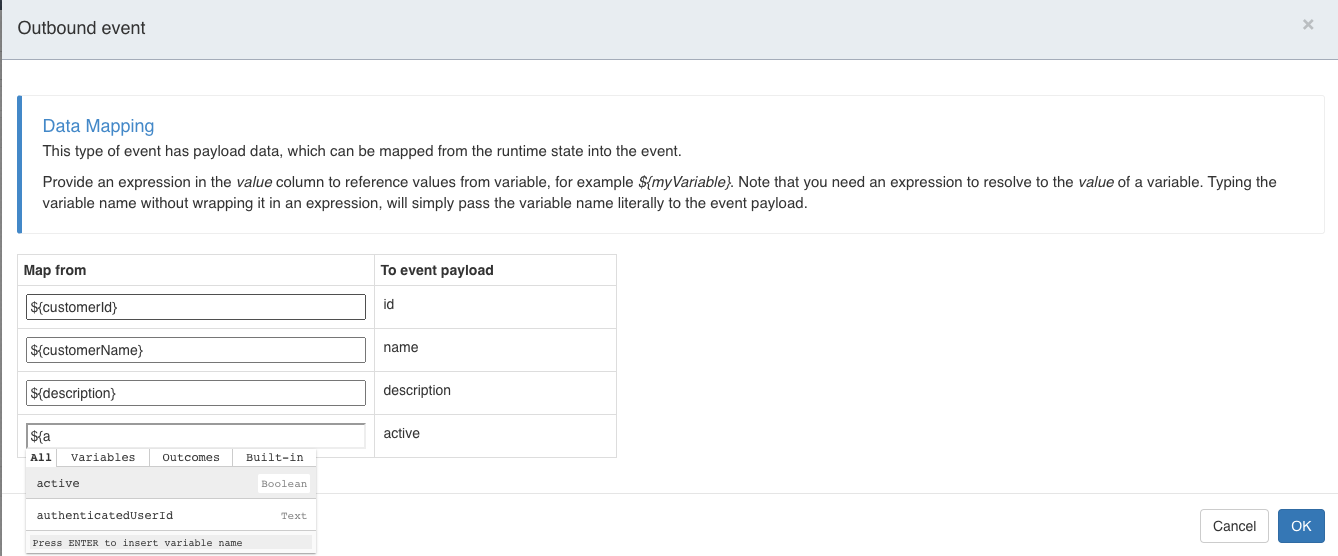

We now have a way to capture data. The next step is to map this data to the event we want to send out. Select the Send event task. Click the Outbound event property, click Reference next and select the Customer event. This links the task to the event model we created above.

A new property No outbound event configuration has now appeared. Clicking it will open up a configuration window. In this window, we need to map the process variables into the event data structure.

Map the form fields to the event. You'll notice that when you type ${ (i.e. the start of a back-end expression), the variable assistant makes this easy:

That's it for the process model. We'll fill in the channel properties in the subsequent sections.
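Conceptually, the outbound mapping evaluates one expression per event field against the process variables. The sketch below imitates that idea for plain `${variableName}` expressions only. It is not Flowable's expression engine, and the event field names on the left-hand side of the mapping are assumptions for this example.

```python
import re

# Matches simple ${variableName} expressions only (an assumption for this sketch).
EXPRESSION = re.compile(r"^\$\{(\w+)\}$")


def resolve(expression: str, variables: dict):
    """Return the variable value for '${name}', or the literal text otherwise."""
    match = EXPRESSION.match(expression)
    if match is None:
        return expression
    return variables[match.group(1)]


def build_event(mapping: dict, variables: dict) -> dict:
    """Evaluate every mapped expression to produce the event payload."""
    return {field: resolve(expr, variables) for field, expr in mapping.items()}


# Process variables as captured by the user task form.
process_variables = {
    "customerId": "C-1001",
    "customerName": "Jane Doe",
    "active": True,
    "description": "Example customer",
}

# Hypothetical event field names on the left, expressions on the right.
outbound_mapping = {
    "id": "${customerId}",
    "name": "${customerName}",
    "active": "${active}",
    "description": "${description}",
}

event = build_event(outbound_mapping, process_variables)
print(event)
```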

The CMMN Model

Let's now create the CMMN case model.

Go back to the app editor and click Add model again. Now add a CMMN case model by selecting Create a new model for the app > Case.

Drag an Event listener and a Human task to the canvas and connect them, making sure the task gets an entry sentry. Check the Repetition on the event listener and the human task.

In this scenario, we will start one case instance and receive multiple events (which is why the Repetition flag is checked). Each new event will create a new task in the same case instance.

Alternatively, the event could be used to create a new case instance by selecting the plan model and configuring the inbound event there. Instead of having one case instance receiving all events, there would be one instance for each event that is received.

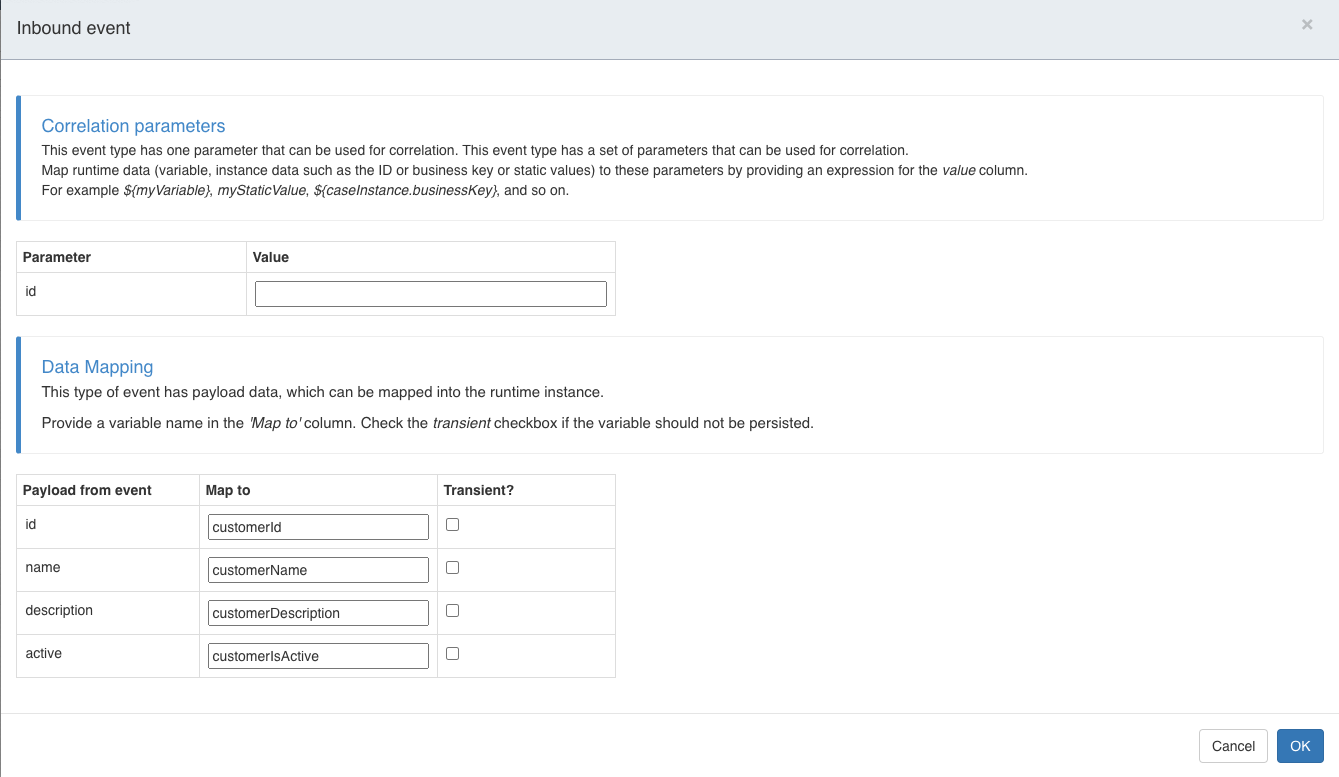

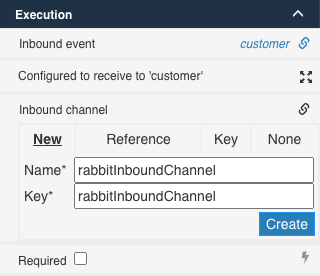

Click the Inbound event on the event listener, click Reference next and select the Customer event. The same event model is shared between the BPMN and CMMN model.

A new property No inbound event configuration has now appeared. Clicking it will open up a configuration window. In this window, we need to map the incoming event data into case variables:

The correlation is not used here and can be left empty. Read more about correlation here.

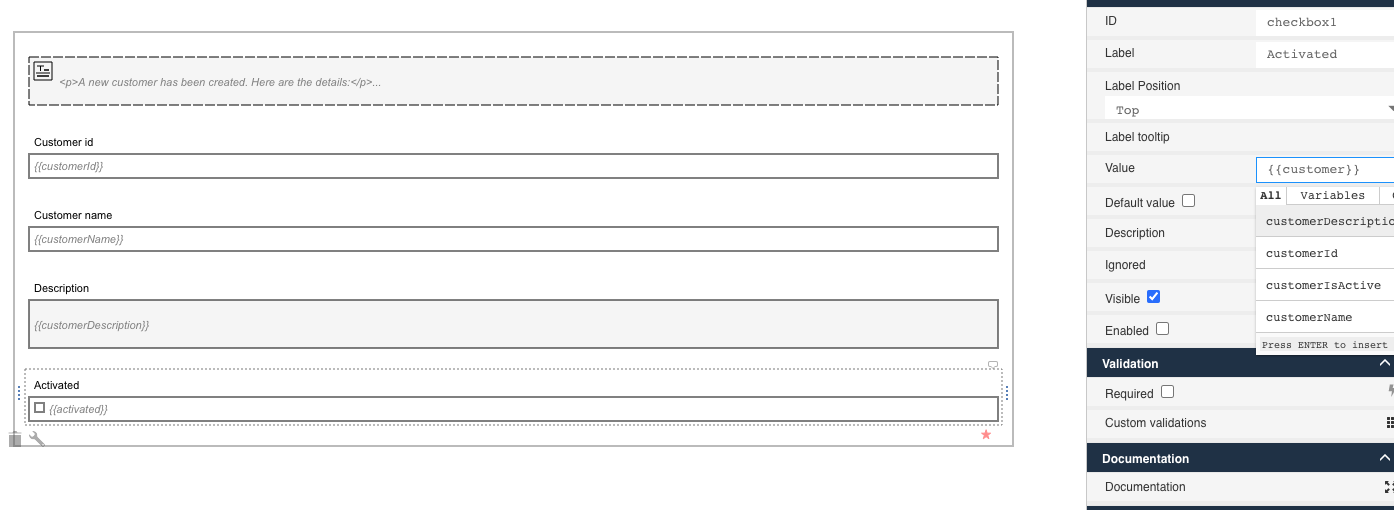

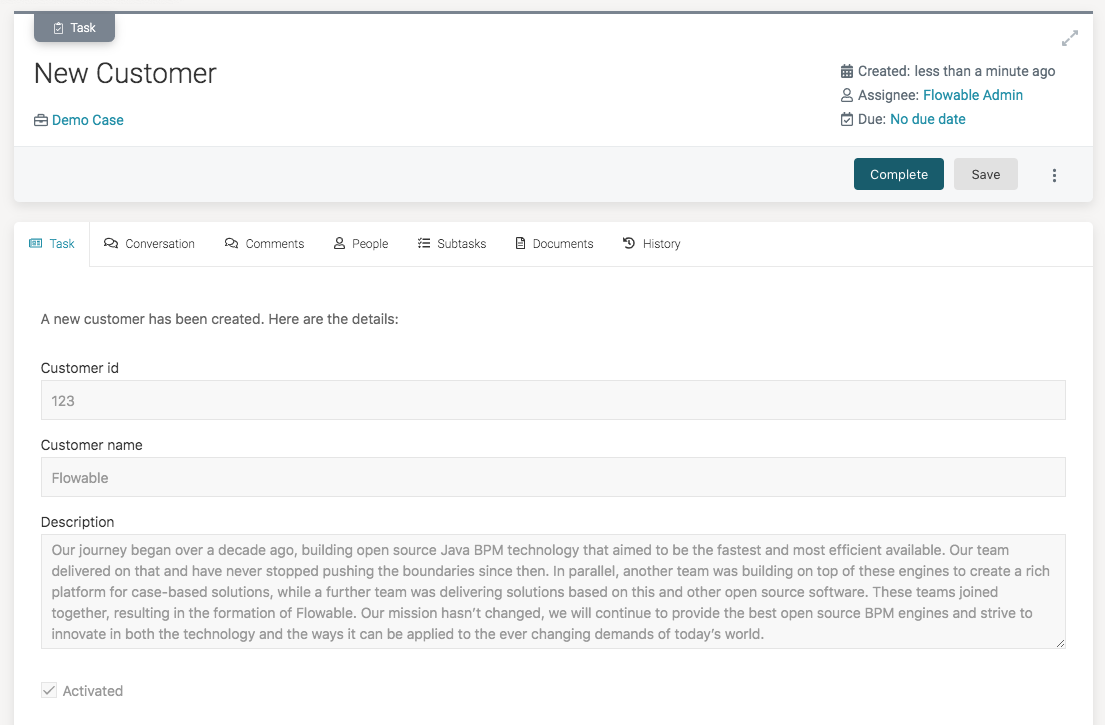

For the task, create a new form to capture the information we want to put in the event, using the same variable names as we've used in the event mapping. As shown in the screenshot below, the variable assistant will suggest the correct variables when binding the value:

- A textfield with label Customer id bound to the value {{customerId}}.

- A textfield with label Customer name bound to the value {{customerName}}.

- A checkbox with label Active bound to the value {{activated}}.

- A textarea with label Description bound to the value {{customerDescription}}.

All the value bindings correspond with what we've set in the event mapping above.
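The inbound mapping can be thought of as a rename from event fields to case variables. In the minimal sketch below, the case variable names are the ones used in the form bindings above, while the event field names are assumptions for illustration.

```python
# Hypothetical event field name -> case variable name, as configured
# in the inbound event mapping.
inbound_mapping = {
    "id": "customerId",
    "name": "customerName",
    "active": "activated",
    "description": "customerDescription",
}


def to_case_variables(event: dict, mapping: dict) -> dict:
    """Copy each mapped event field into its case variable; skip absent fields."""
    return {
        variable: event[field]
        for field, variable in mapping.items()
        if field in event
    }


incoming_event = {
    "id": "C-1001",
    "name": "Jane Doe",
    "active": True,
    "description": "Example customer",
}
case_variables = to_case_variables(incoming_event, inbound_mapping)
print(case_variables)
```

If a form binding uses a name that does not appear on the right-hand side of this mapping, the form field stays empty, which is why the bindings have to match the mapping exactly.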

That's it for the case model. We'll fill in the channel properties in the subsequent sections.

Up Next

We've now created a process model of which instances capture data in a form, map that data into an event and send the event outwards.

We've created a case model of which instances receive the event, map it into case variables and show it on a form.

The missing piece is how the event is actually transported. In the following sections, we'll look at these alternatives: the internal channel, Kafka, RabbitMQ, ActiveMQ (JMS), AWS SQS and AWS SNS.

Note that you can either pick a specific implementation or you can swap channel models in each section and work through the guide sequentially, showing the flexibility of the channel model abstraction that Flowable uses.

Channel model versioning works differently from that of other models. Read about it here.

A functioning app zip (using the internal channel as described below) that can be imported can be downloaded here.

Internal Channel Implementation

The Internal Channel implementation is the most straightforward of them all and can be used when no third-party messaging solution is required or wanted.

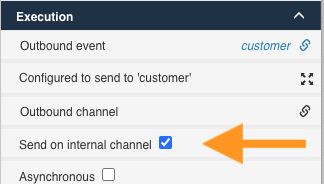

Open the BPMN process model created earlier and select the Send event task. In the property panel check the Send on internal channel flag:

That's all that's needed. There's no need to select anything in the case model: the Flowable engine is smart enough to know the event is coming from the internal channel.

Deploy the app model now.

- Start a case instance, as in this setup we'll capture all events in the same case instance.

- Now start a process instance, select the Gather Data task, fill in the form and click Complete.

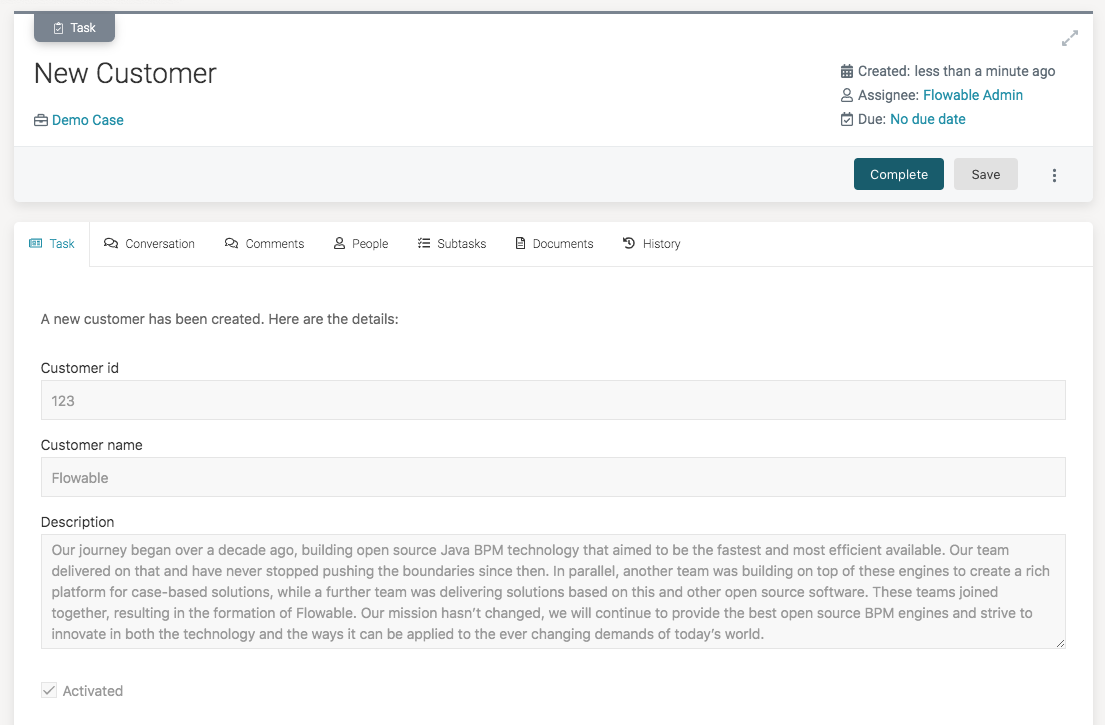

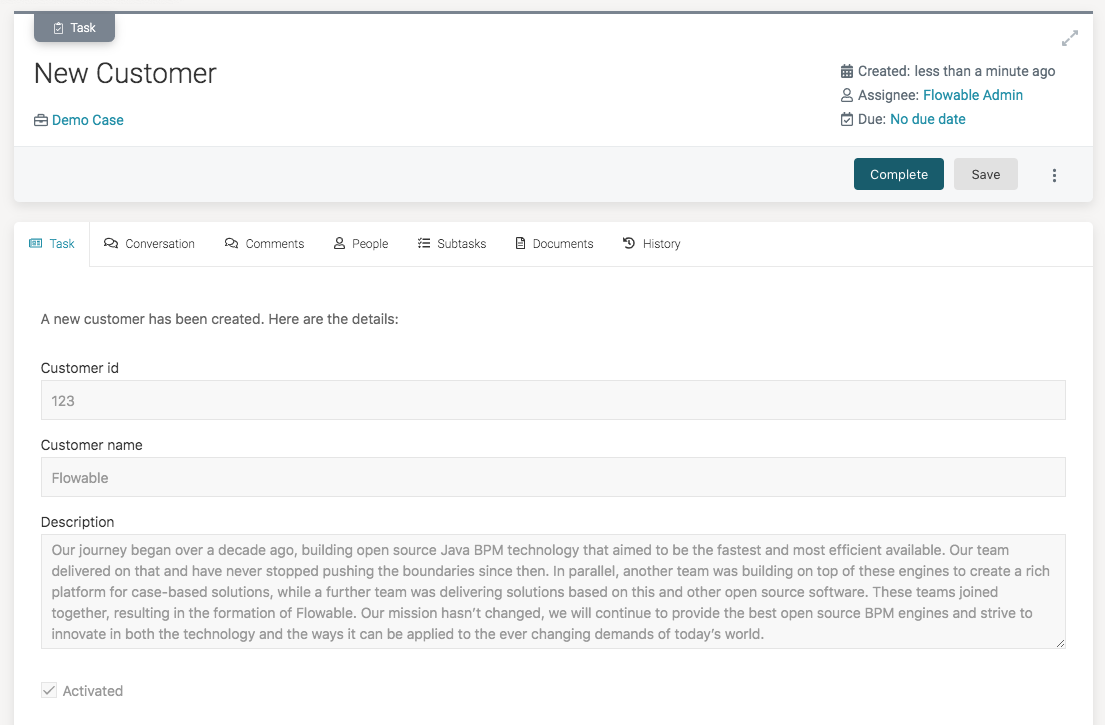

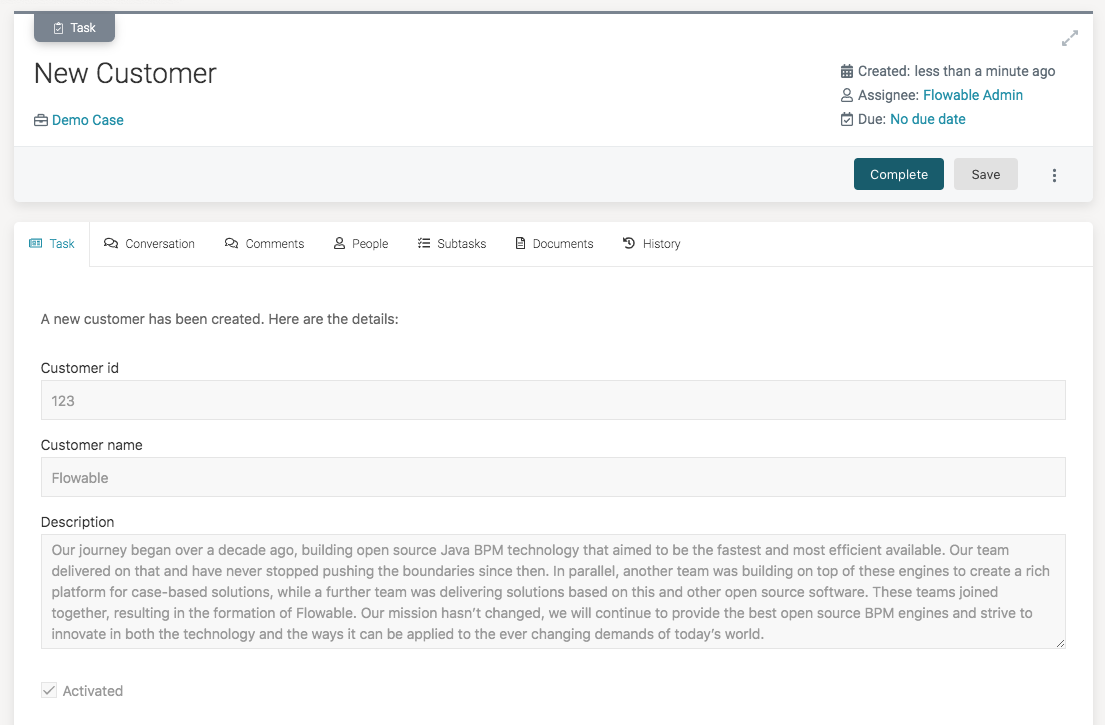

Now go to the case instance. You'll see that a new task has been created. When going into its details, you can see the event data has correctly been passed through the internal channel and is now displayed in the form:

Kafka Implementation

The Kafka integration is disabled by default. To enable it, set the following properties in the application.properties configuration file (changing the server URL if needed):

application.kafka-enabled=true

spring.kafka.bootstrap-servers=localhost:9092

The Kafka dependencies are by default added when using Flowable Work/Engage. No need to do anything in that case.

However, if you're building your own Spring Boot project with Flowable dependencies, you'll need to add the spring-kafka dependency to your pom.xml (which will transitively pull in all necessary dependencies):

<dependency>

<groupId>org.springframework.kafka</groupId>

<artifactId>spring-kafka</artifactId>

</dependency>

To follow along, you'll need a working Kafka instance with a customers topic. If you don't have one yet, the following steps set up a simple one-node Kafka instance (do not use this in production):

1. Download the latest version from the Apache Kafka website.

2. Unzip the downloaded zip file.

3. Open a terminal window and go to the unzipped folder. Execute bin/zookeeper-server-start.sh config/zookeeper.properties to boot up Zookeeper (which Kafka uses for clustering nodes, and which is also needed when running a single node). This step assumes a Kafka release that still ships with Zookeeper.

4. Open another terminal window in the same folder. Execute bin/kafka-server-start.sh config/server.properties to boot up a Kafka instance.

5. Open another terminal window in the same folder. Execute bin/kafka-topics.sh --create --bootstrap-server localhost:9092 --replication-factor 1 --partitions 1 --topic customers to create a simple topic named customers. This is the topic we'll send events to and receive events from.

Full installation instructions can be found on the Apache Kafka website

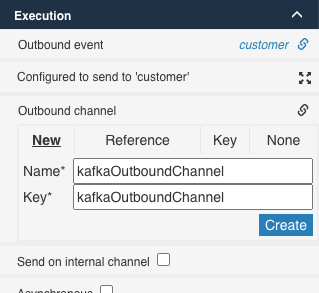

Open the BPMN process model created earlier and select the Send event task. Click the Outbound channel property, select new and type kafkaOutboundChannel as name. Then click the Create button:

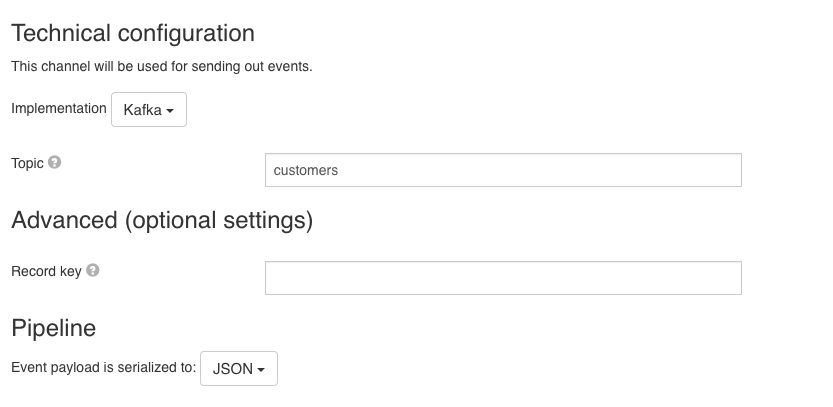

The channel model editor will now open. To configure our outbound channel, we only need to set two things:

- The first dropdown needs to be set to Outbound.

- The Topic needs to be set to customers (if you followed the installation instructions, we've created it in step 5).

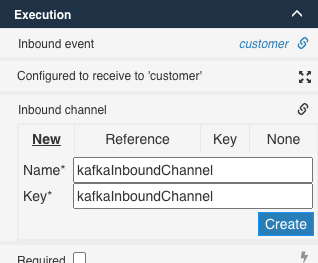

Save both models. Now go to the case model and select the event listener. Click the Inbound channel property, select new and type kafkaInboundChannel as name. Then click the Create button:

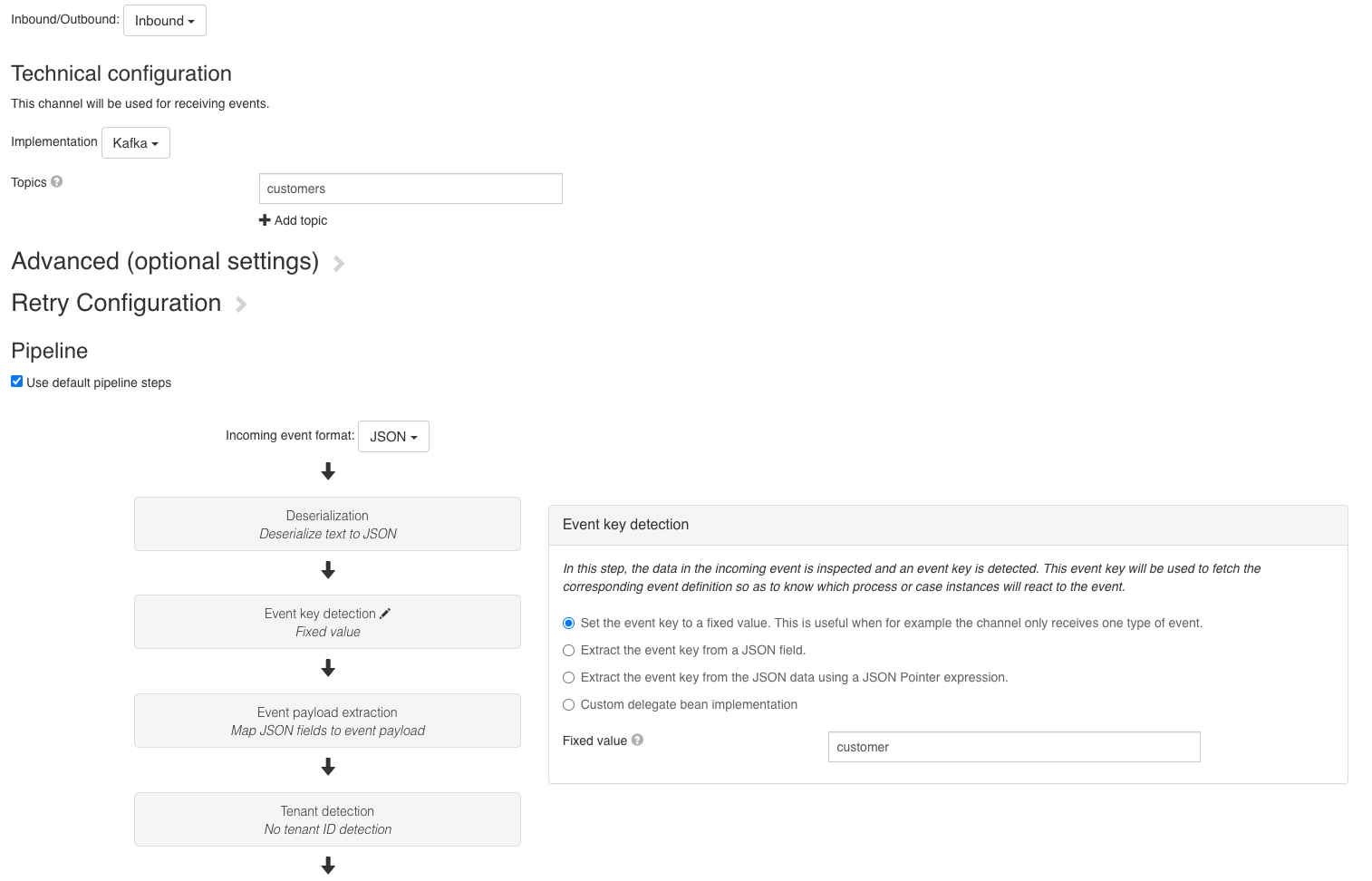

The channel model editor will now open. To configure our inbound channel, the following settings need to be configured:

- The topic needs to be set to customers, as we're listening to the same topic as we're sending to.

- In the Pipeline section, select the Event key detection step. In the Fixed value field, type customer. This configures the incoming channel pipeline to mark each incoming event with the event key customer (which is the key we used before for the event model). We can leave all other pipeline steps as-is, since the defaults are fine: they assume JSON as the payload format and a direct mapping from the JSON properties to the event fields with the same name.

If you want to learn more about all configuration options and the various steps of the channel pipeline, head towards the advanced guide on channel and event models
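To make the role of the pipeline more concrete, here is a rough sketch of the three ideas described above: parse the JSON payload, attach a fixed event key, and copy JSON properties to event fields of the same name. The function names, the `Event` class and the field names are hypothetical; this is not how Flowable implements the pipeline internally.

```python
import json
from dataclasses import dataclass

FIXED_EVENT_KEY = "customer"  # value typed into the Fixed value field


@dataclass
class Event:
    key: str       # identifies which event model the data belongs to
    payload: dict  # field name -> value


def deserialize(raw: bytes) -> dict:
    """Step 1: parse the raw message, assuming a JSON payload."""
    return json.loads(raw.decode("utf-8"))


def detect_event_key(_data: dict) -> str:
    """Step 2: fixed-value key detection ignores the message content."""
    return FIXED_EVENT_KEY


def extract_payload(data: dict, fields: list) -> dict:
    """Step 3: direct mapping of JSON properties to same-named event fields."""
    return {name: data[name] for name in fields if name in data}


def run_pipeline(raw: bytes, fields: list) -> Event:
    data = deserialize(raw)
    return Event(key=detect_event_key(data), payload=extract_payload(data, fields))


raw_message = b'{"id": "C-1001", "name": "Jane Doe", "unknown": 1}'
event = run_pipeline(raw_message, ["id", "name", "description", "active"])
print(event)
```

In this sketch, properties that are not part of the event model (such as `unknown`) are simply dropped, and every message on the channel is treated as a customer event because the key is fixed.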

Deploy the app model now.

- Start a case instance, as in this setup we'll capture all events in the same case instance.

- Now start a process instance, select the Gather Data task, fill in the form and click the Complete button.

Now go to the case instance. You'll see that a new task has been created. When going into its details, you can see that the event data has been sent to the Kafka topic and received correctly:

To check that the message has passed through the Kafka topic, run the following command in the terminal:

bin/kafka-run-class.sh kafka.tools.GetOffsetShell --broker-list localhost:9092 --topic customers

This prints the latest offset of each partition in the form topic:partition:offset, which for a fresh topic equals the number of messages written to it: customers:0:1

When starting multiple process instances, this number will go up:

bin/kafka-run-class.sh kafka.tools.GetOffsetShell --broker-list localhost:9092 --topic customers

customers:0:4
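If you want to check this count from a script, the output can be parsed with a few lines of code. The sketch below assumes the topic:partition:offset line format shown above and that no messages have been removed from the topic yet, so that the latest offset equals the message count.

```python
def parse_offsets(output: str) -> dict:
    """Parse 'topic:partition:offset' lines into {(topic, partition): offset}."""
    offsets = {}
    for line in output.splitlines():
        line = line.strip()
        if not line:
            continue
        # The last two colon-separated parts are partition and offset.
        topic, partition, offset = line.rsplit(":", 2)
        offsets[(topic, int(partition))] = int(offset)
    return offsets


def total_messages(offsets: dict, topic: str) -> int:
    """Sum the latest offsets of all partitions of one topic."""
    return sum(o for (t, _), o in offsets.items() if t == topic)


sample_output = "customers:0:4\n"
offsets = parse_offsets(sample_output)
print(total_messages(offsets, "customers"))
```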

RabbitMQ Implementation

The RabbitMQ integration is disabled by default. To enable it, set the following properties in the application.properties configuration file (changing the server URL and credentials if needed):

application.rabbit-enabled=true

spring.rabbitmq.addresses=localhost:5679

spring.rabbitmq.username=guest

spring.rabbitmq.password=guest

The RabbitMQ dependencies are by default added when using Flowable Work/Engage. No need to do anything in that case.

However, if you're building your own Spring Boot project with Flowable dependencies, you'll need to add the spring-boot-starter-amqp dependency to your pom.xml (AMQP is the protocol used to communicate with RabbitMQ):

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-amqp</artifactId>

</dependency>

To follow along, you'll need a working RabbitMQ instance with a customers queue. If you don't have one yet, the following steps set up a simple one-node RabbitMQ instance (do not use this in production):

1. Boot up a RabbitMQ instance using Docker: docker run -it --rm --name rabbitmq -p 5679:5672 -p 15672:15672 rabbitmq:3-management. Note that we're not using the default port (5672) on the host, as this is also the port for ActiveMQ, which is enabled by default in the Flowable Trial and would otherwise clash.

2. Go to the management web interface with a browser on http://localhost:15672/. Log in with guest/guest.

3. Click the Queues tab. Then click Add a new queue and fill in customers as name. Finally, click Add queue.

Alternative installation details can be found on the RabbitMQ website
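Since the event could just as well come from another system, it can be handy to place a test message on the customers queue from outside Flowable once the inbound channel is deployed. The sketch below builds a request for the publish endpoint of the RabbitMQ management HTTP API, using the port and guest/guest credentials from the setup above. The event field names are assumptions and have to match your event model; the request is only constructed here, and the commented lines show how it could be sent.

```python
import base64
import json
import urllib.request

# Values from the setup above; adjust them for your environment.
MANAGEMENT_URL = "http://localhost:15672"
USERNAME, PASSWORD = "guest", "guest"


def build_publish_request(queue: str, event: dict) -> urllib.request.Request:
    """Build a request that publishes `event` to `queue` via the default exchange."""
    body = {
        "properties": {},
        "routing_key": queue,  # the default exchange routes by queue name
        "payload": json.dumps(event),
        "payload_encoding": "string",
    }
    # %2F is the URL-encoded default virtual host "/".
    url = f"{MANAGEMENT_URL}/api/exchanges/%2F/amq.default/publish"
    token = base64.b64encode(f"{USERNAME}:{PASSWORD}".encode()).decode()
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Basic {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_publish_request("customers", {"id": "C-1001", "name": "Jane Doe"})
print(request.get_method(), request.full_url)
# To actually send it against a running broker:
#     with urllib.request.urlopen(request) as response:
#         print(response.read().decode())
```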

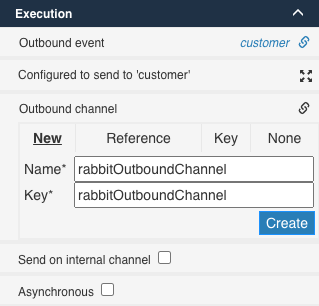

Open the BPMN process model created earlier and select the Send event task. Click the Outbound channel property, select new and type rabbitOutboundChannel as name. Then click the Create button:

The channel model editor will now open. To configure our outbound channel, we only need to set three things:

- The first dropdown needs to be set to Outbound.

- The Implementation field needs to be set to RabbitMQ.

- The Routing key needs to be set to customers (if you followed the installation instructions, we've created the queue in step 3).

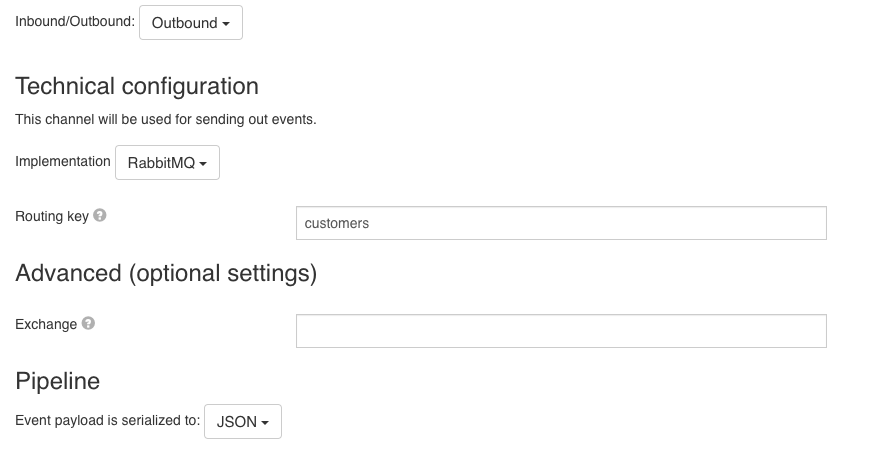

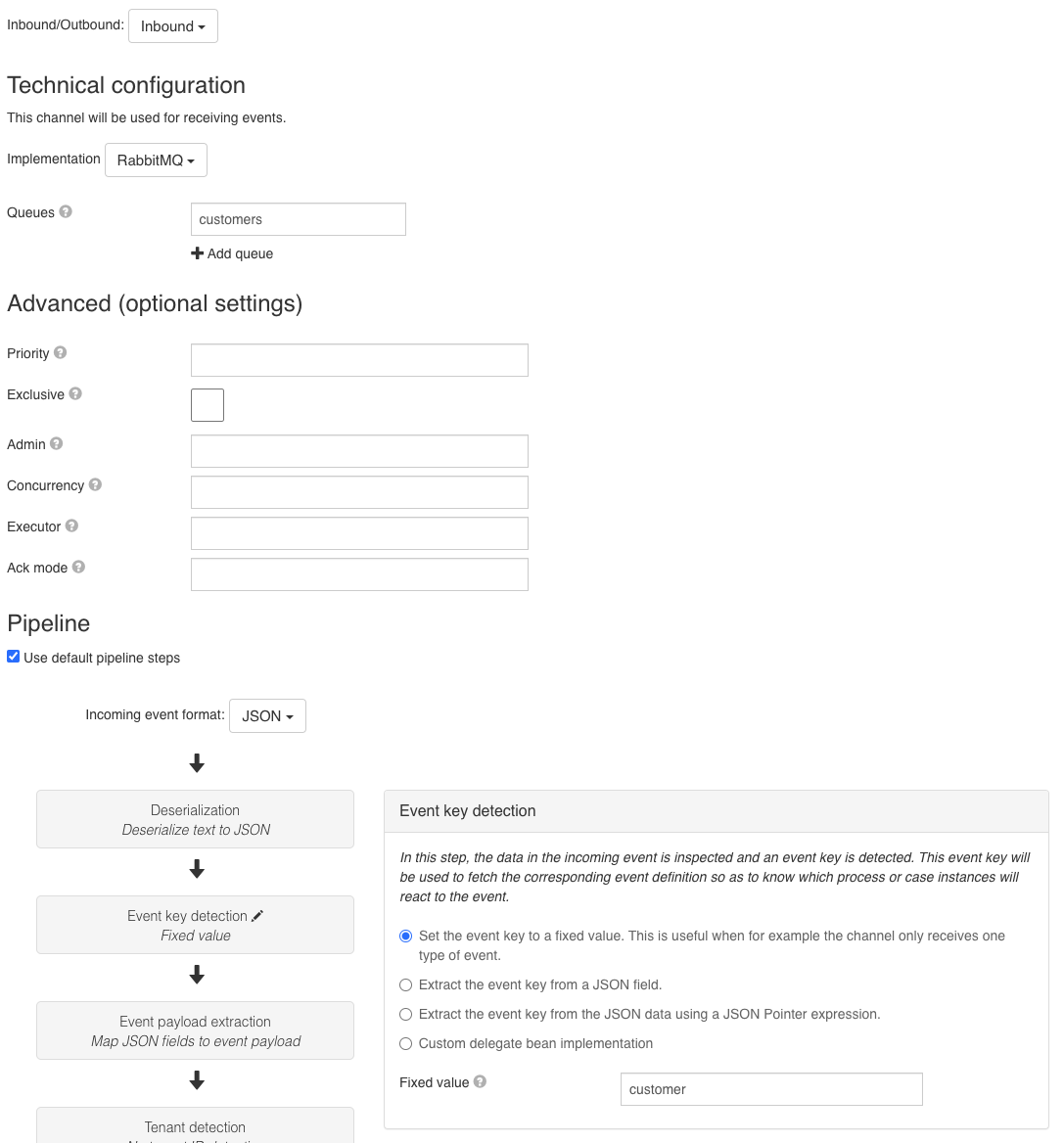

Save both models. Now go to the case model and select the event listener. Click the Inbound channel property, select new and type rabbitInboundChannel as name. Then click the Create button:

The channel model editor will now open. To configure our inbound channel, the following settings need to be configured:

- The Implementation field needs to be set to RabbitMQ.

- The Queues field needs to be set to customers, as we're listening to the same queue as we're sending to.

- In the Pipeline section, select the Event key detection step. In the Fixed value field, type customer. This configures the incoming channel pipeline to mark each incoming event with the event key customer (which is the key we used before for the event model). We can leave all other pipeline steps as-is, since the defaults are fine: they assume JSON as the payload format and a direct mapping from the JSON properties to the event fields with the same name.

If you want to learn more about all configuration options and the various steps of the channel pipeline, head towards the advanced guide on channel and event models

Deploy the app model now.

- Start a case instance, as in this setup we'll capture all events in the same case instance.

- Now start a process instance, select the Gather Data task, fill in the form and click the Complete button.

Now go to the case instance. You'll see that a new task has been created. When going into its details, you can see the event data has correctly been sent to the queue and has been received:

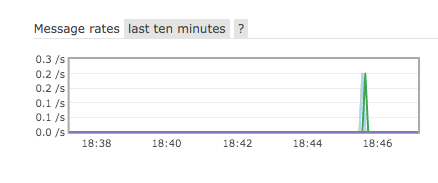

To check that the message has passed through the RabbitMQ queue, open the customers queue details page in the management web UI:

ActiveMQ Implementation

This section explains the ActiveMQ setup, but it applies to all JMS-compatible messaging products (switching the properties and dependencies as needed).

The ActiveMQ integration is disabled by default. To enable it, set the following properties in the application.properties configuration file (changing the server URL and credentials if needed):

application.jms-enabled=true

spring.activemq.broker-url=tcp://localhost:61616

The ActiveMQ dependencies are by default added when using Flowable Work/Engage. No need to do anything in that case.

However, if you're building your own Spring Boot project with Flowable dependencies, you'll need to add the spring-boot-starter-activemq dependency to your pom.xml:

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-activemq</artifactId>

</dependency>

To follow along, you'll need a working ActiveMQ instance. Queues are created automatically, so there is no need to create one. If you don't have an instance yet, the following steps set up a simple one-node ActiveMQ instance (do not use this in production):

- Download the latest release from the Apache ActiveMQ download page.

- Unzip the downloaded file. Open a terminal and go to the bin folder in the unzipped folder.

- Execute ./activemq console (or the equivalent when not on a Unix system).

Alternative installation details can be found on the Apache ActiveMQ website

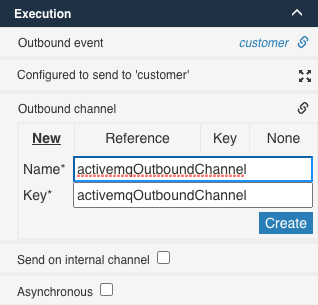

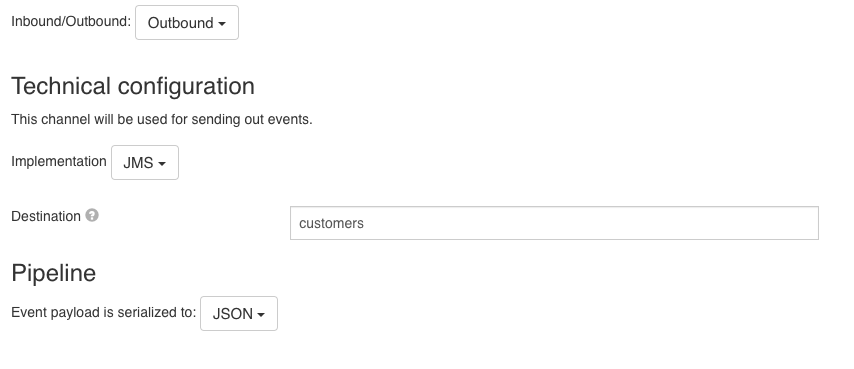

Open the BPMN process model created earlier and select the Send event task. Click the Outbound channel property, select new and type activemqOutboundChannel as name. Then click the Create button:

The channel model editor will now open. To configure our outbound channel, we only need to set three things:

- The first dropdown needs to be set to Outbound.

- The Implementation field needs to be set to JMS.

- The Destination needs to be set to customers.

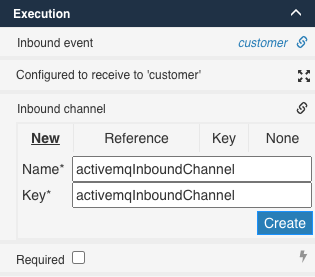

Save both models. Now go to the case model and select the event listener. Click the Inbound channel property, select new and type activemqInboundChannel as name. Then click the Create button:

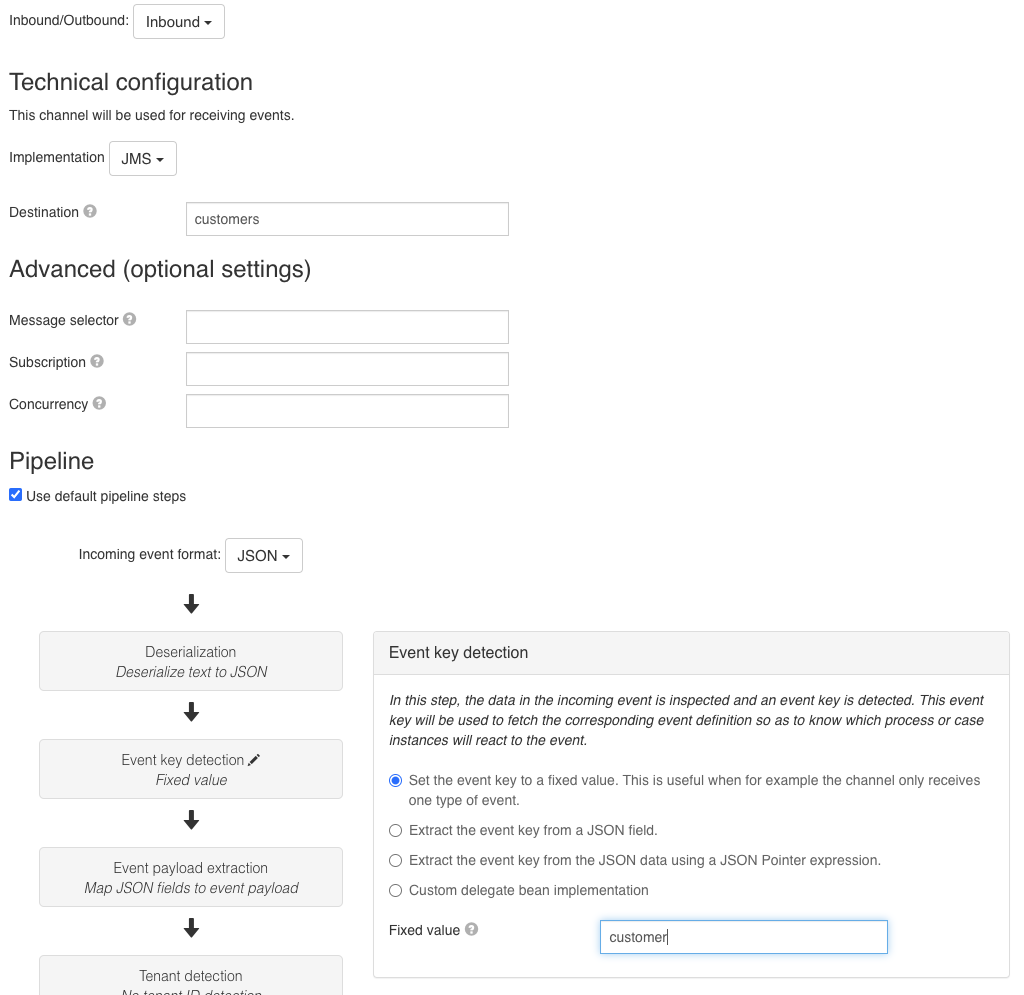

The channel model editor will now open. To configure our inbound channel, the following settings need to be configured:

- The Implementation field needs to be set to JMS.

- The Destination field needs to be set to customers, as we're listening to the same queue as we're sending to.

- In the Pipeline section, select the Event key detection step. In the Fixed value field, type customer. This configures the incoming channel pipeline to mark each incoming event with the event key customer (which is the key we used before for the event model). We can leave all other pipeline steps as-is, since the defaults are fine: they assume JSON as the payload format and a direct mapping from the JSON properties to the event fields with the same name.

If you want to learn more about all configuration options and the various steps of the channel pipeline, head towards the advanced guide on channel and event models

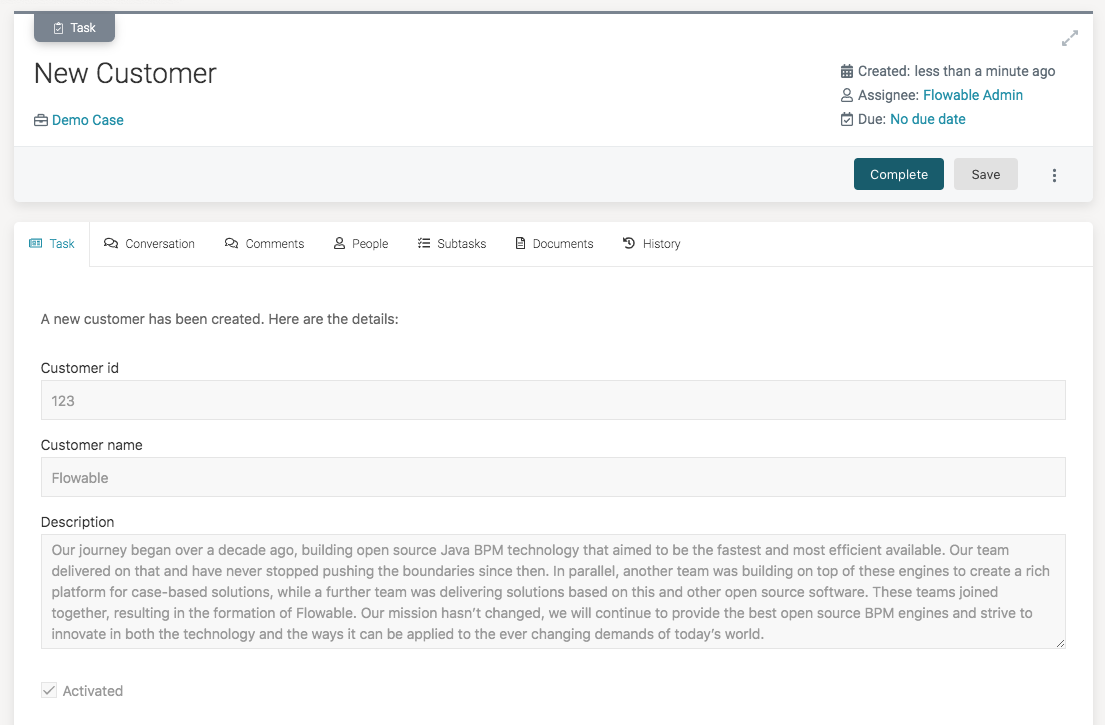

Deploy the app model now.

- Start a case instance, as in this setup we'll capture all events in the same case instance.

- Now start a process instance, select the Gather Data task, fill in the form and click the Complete button.

Now go to the case instance. You'll see that a new task has been created. When going into its details, you can see the event data has correctly been sent to the queue and has been received:

AWS SQS Implementation

The AWS SQS (Simple Queue Service) integration is disabled by default. To enable it, set the following properties in the application.properties configuration file:

application.aws-sqs-enabled=true

flowable.aws.region=eu-central-1

The region property needs to be set to the correct AWS region where the SQS queue is running. The correct value can be retrieved from the AWS management console.

The AWS SQS dependencies are by default added when using Flowable Work/Engage. No need to do anything in that case.

However, if you're building your own Spring Boot project with Flowable dependencies, you'll need to add the flowable-spring-boot-starter-aws-sqs dependency to your pom.xml:

<dependency>

<groupId>com.flowable.core</groupId>

<artifactId>flowable-spring-boot-starter-aws-sqs</artifactId>

</dependency>

To follow along, you'll need an SQS queue.

Creating an SQS queue on AWS:

First of all, you'll need an AWS account for this. Create an account or log in through the AWS Management console.

To be able to connect to the SQS queue, you'll need credentials to connect to it. There are various ways of accomplishing this. Here we'll follow the best practice (at the time of writing) to use an IAM user with only SQS permissions, an access key id and a secret access key specific to the IAM user.

After logging into the AWS Management console, select your username dropdown at the top-right and click on My Security Credentials.

From those screens, create a new group with SQS permissions only. Then create a new user and associate the group with it. Copy the access key id and secret access key, or generate them if none exist.

On your local system, create a new folder .aws in your user home. Create a file named credentials in it with the following content, replacing the placeholders below with the actual values:

[default]

aws_access_key_id=<access_key_id_value>

aws_secret_access_key=<secret_access_key_value>
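A quick sanity check of this file can save some debugging time later. The sketch below is a hypothetical helper, not part of Flowable or the AWS tooling: it parses credentials text in the format shown above and reports which of the two required keys are missing or empty in the default profile.

```python
import configparser
from pathlib import Path

REQUIRED_KEYS = ("aws_access_key_id", "aws_secret_access_key")


def missing_credentials(text: str, profile: str = "default") -> list:
    """Return the required keys that are absent or empty in the given profile."""
    # Interpolation is disabled so that special characters in keys are kept as-is.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    if not parser.has_section(profile):
        return list(REQUIRED_KEYS)
    return [
        key for key in REQUIRED_KEYS
        if not parser.get(profile, key, fallback="").strip()
    ]


def check_default_file() -> list:
    """Check ~/.aws/credentials, the location described above."""
    return missing_credentials((Path.home() / ".aws" / "credentials").read_text())


# Sample content with a placeholder key id and an empty secret.
sample = """[default]
aws_access_key_id=EXAMPLEKEYID
aws_secret_access_key=
"""
print(missing_credentials(sample))
```

Calling `check_default_file()` on your machine runs the same check against the real file; an empty list means both values are present.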

In the AWS Management console, go to or search for SQS. On the next screen, click the Create queue button:

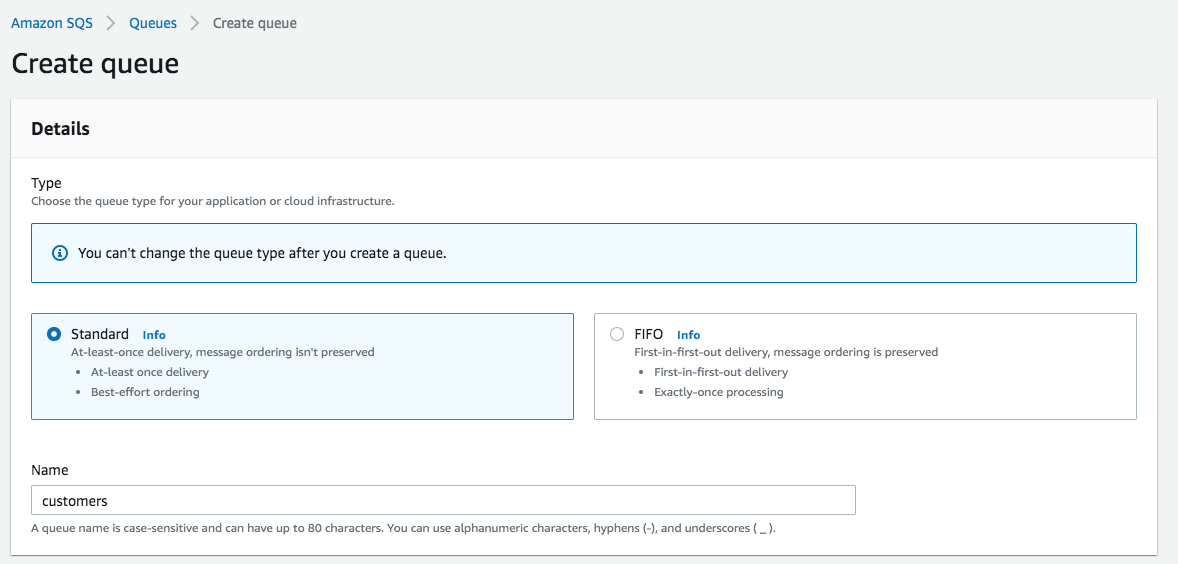

On the next screen, set the name to customers:

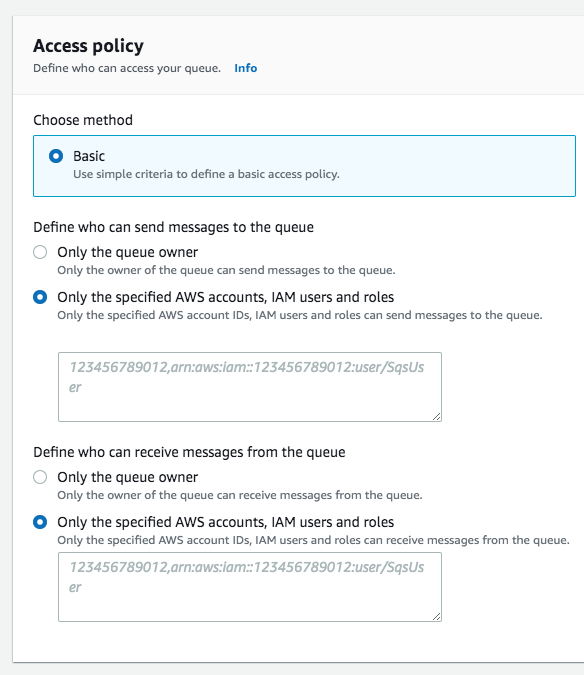

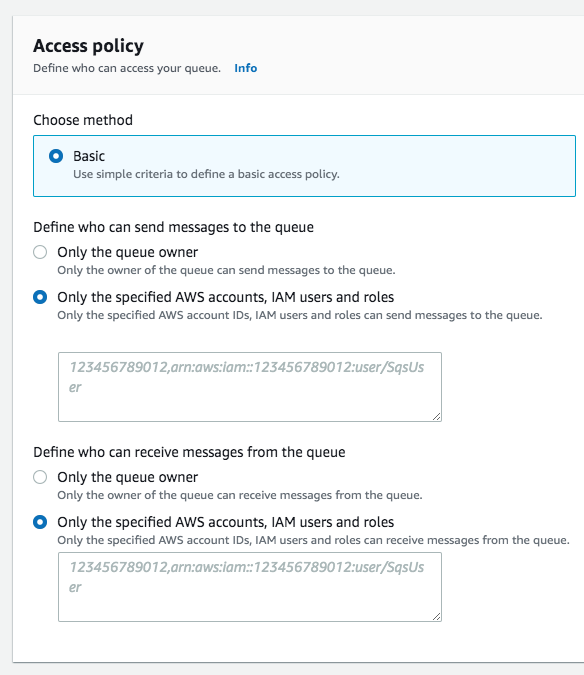

For the Access Policy, select the Only the specified AWS accounts, IAM users and roles option twice, and copy and paste the arn:... value of the IAM user created above:

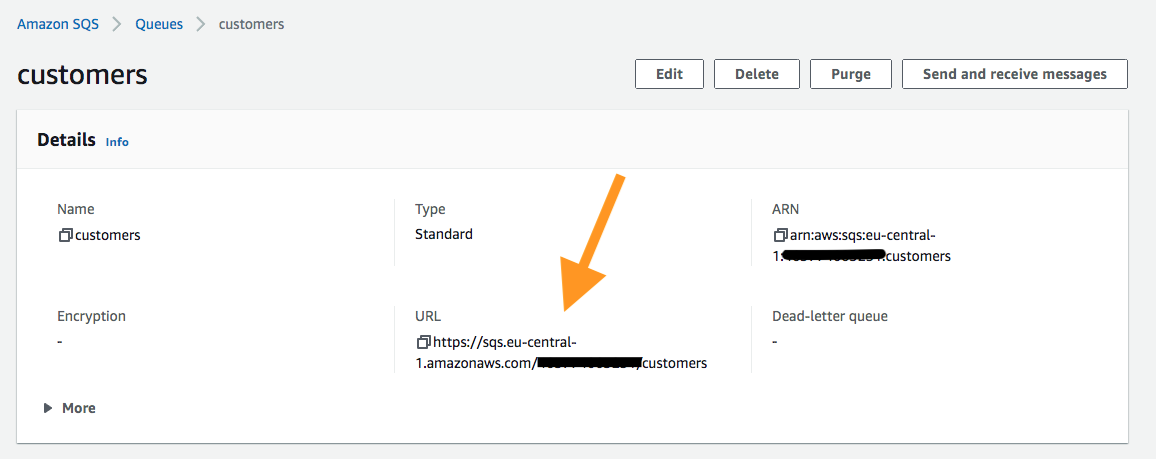

Finally, click Create queue at the bottom of the page. This will create the queue and give it a unique URL. Copy this url, as we'll use it in the channel model below:

SNS and SQS channel implementations share the same user, so grant that user as few permissions as possible.
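A common source of confusion is a queue URL whose region differs from the configured flowable.aws.region property. The following sketch splits a queue URL into its parts so the region can be compared. It assumes the usual https://sqs.<region>.amazonaws.com/<account-id>/<queue-name> layout, and the account id in the example is made up.

```python
from urllib.parse import urlparse

CONFIGURED_REGION = "eu-central-1"  # value of flowable.aws.region


def parse_queue_url(url: str) -> dict:
    """Split a standard SQS queue URL into region, account id and queue name."""
    parsed = urlparse(url)
    host_parts = parsed.netloc.split(".")
    path_parts = parsed.path.strip("/").split("/")
    if len(host_parts) < 3 or host_parts[0] != "sqs" or len(path_parts) != 2:
        raise ValueError(f"not a recognized SQS queue URL: {url}")
    return {
        "region": host_parts[1],
        "account_id": path_parts[0],
        "queue": path_parts[1],
    }


# Made-up account id, for illustration only.
info = parse_queue_url("https://sqs.eu-central-1.amazonaws.com/123456789012/customers")
print(info, info["region"] == CONFIGURED_REGION)
```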

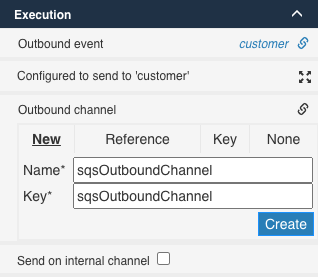

Open the BPMN process model created earlier and select the Send event task. Click the Outbound channel property, select new and type sqsOutboundChannel as name. Then click the Create button:

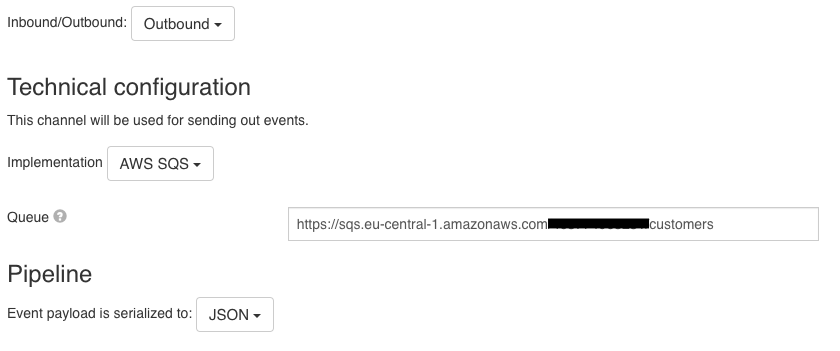

The channel model editor will now open. To configure our outbound channel, we only need to set three things:

- The first dropdown needs to be set to Outbound.

- The Implementation field needs to be set to AWS SQS.

- The Queue needs to be set to the SQS URL.

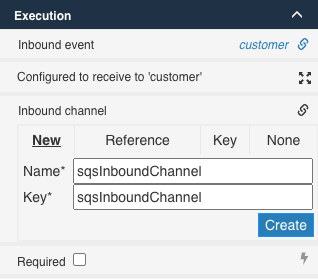

Save both models. Now go to the case model and select the event listener. Click the Inbound channel property, select new and type sqsInboundChannel as name. Then click the Create button:

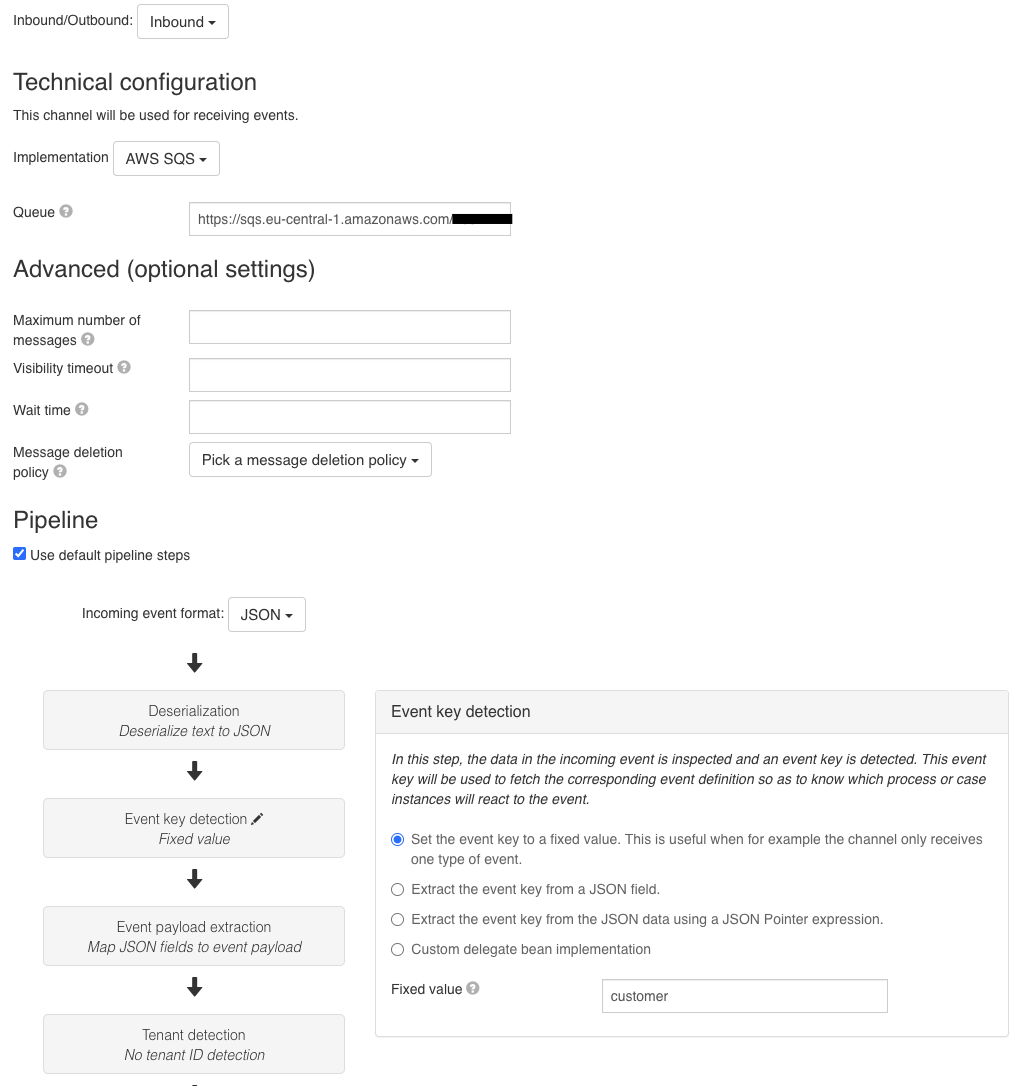

The channel model editor will now open. To configure our inbound channel, the following settings need to be configured:

- The Implementation field needs to be set to AWS SQS.

- The Queue field needs to be set to the URL of the SQS queue, as we're listening to the same queue as we're sending to.

- In the Pipeline section, select the Event key detection step. In the Fixed value field, type customer. This configures the incoming channel pipeline to mark each incoming event with the event key customer (which is the key we used before for the event model). We can leave all other pipeline steps as-is, since the defaults are fine: they assume JSON as the payload format and a direct mapping from the JSON properties to the event fields with the same name.

If you want to learn more about all configuration options and the various steps of the channel pipeline, head towards the advanced guide on channel and event models

Deploy the app model now.

- Start a case instance, as in this setup we'll capture all events in the same case instance.

- Now start a process instance, select the Gather Data task, fill in the form and click the Complete button.

Now go to the case instance. You'll see that a new task has been created. When going into its details, you can see the event data has correctly been sent to the queue and has been received:

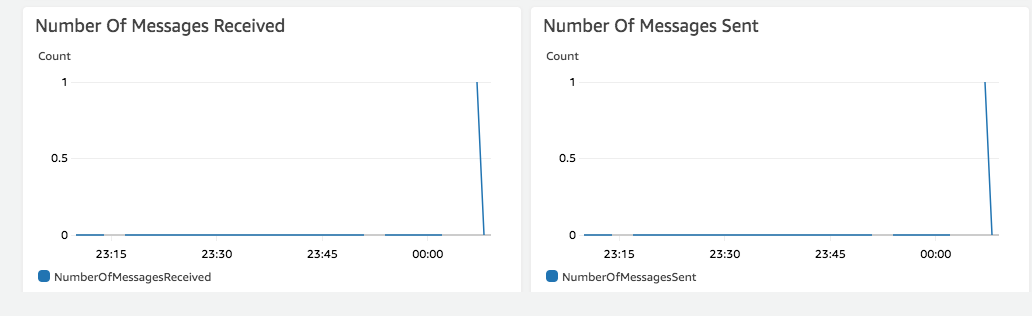

To check that the message has passed through the SQS queue, open the Monitoring tab on the SQS page, which should show one message sent and one message received:

AWS SNS Implementation

The AWS SNS (Simple Notification Service) integration is disabled by default. To enable it, set the following properties in the application.properties configuration file:

application.aws-sns-enabled=true

flowable.aws.region=eu-central-1

The region property needs to be set to the correct AWS region where the SNS topic is running. The correct value can be retrieved from the AWS management console.

The AWS SNS dependencies are by default added when using Flowable Work/Engage. No need to do anything in that case.

However, if you're building your own Spring Boot project with Flowable dependencies, you'll need to add the flowable-spring-boot-starter-aws-sns dependency to your pom.xml:

<dependency>

<groupId>com.flowable.core</groupId>

<artifactId>flowable-spring-boot-starter-aws-sns</artifactId>

</dependency>

To be able to follow along here, you'll need an SNS instance.

Creating an SNS topic on AWS:

First of all, you'll need an AWS account for this. Create an account or log in through the AWS Management console.

To be able to connect to the SNS topic, you'll need credentials to connect to it. There are various ways of accomplishing this. Here we'll follow the best practice (at the time of writing) to use an IAM user with only SNS permissions, an access key id and a secret access key specific to the IAM user.

After logging into the AWS Management console, select your username dropdown at the top-right and click on My Security Credentials.

From those screens, create a new group with SNS permissions only. Then create a new user and associate the group with it. Copy the access key id and secret access key, or generate them if none exist.

On your local system, create a new folder .aws in your user home. Create a file named credentials in it with the following content, replacing the placeholders below with the actual values:

[default]

aws_access_key_id=<access_key_id_value>

aws_secret_access_key=<secret_access_key_value>

In the AWS Management console, go to or search for SNS. On the next screen, click the Topics on the left side menu.

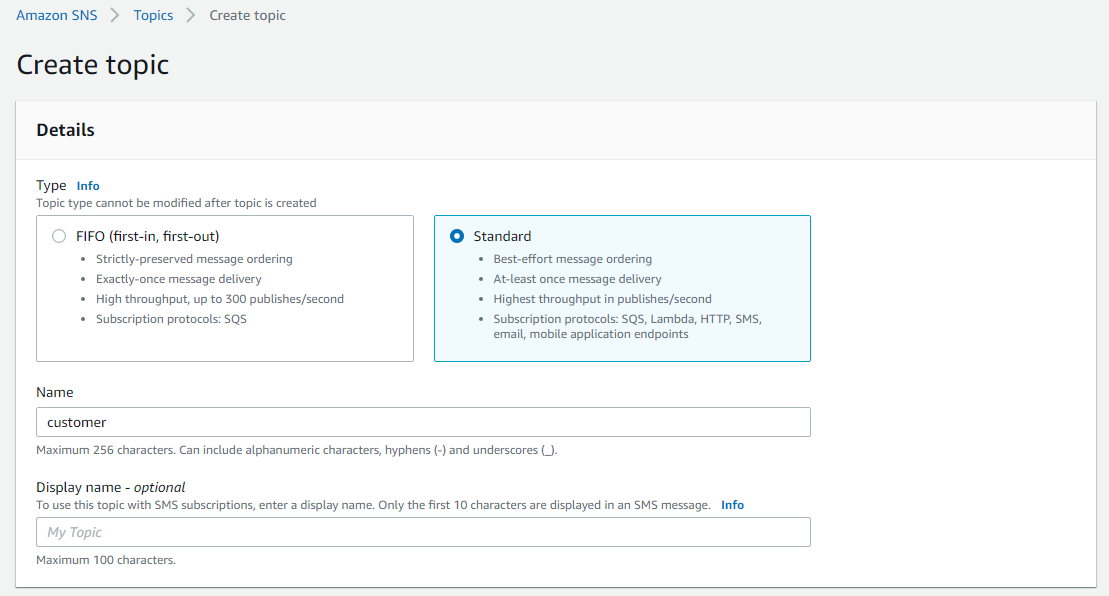

On the next screen, click on Create topic

In the creation details, select Standard and set the name to customer.

The Flowable SNS outbound channel implementation also supports FIFO topics.

For the Access Policy, select the Only the specified AWS accounts option twice, and copy and paste the arn:... value of the IAM user created above:

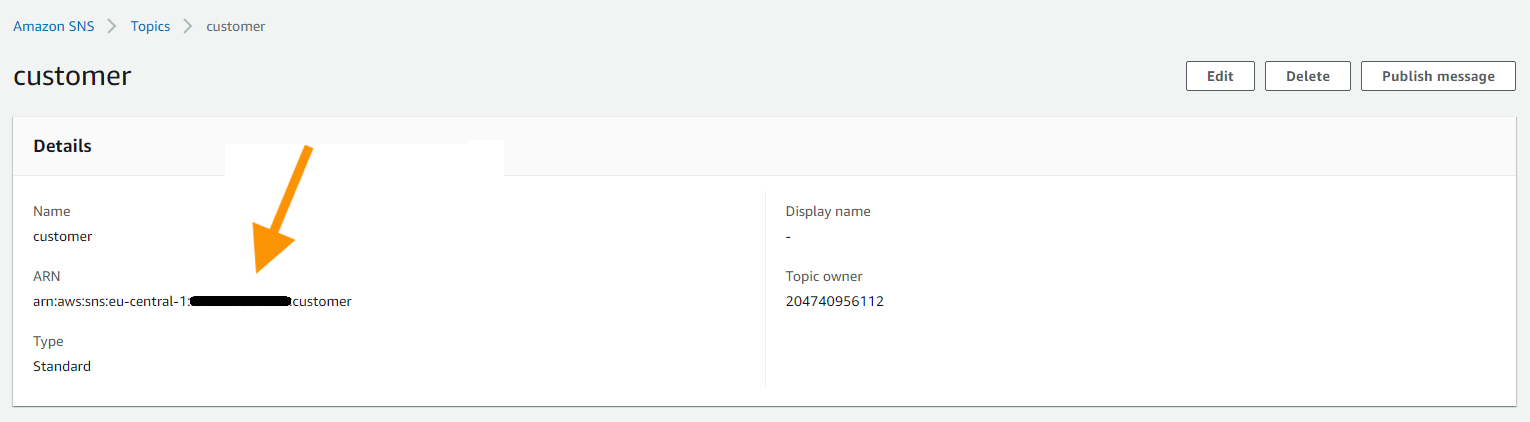

Finally, click Create topic at the bottom of the page. This will create the topic and give it a unique ARN. Copy this ARN, as we'll use it in the channel model below:

SNS and SQS channel implementations share the same user, so grant that user as few permissions as possible.
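Before pasting the topic ARN into the channel model, it can help to check that it has the expected shape. The sketch below assumes the standard arn:<partition>:sns:<region>:<account-id>:<topic-name> layout and also flags FIFO topics, whose names end in .fifo. The account id in the example is made up.

```python
def parse_topic_arn(arn: str) -> dict:
    """Split an SNS topic ARN into its parts and flag FIFO topics."""
    parts = arn.split(":")
    if len(parts) != 6 or parts[0] != "arn" or parts[2] != "sns":
        raise ValueError(f"not a recognized SNS topic ARN: {arn}")
    topic = parts[5]
    return {
        "partition": parts[1],
        "region": parts[3],
        "account_id": parts[4],
        "topic": topic,
        "fifo": topic.endswith(".fifo"),  # FIFO topic names carry this suffix
    }


# Made-up account id, for illustration only.
info = parse_topic_arn("arn:aws:sns:eu-central-1:123456789012:customer")
print(info)
```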

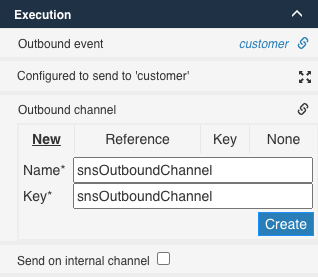

Open the BPMN process model created earlier and select the Send event task. Click the Outbound channel property, select new and type snsOutboundChannel as name. Then click the Create button:

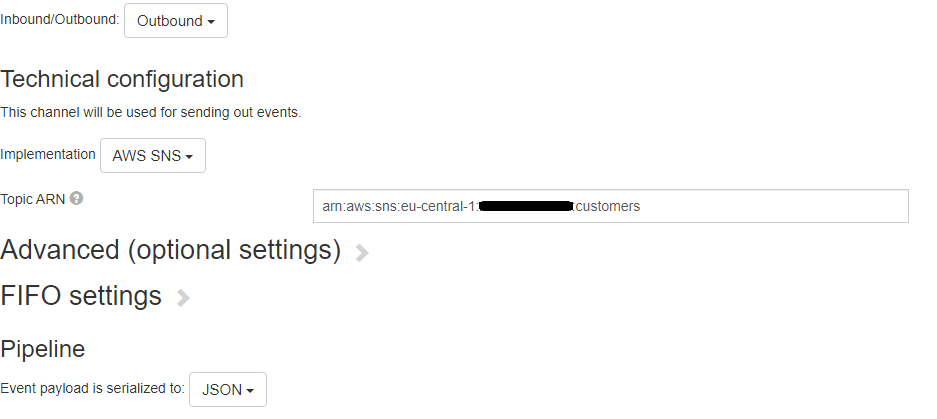

The channel model editor will now open. To configure our outbound channel, we need to only set three things:

- The first dropdown needs to be set to Outbound.

- The Implementation field needs to be set to AWS SNS.

- The Topic ARN needs to be set to the SNS topic ARN.

The SNS channel implementation does not support inbound AWS SNS channels. If you want to learn more about all configuration options and the various steps of the channel pipeline, head towards the advanced guide on channel and event models

Deploy the app model now.

- Start a process instance, select the Gather Data task, fill in the form and click the Complete button. The message gets published and the subscribed services are notified.

To check that the message has passed through the SNS topic, subscribe to it via email or an SQS queue on the AWS SNS page.