Agent Introduction

Flowable AI Agents enable the execution of intelligent tasks using large language models (LLMs), APIs, and internal knowledge. They are configurable components that perform classification, orchestration, data extraction, and external interactions based on your use case.

Please see the AI introduction for more details.

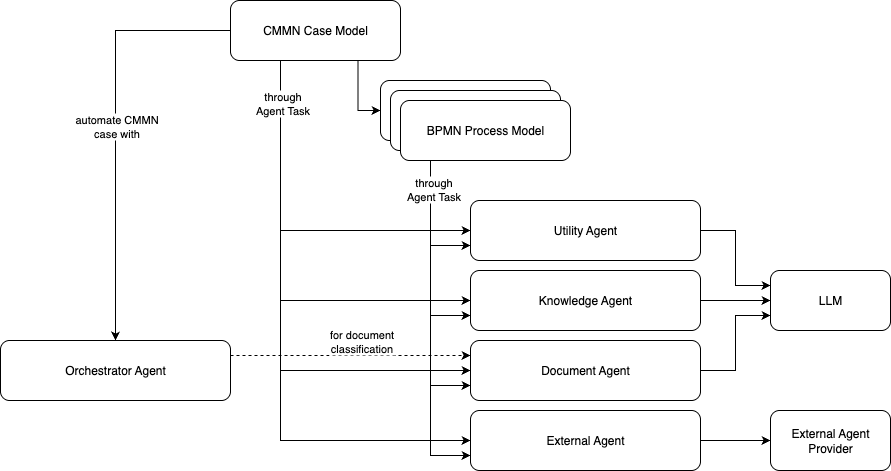

Agent Overview Diagram

Agent Types

Flowable provides several types of agents, each designed for specific scenarios:

- Utility Agent: Executes simple LLM prompts with defined input/output, either structured or unstructured.

- Orchestrator Agent: Coordinates multiple agents and external APIs. Typically invoked from a CMMN case model.

- Knowledge Agent: Uses a knowledge base model to retrieve contextual information at runtime.

- Document Agent: Classifies documents and extracts structured data. Often used as part of an Orchestrator Agent.

- External Agent: Connects to third-party agents such as Salesforce Agentforce or Azure AI Foundry.

- A2A Agent: Connects to third-party agents using the Agent2Agent (A2A) Protocol.
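As a compact reference, the agent types above and the start options documented under How to Start an Agent can be captured in a small Java enum. This is a hypothetical illustration only: `AgentType` and its methods are not part of any Flowable API. They simply encode the rules stated in this document.

```java
// Hypothetical summary of the agent types and their documented start options.
// AgentType and its methods are illustrative only, not part of the Flowable API.
enum AgentType {
    UTILITY, ORCHESTRATOR, KNOWLEDGE, DOCUMENT, EXTERNAL, A2A;

    // Orchestrator Agents are started as part of a CMMN case model.
    boolean startedThroughCaseModel() {
        return this == ORCHESTRATOR;
    }

    // All other types are placed in a BPMN or CMMN model via the AI Agent Task.
    boolean usableAsAiAgentTask() {
        return this != ORCHESTRATOR;
    }

    // Starting through the agent REST API is possible for every type except the
    // Orchestrator Agent, and only if the REST API is enabled in the agent model.
    boolean startableViaRestApi(boolean restEnabledInAgentModel) {
        return this != ORCHESTRATOR && restEnabledInAgentModel;
    }
}
```

For example, `AgentType.DOCUMENT.startableViaRestApi(false)` is `false`, because the REST API has not been enabled in that agent model.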

Linking to Model Settings

Each agent can be configured through the model settings to use a specific LLM or endpoint.

This allows behavior to be adjusted individually for each agent, including prompt customization and output structure.

See: Model Settings Configuration

How to Start an Agent

In a process or case

- Orchestrator Agents are started as part of a CMMN case model.

- All other agent types are used in a BPMN or CMMN model through the AI Agent Task.

REST API

- All agent types except the Orchestrator Agent can also be started through the Flowable REST API, provided the REST API is enabled in the agent model.

- Orchestrator Agents are invoked through their case, so the CMMN API can be used to start them by starting the case.
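Because an Orchestrator Agent is invoked through its case, starting it over HTTP amounts to creating a case instance. The sketch below only builds the request with the JDK's `java.net.http` API and does not send it. It assumes the open-source Flowable CMMN REST resource `cmmn-api/cmmn-runtime/case-instances` and a JSON body that selects the case by `caseDefinitionKey`; the base URL and the key `myOrchestratorCase` are placeholders, authentication is omitted, and the exact path may differ for your Flowable product and version.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Builds (but does not send) a request that starts a CMMN case instance.
// Assumptions: baseUrl has no trailing slash, the CMMN REST API is exposed under
// "/cmmn-api", and the key contains no characters that need JSON escaping.
final class StartCaseRequest {
    static HttpRequest build(String baseUrl, String caseDefinitionKey) {
        // Minimal JSON body selecting the case definition by its key.
        String body = "{\"caseDefinitionKey\":\"" + caseDefinitionKey + "\"}";
        return HttpRequest.newBuilder()
                .uri(URI.create(baseUrl + "/cmmn-api/cmmn-runtime/case-instances"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
    }
}
```

To actually send it, pass the request to `HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString())` after adding whatever credentials your deployment requires.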

Auditing Agent Activity

All agent executions can be audited and stored in the Flowable database. Audit logs are accessible through Flowable Control and include request/response metadata for transparency and debugging.

Asynchronicity and Transactionality

Orchestrator Agent operations ensure that AI service invocations happen outside of a database transaction: no database transaction is held open while the call is in progress. Only once the AI service returns a result does the related case instance continue, in a new transaction. See the engine implementation details for more information on how this works for the Orchestrator Agent specifically.

For the other agent types, the same behavior can be achieved with the standard 'asynchronous' flag on BPMN and CMMN tasks. When the flag is set, the behavior differs slightly from that of regular asynchronous tasks: the corresponding job is still executed in the background, but no database transaction is created for it, so the AI service invocation does not hold any database resources. When the flag is not set, the call happens inside the database transaction that triggered the invocation (for example, completing a user task) and keeps that transaction open for the entire duration of the call, which hurts performance and overall throughput.
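To make the difference concrete, the following conceptual Java model records whether a database transaction is open at the moment the AI service is called, with and without the asynchronous flag. `TxTrace` and `AgentInvocation` are hypothetical types that only simulate the ordering described above; they are not Flowable classes and perform no real database or AI work.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Supplier;

// Conceptual model only: a fake "transaction" that records events so the two
// invocation styles can be compared. Not Flowable's internal implementation.
final class TxTrace {
    final List<String> events = new ArrayList<>();
    private boolean open;

    void begin() { open = true; events.add("tx-begin"); }
    void commit() { open = false; events.add("tx-commit"); }

    // Records whether a transaction was open while the AI service was called.
    String callAi(Supplier<String> aiService) {
        events.add(open ? "ai-call(tx-open)" : "ai-call(no-tx)");
        return aiService.get();
    }
}

final class AgentInvocation {
    // Flag not set: the AI call runs inside the transaction that triggered it
    // (e.g. completing a user task), keeping that transaction open throughout.
    static List<String> synchronous(Supplier<String> ai) {
        TxTrace t = new TxTrace();
        t.begin();
        String result = t.callAi(ai);
        t.events.add("continue:" + result);
        t.commit();
        return t.events;
    }

    // Flag set: the triggering transaction commits, the AI call runs without a
    // transaction, and the instance continues in a new transaction afterwards.
    static List<String> asynchronous(Supplier<String> ai) {
        TxTrace t = new TxTrace();
        t.begin();
        t.commit();                   // triggering transaction ends; job runs later
        String result = t.callAi(ai); // no transaction held during the AI call
        t.begin();                    // new transaction to continue the instance
        t.events.add("continue:" + result);
        t.commit();
        return t.events;
    }
}
```

With a stub such as `() -> "ok"`, the synchronous variant records `ai-call(tx-open)` between a single begin and commit, while the asynchronous variant records `ai-call(no-tx)` between two separate transactions.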