Getting Started with Orchestrator Agents

Goal & Use Case

In this example, we’ll walk through the basics of building your first orchestrator agent to assist a case model. Along the way, we’ll also include other agent types to show how they work together in practice.

By following the steps on this page, you’ll gain an understanding of:

- How an orchestrator agent interacts with a case model.

- What intents are and how to model them.

- How to use AI activation to automate or suggest next steps in your orchestration logic.

By the end, you’ll have a working case setup that demonstrates how AI can dynamically enhance and guide execution, while keeping full control in your hands.

The example use case here is intentionally kept simple to focus on the core concepts, without getting lost in the complexity of real-world scenarios. We’ll use a super-simple loan application as our use case, something most people are familiar with at a high level. This allows us to concentrate on understanding how the orchestrator agent works, how intents are triggered, and how AI activation can streamline the case flow.

Setting Up The Case

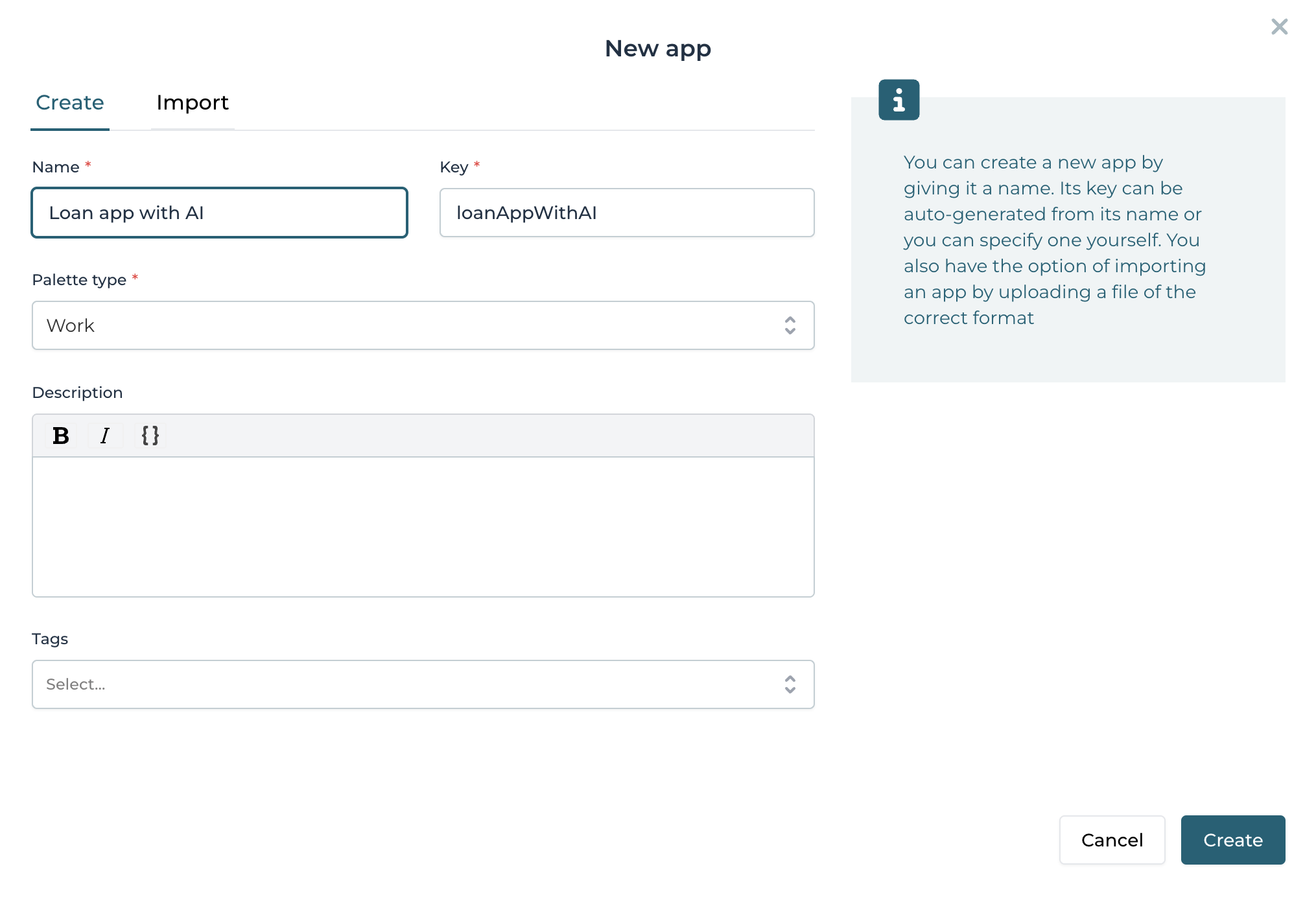

Let's create a basic app with a simple case model with a start form. In Flowable Design, create a new app in the usual way. Give it a name like Loan app with AI:

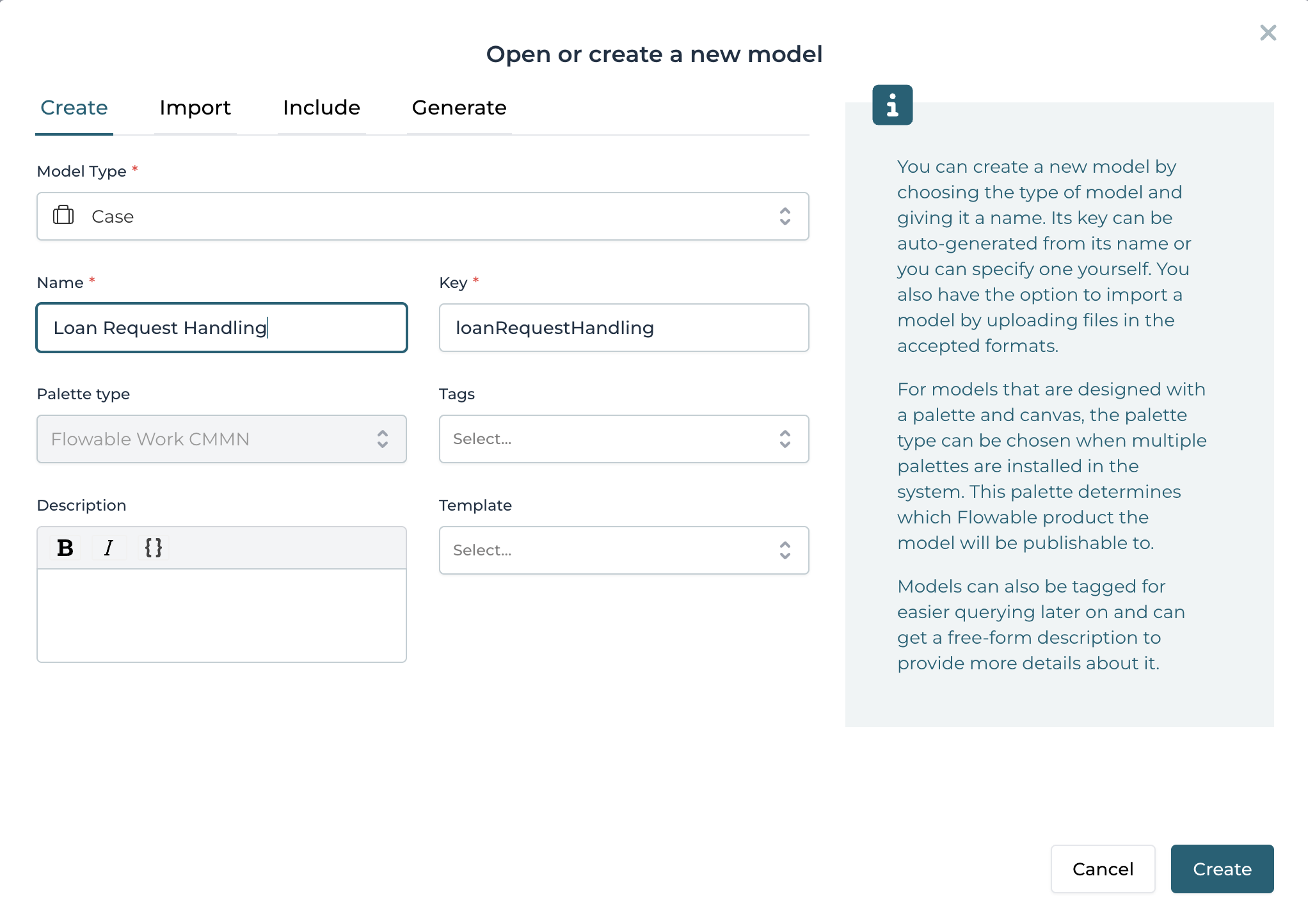

Create a new case model and give it a name like Loan request handling:

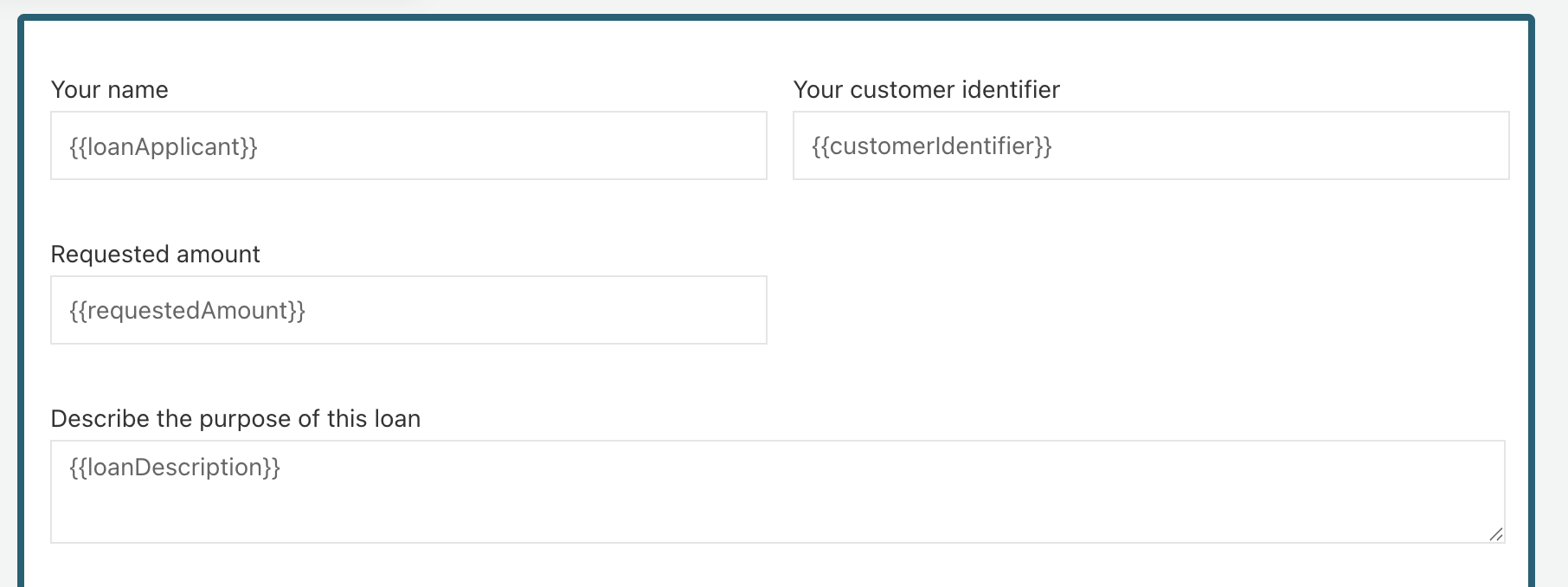

And finally, let's add a start form to the case that asks for the loan applicant's name, a customer ID, the amount needed and a free-form description with some more details:

Isn't this too simple?

Yes, of course. In reality, requesting a loan is far more involved than what we're doing here. However, the goal of this page is to learn the basics of the AI features, not to give a full overview of how a realistic loan request would be handled by a financial institution.

Linking an Orchestrator Agent

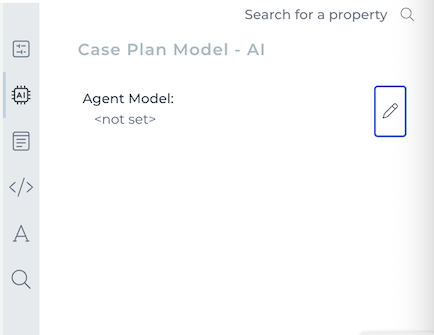

Let's add some AI into the mix. Click on the case plan model (the large rectangle), select the AI tab and click on the Agent Model property:

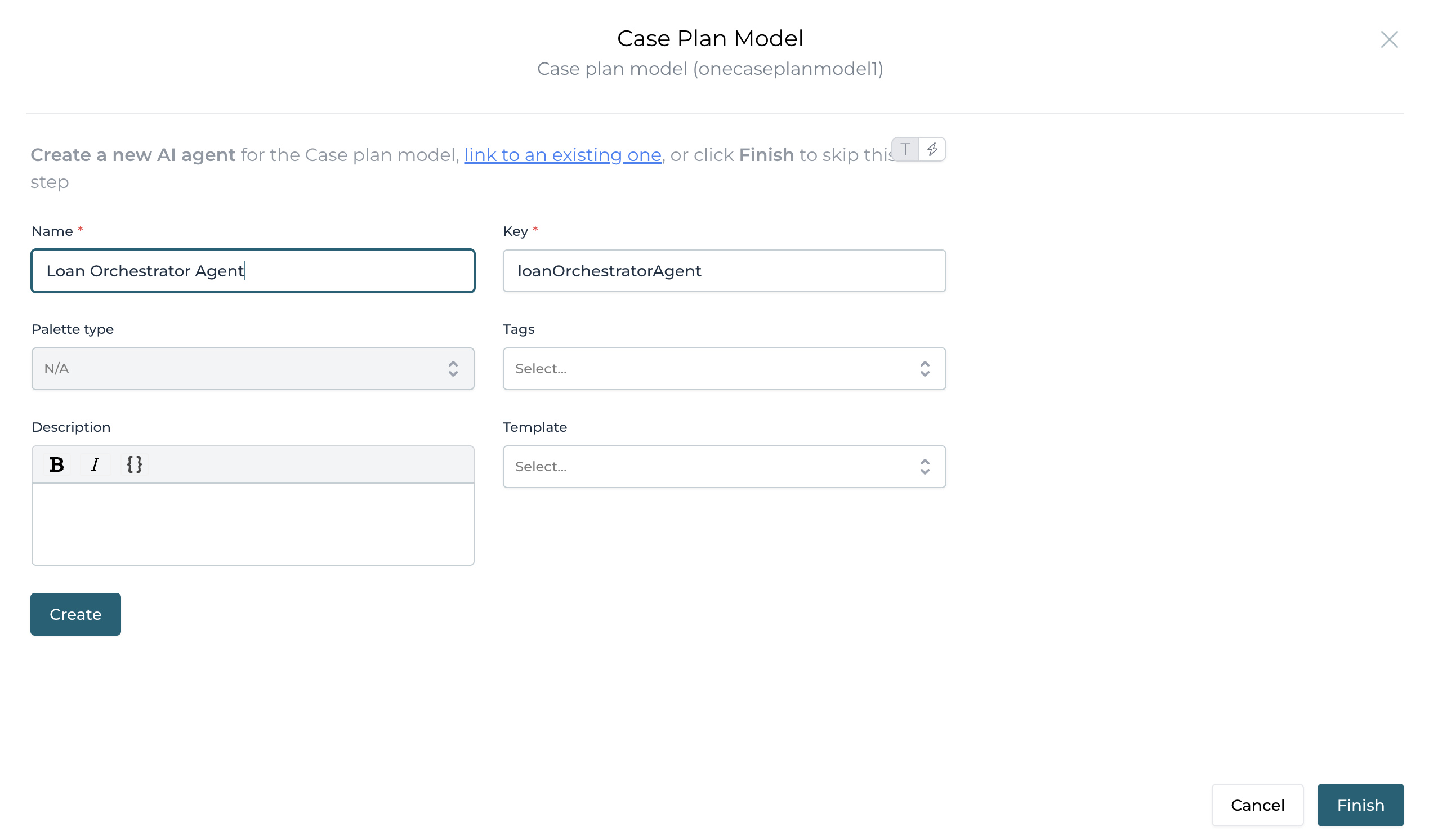

In the popup, create a new agent model like you would create any other model:

The agent model is now created and displayed:

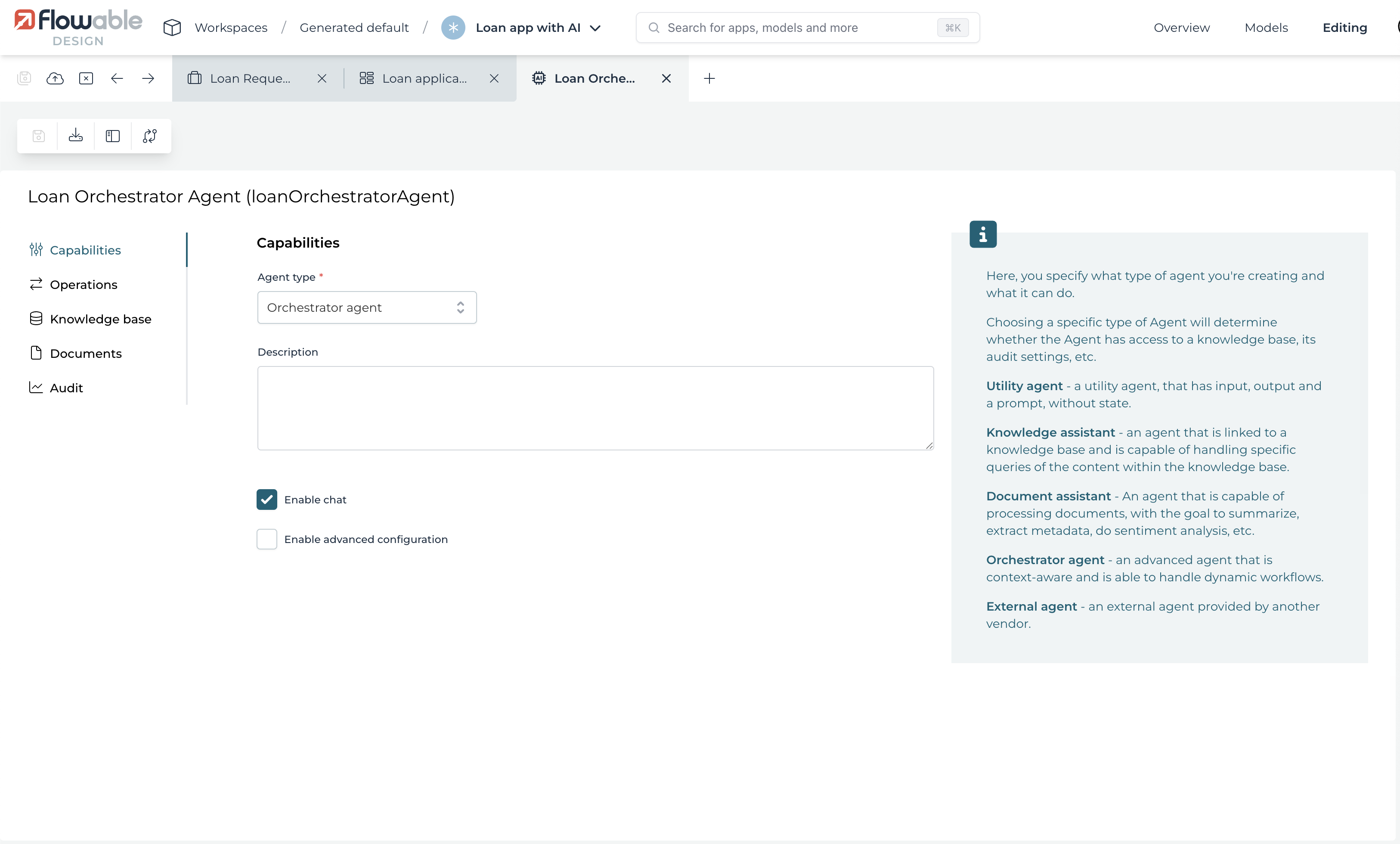

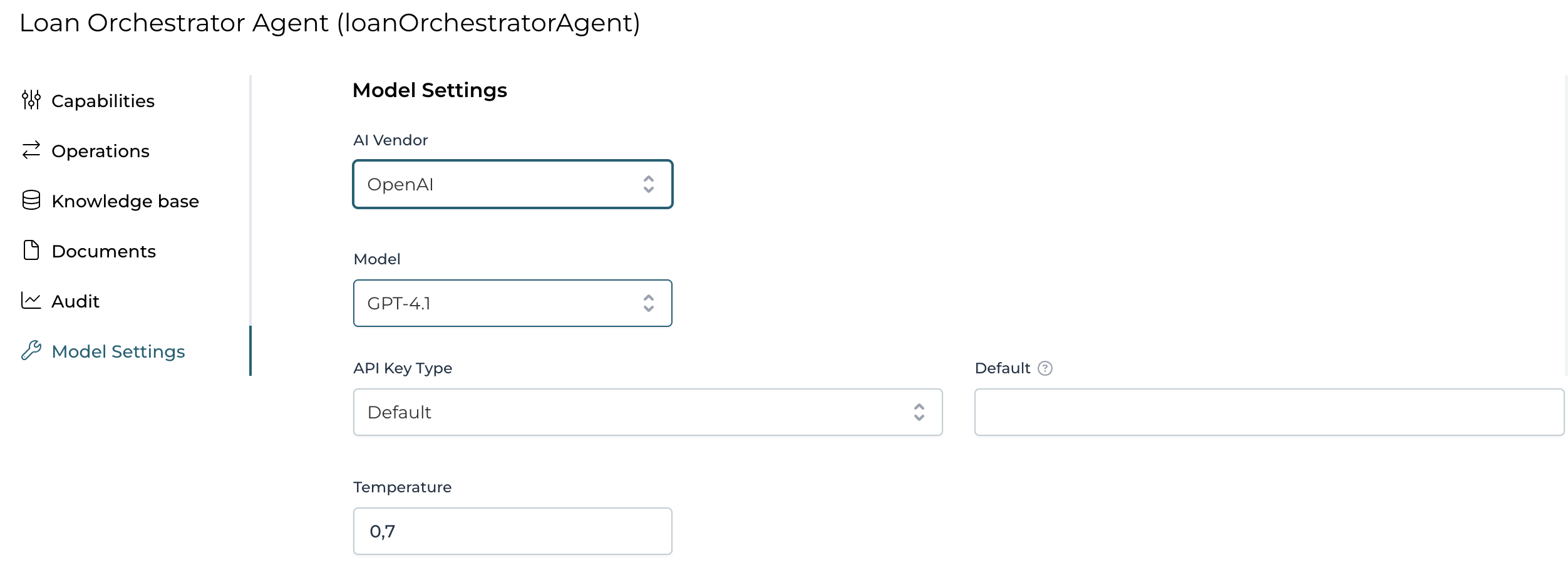

Clicking the Operations tab on the left-hand side shows that the agent is already prepopulated with a few default operations:

- Chat: This operation is used whenever a user interacts with the agent over chat. The default is to be helpful and keep a professional tone.

- Intent detection: Used during the intent detection phase, which is described later on this page.

- AI activation analysis: Used during the activation phase, which is covered in the next section.

Operations?

Each agent has one or more operations, where each operation defines a specific functionality along with its own input and output parameters and prompts. The configuration found in other tabs, such as the foundational model settings, applies to all operations within the same agent.

In some cases (though rarely needed), it's possible to override these default operations. This should be done with caution, as these definitions are used at the engine level and modifying them incorrectly can lead to broken or unexpected behavior in running models.
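To make the relationship between an agent and its operations concrete, here is a minimal Python sketch. It is a conceptual illustration only, not Flowable's data model or API: the class names, field names, the agent name and the prompts of the two non-chat operations are hypothetical. It shows the one property stated above, namely that model settings are defined once per agent and shared by all of its operations.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ModelSettings:
    """Agent-level foundational model settings (hypothetical fields)."""
    provider: str
    model: str


@dataclass(frozen=True)
class Operation:
    """One operation: its own prompt plus its own input and output parameters."""
    name: str
    prompt: str
    inputs: tuple = ()
    outputs: tuple = ()


@dataclass
class AgentModel:
    name: str
    settings: ModelSettings  # configured once, shared by every operation below
    operations: dict = field(default_factory=dict)

    def add(self, op: Operation) -> None:
        self.operations[op.name] = op

    def resolve(self, op_name: str) -> tuple:
        # Each operation has its own prompt but runs with the agent-wide settings.
        return self.settings, self.operations[op_name]


agent = AgentModel("Loan orchestrator", ModelSettings("openai", "gpt-4.1"))
agent.add(Operation("Chat", "Be helpful and keep a professional tone."))
agent.add(Operation("Intent detection", "Decide which modeled intent, if any, matches the input."))
agent.add(Operation("AI activation analysis", "Decide which plan items should be activated."))

settings, chat = agent.resolve("Chat")
print(settings.model, "|", chat.prompt)
```

Changing the settings object in this sketch changes the behavior of every operation at once, which mirrors why overriding the default operations themselves should be done with care.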

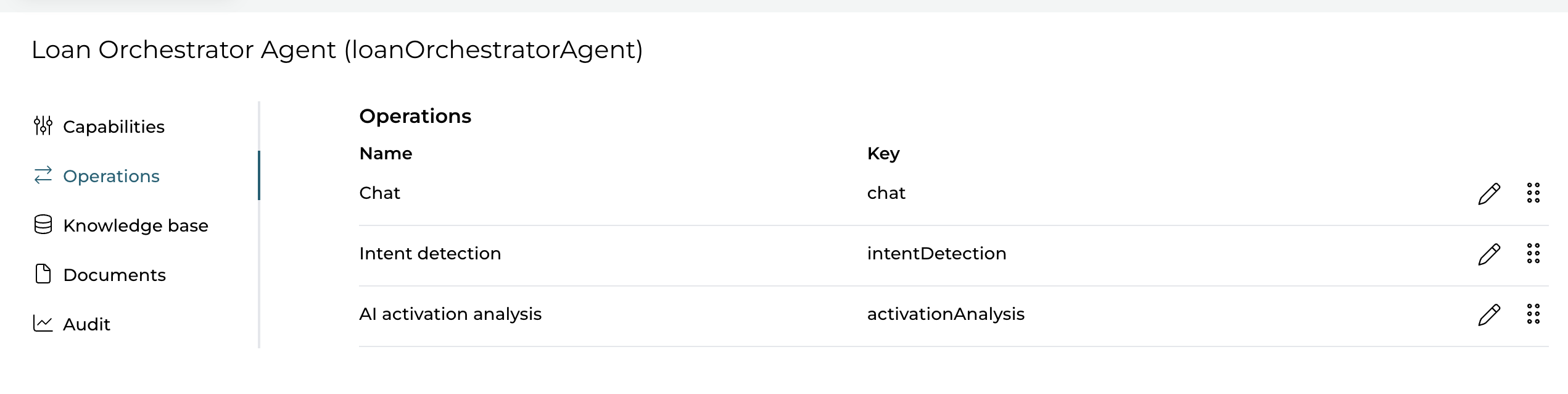

The agent needs a foundational model to function. Go back to the Capabilities tab and check the Enable advanced configuration flag. A new tab Model Settings now appears on the left-hand side.

Here, you can add a key and other settings, depending on the foundational model chosen.

Should I store the key in the model?

Generally speaking, no.

While this is convenient for demo purposes, we advise using the Secret option instead of Default for the Api Key Type, and referencing a secret by an identifier set in Flowable Control or Hub.

Alternatively, your system admin might have configured a fallback API key (and other settings) in Flowable Work that can be used by all users. In that case, the Enable advanced configuration can be left unchecked and this global key will be used automatically.
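The key-selection rules in this callout can be summarized as a small decision function. This is a hypothetical Python sketch, not how Flowable resolves keys internally: the function name, its parameters and the use of a plain mapping as a stand-in for the secret store in Flowable Control or Hub are all assumptions.

```python
from typing import Mapping, Optional


def resolve_api_key(
    advanced_config: bool,
    key_type: str,
    key_value: str,
    secrets: Mapping[str, str],
    fallback_key: Optional[str],
) -> str:
    """Hypothetical sketch of the key-selection rules described above."""
    if not advanced_config:
        # Advanced configuration unchecked: use the admin-configured global key.
        if fallback_key is None:
            raise ValueError("no fallback API key configured")
        return fallback_key
    if key_type == "Secret":
        # key_value is only an identifier; the actual key lives in the secret store.
        try:
            return secrets[key_value]
        except KeyError:
            raise ValueError(f"unknown secret identifier: {key_value}") from None
    if key_type == "Default":
        # The key itself is stored in the model: convenient for demos only.
        return key_value
    raise ValueError(f"unsupported key type: {key_type}")


secrets = {"loan-agent-openai": "sk-example"}  # hypothetical identifier and value
print(resolve_api_key(True, "Secret", "loan-agent-openai", secrets, None))
```

With the Secret option, only the identifier ends up in the model, so the model can be exported or shared without exposing the key.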

At the time of writing, we were using OpenAI GPT-4.1 under the hood.

AI Activation

Suggestion Mode

Let's assume that in our example financial institution, loan requests for real estate are handled differently from all the others. What we'd like to do now is use the information in the start form to determine whether or not a request is about real estate.

With the basics set up, let's add our first taste of AI to the model. Add two stages to the case model and add a user task to each, as shown below. Give both stages a fitting name, such as Real estate and Regular loan.

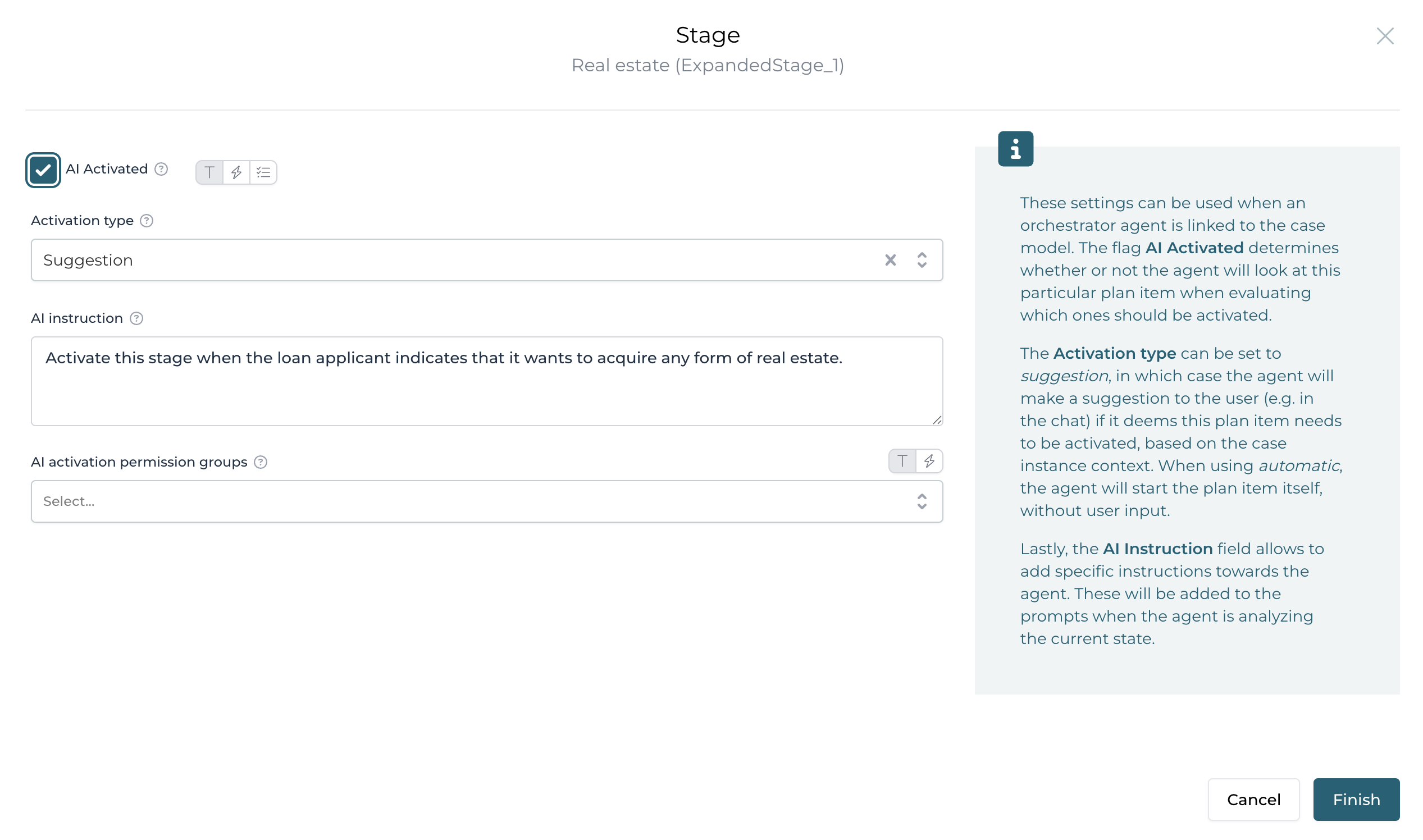

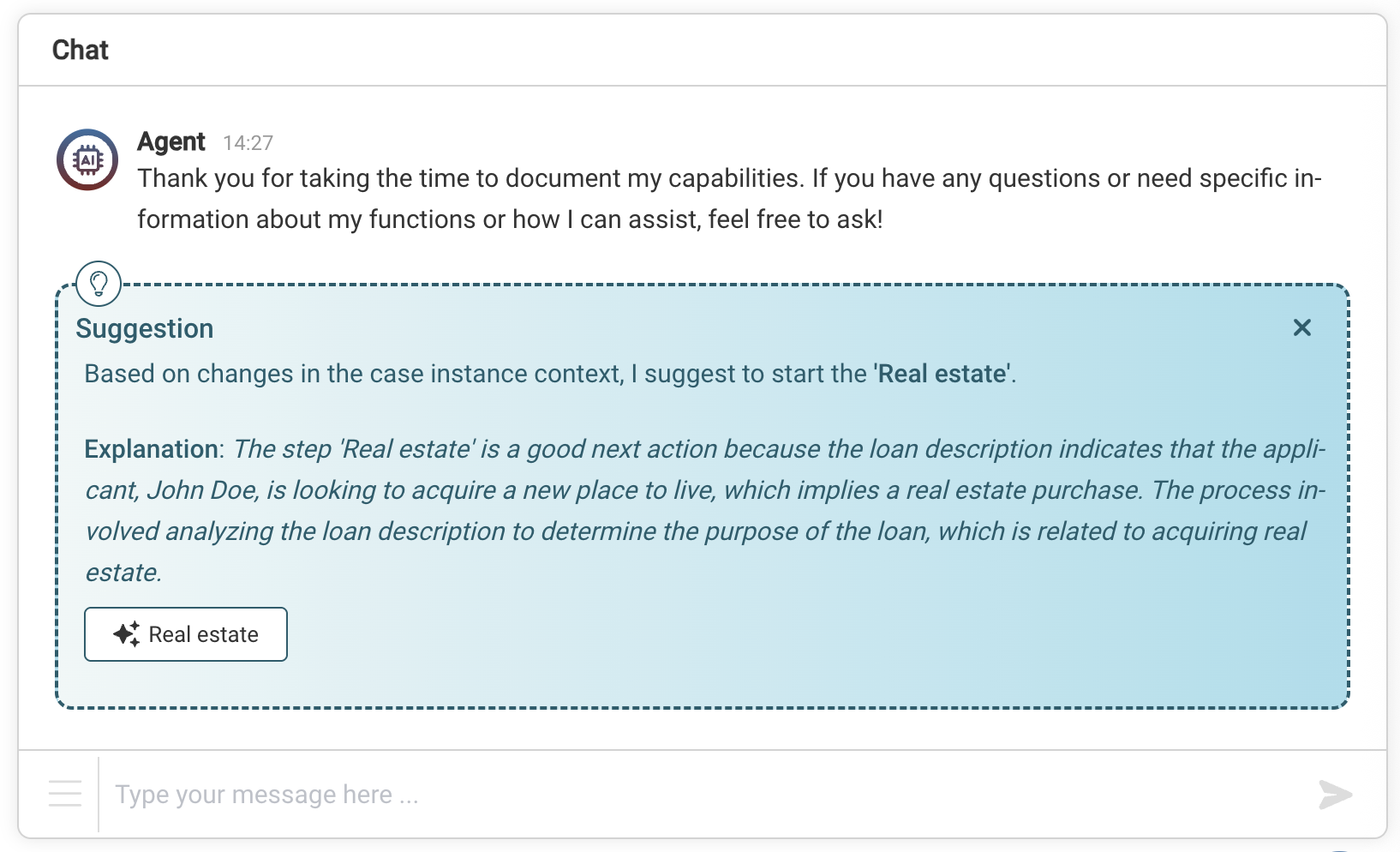

Click on your first stage, select the AI tab, and click on the AI Activation property. This opens up a popup which we fill in as follows:

- AI Activated: checked

- Activation Type: Suggestion

- AI Instruction: Activate this stage when the loan applicant indicates that it wants to acquire any form of real estate.

When doing the AI Activation analysis, the underlying model receives (amongst other things) the instance variables, the plan item names and any AI instructions that have been set. What we've done here is explain clearly that this stage should be activated when the request is about real estate.

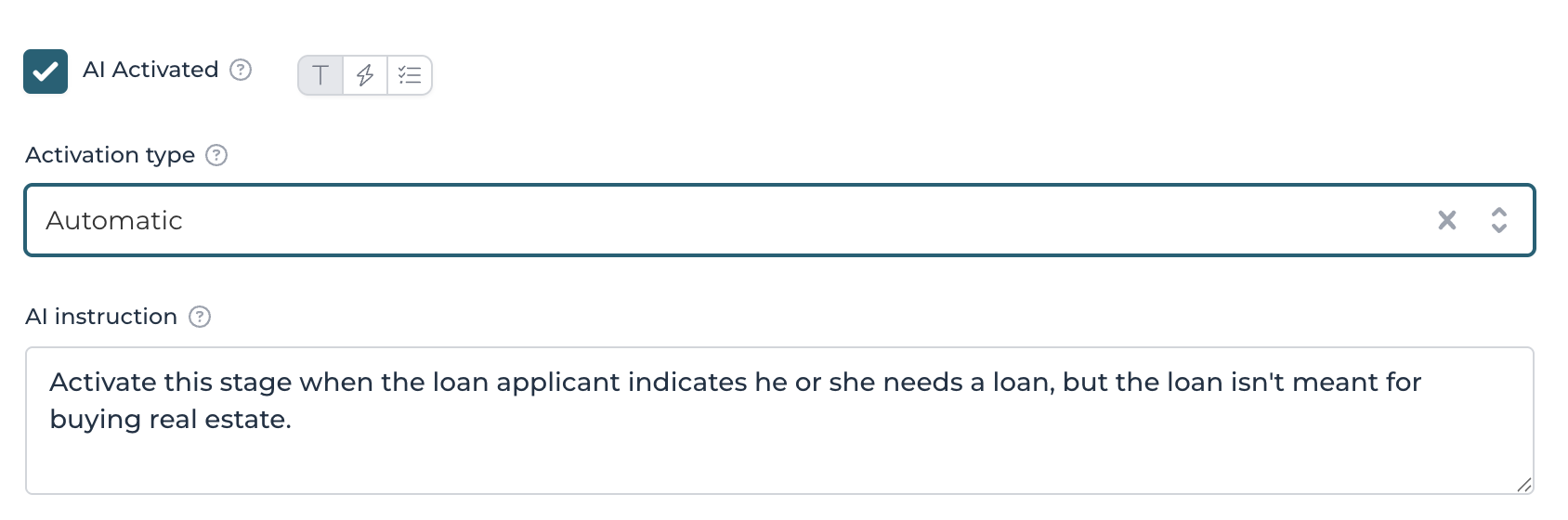

Fill in the second stage similarly:

- AI Activated: checked

- Activation Type: Suggestion

- AI Instruction: Activate this stage when the loan applicant indicates he or she needs a loan, but the loan isn't meant for buying real estate.
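Conceptually, the AI activation analysis combines the case variables, the plan item names and their AI instructions into a request for the foundational model. The Python sketch below illustrates that idea using the two instructions above. It is not Flowable's actual prompt format: the function, the variable names (applicantName, amount, description) and the prompt wording are hypothetical, and the real analysis receives more input than shown here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlanItem:
    name: str
    ai_instruction: str = ""


def build_activation_prompt(variables: dict, plan_items: list) -> str:
    """Combine case variables, plan item names and AI instructions into one prompt."""
    lines = ["Case variables:"]
    for key in sorted(variables):
        lines.append(f"- {key}: {variables[key]}")
    lines.append("Plan items that may be activated:")
    for item in plan_items:
        instruction = item.ai_instruction or "(no instruction)"
        lines.append(f"- {item.name}: {instruction}")
    lines.append("Which plan items should be activated? Reply with their names.")
    return "\n".join(lines)


stages = [
    PlanItem("Real estate", "Activate this stage when the loan applicant indicates "
             "that it wants to acquire any form of real estate."),
    PlanItem("Regular loan", "Activate this stage when the loan applicant indicates he or she "
             "needs a loan, but the loan isn't meant for buying real estate."),
]
prompt = build_activation_prompt(
    {"applicantName": "Jane Doe", "amount": 250000,
     "description": "We want to buy a small house near the city."},
    stages,
)
print(prompt)
```

The clearer each AI instruction is, the less the model has to guess from the plan item name alone.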

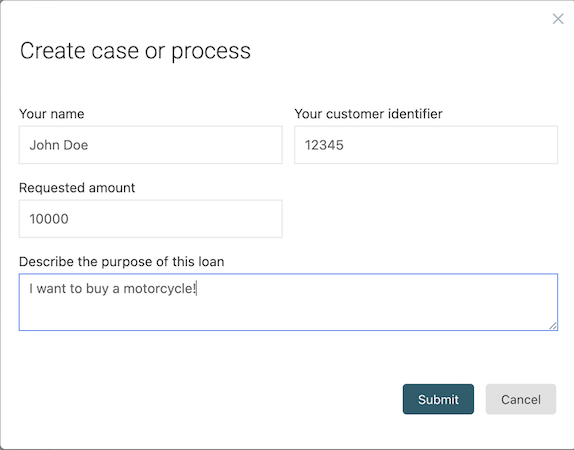

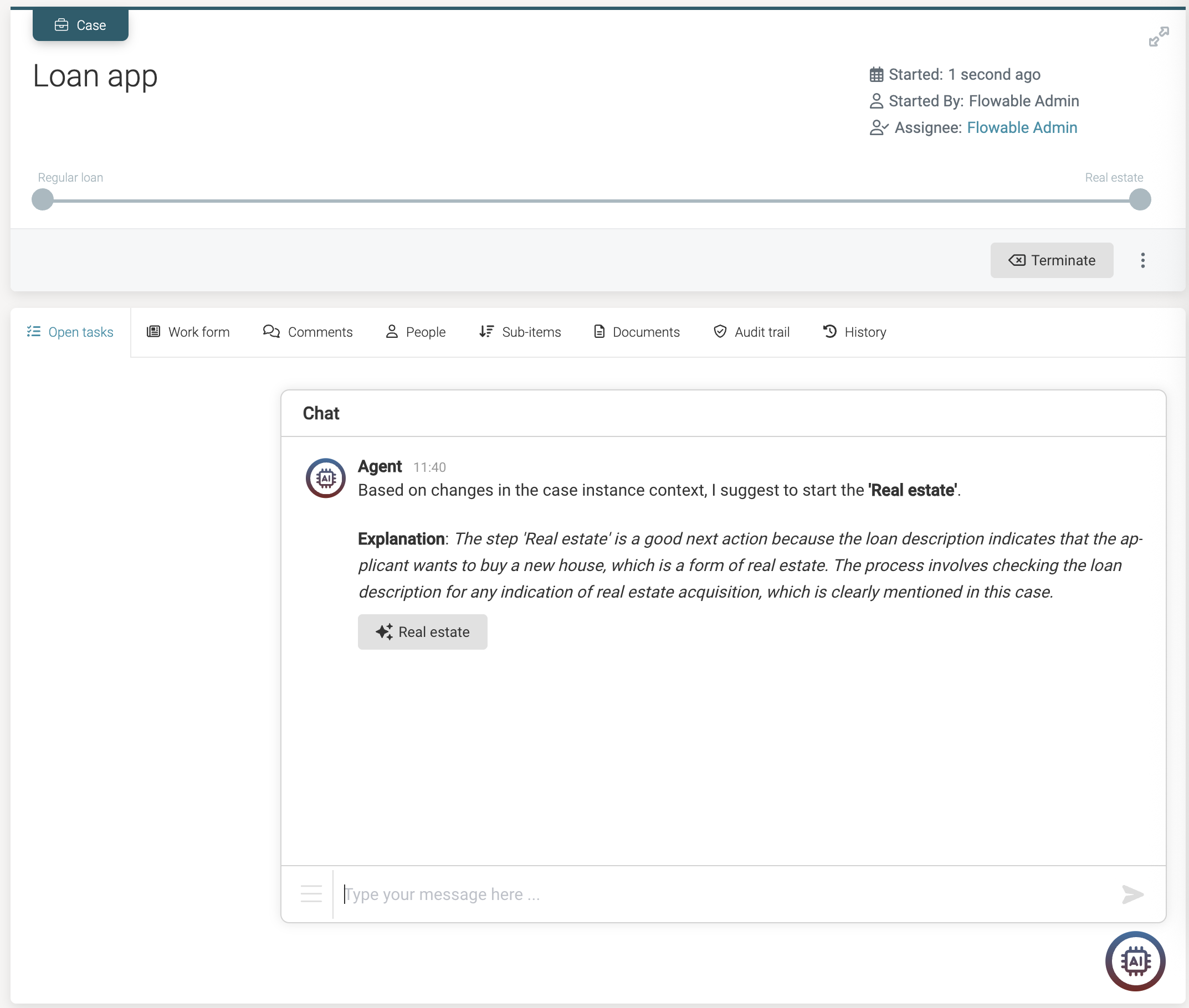

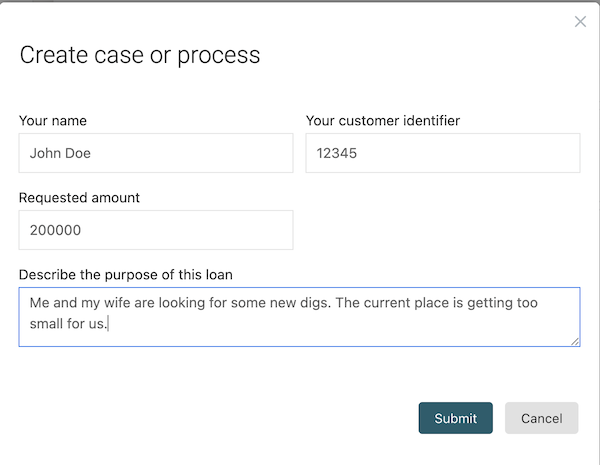

Let's see how this behaves at runtime. Publish the app and switch to Flowable Work. Start a new case instance for the model we've just built and fill in the start form fields:

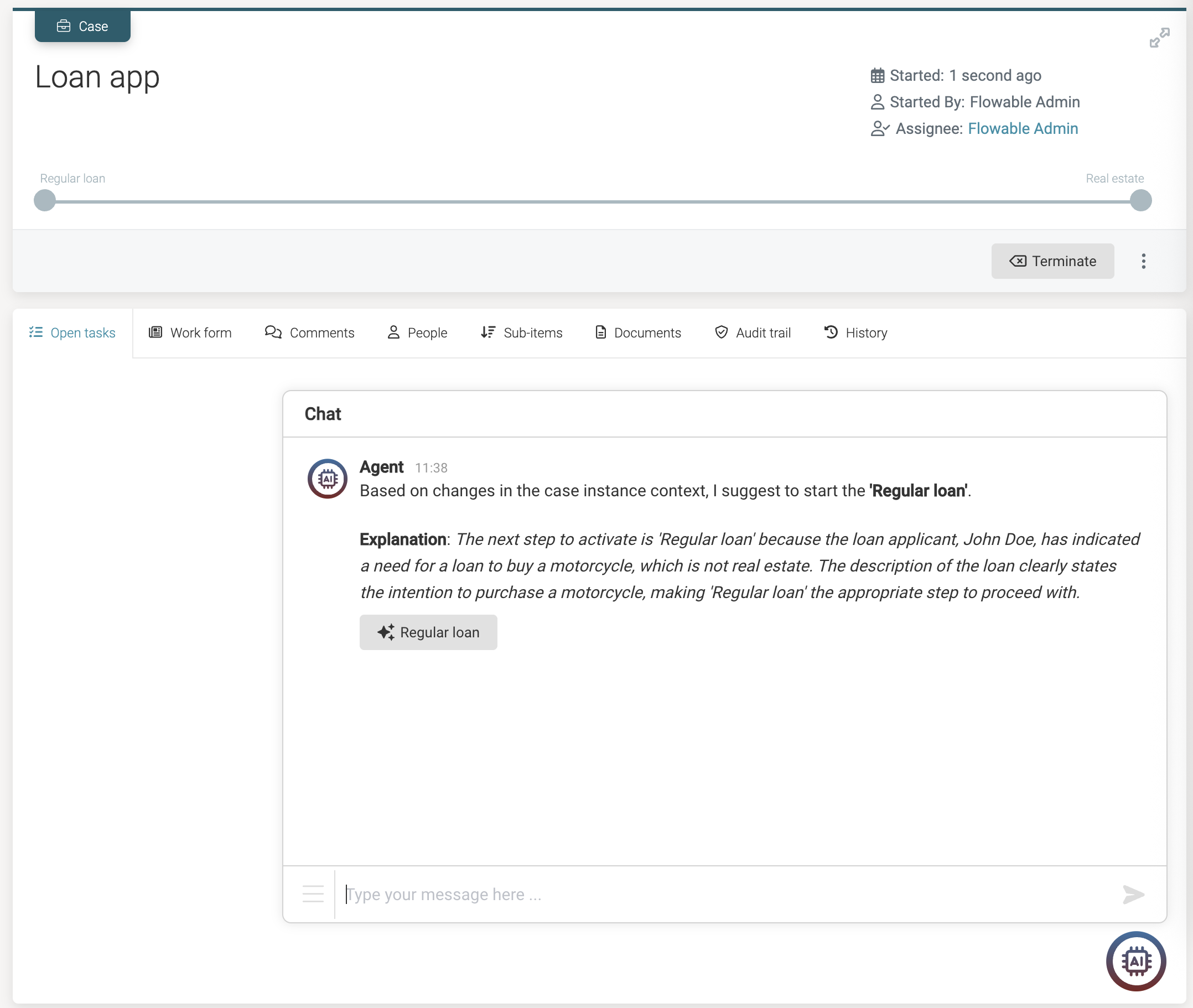

Because we've linked an orchestrator agent with chat capabilities (that's the Enable chat flag on the agent model) to the case instance, we can now see an AI button in the lower-right corner of the screen. Clicking it shows that the agent suggests starting the Regular loan stage.

If you don't see anything, reopen the chat window. The orchestrator agents work asynchronously in the background to avoid any interference with regular user actions in the user interface.

Clicking the button makes the stage active, and we can see the steps inside it (here, the user task) being activated, following the regular CMMN lifecycle.

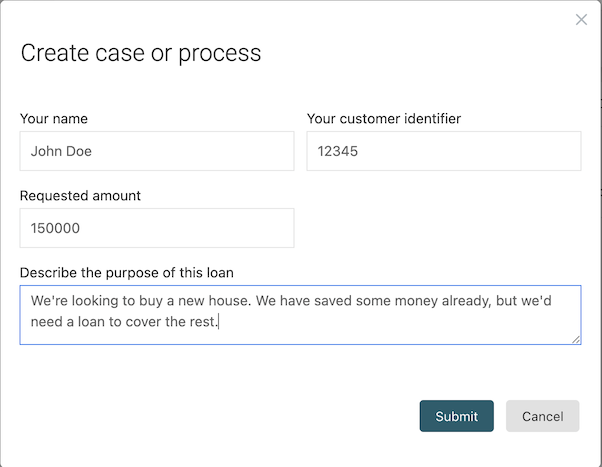

If we now start a new case instance, adding some details about buying a house:

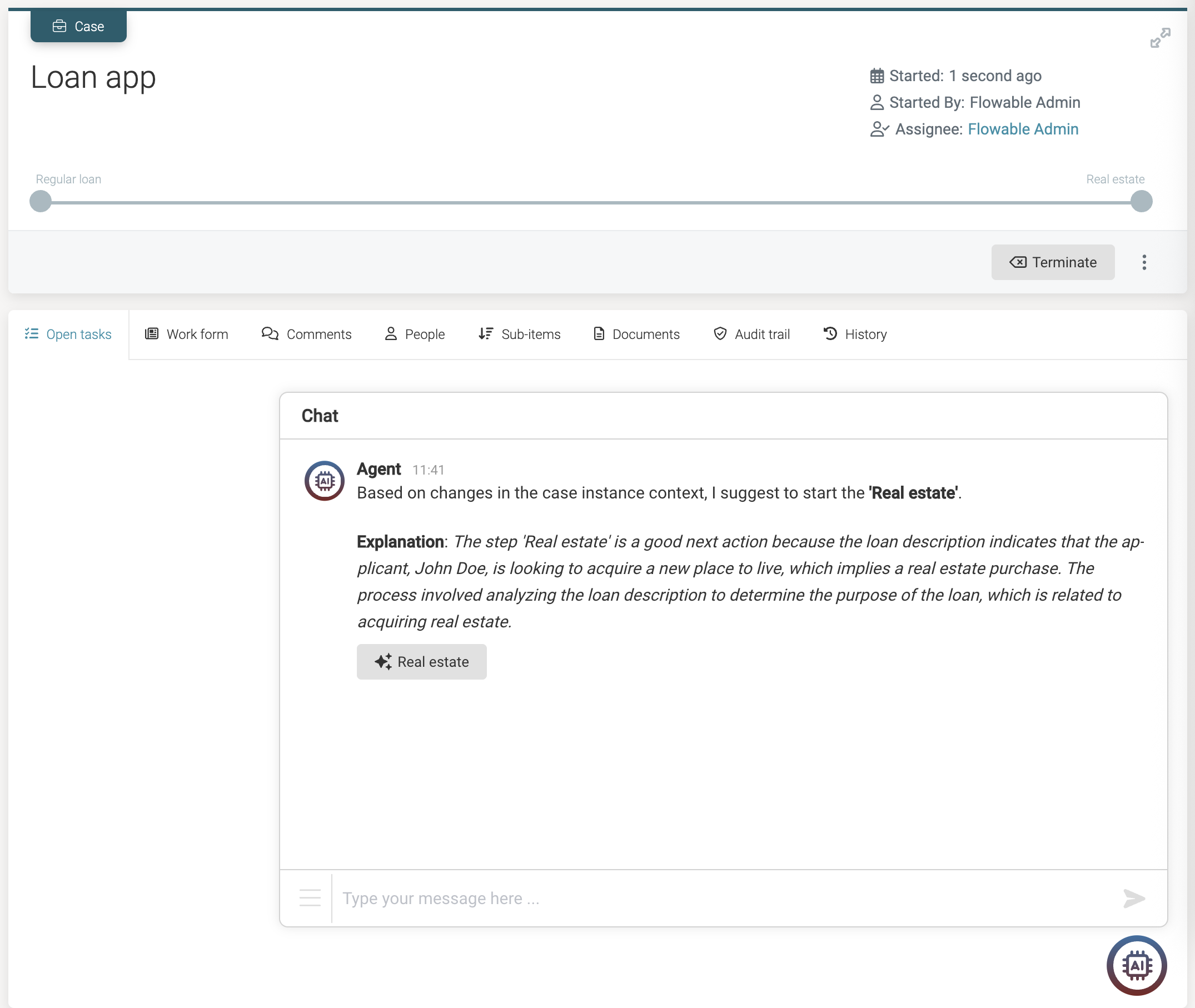

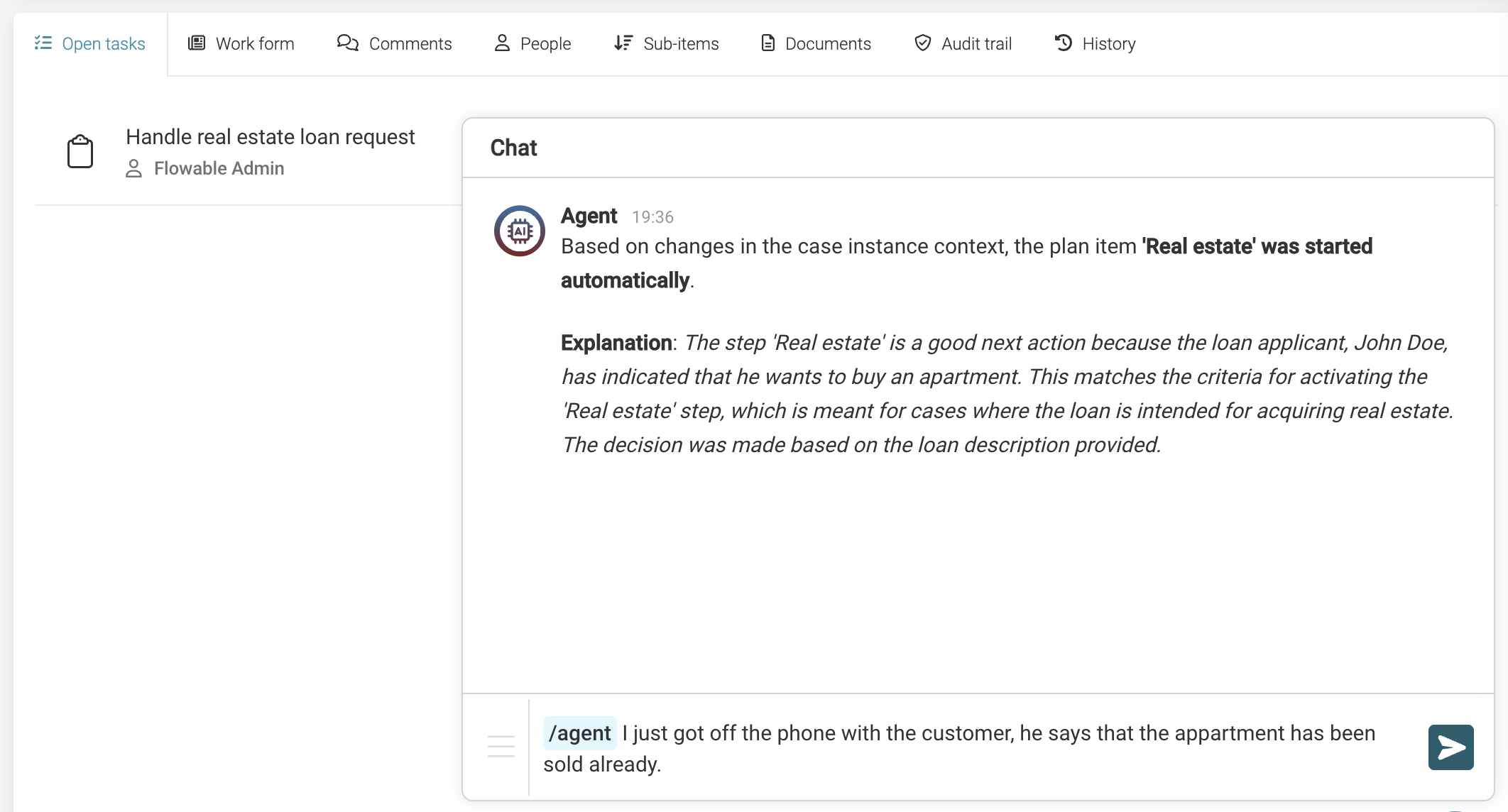

We can now see that the agent has indeed determined it's better to start the Real estate stage:

Why would I use AI for this?

When looking at this use case, you might wonder: does it make sense to use AI for this? Why not have a dropdown to select whether or not the loan request is about real estate?

And yes, that's a perfectly viable solution. In fact, it's what we would advise to do when building out this model if the only entry point is the start form.

However, in the real world, not everything is clean and perfect. What if the loan request comes in via email? Or through a banking app on the phone? What if the customer selected the right thing in the dropdown, but writes something else in the description?

For example, what if the customer filled in the description using some specific slang:

Interpreting natural language is exactly what these agentic systems are built for. Indeed, the agent has no problem understanding that this is about real estate:

Given all of the above, it quickly becomes apparent how adding a dash of agentic AI to case models can improve quality in many ways for various real-life use cases.

Getting an Overview

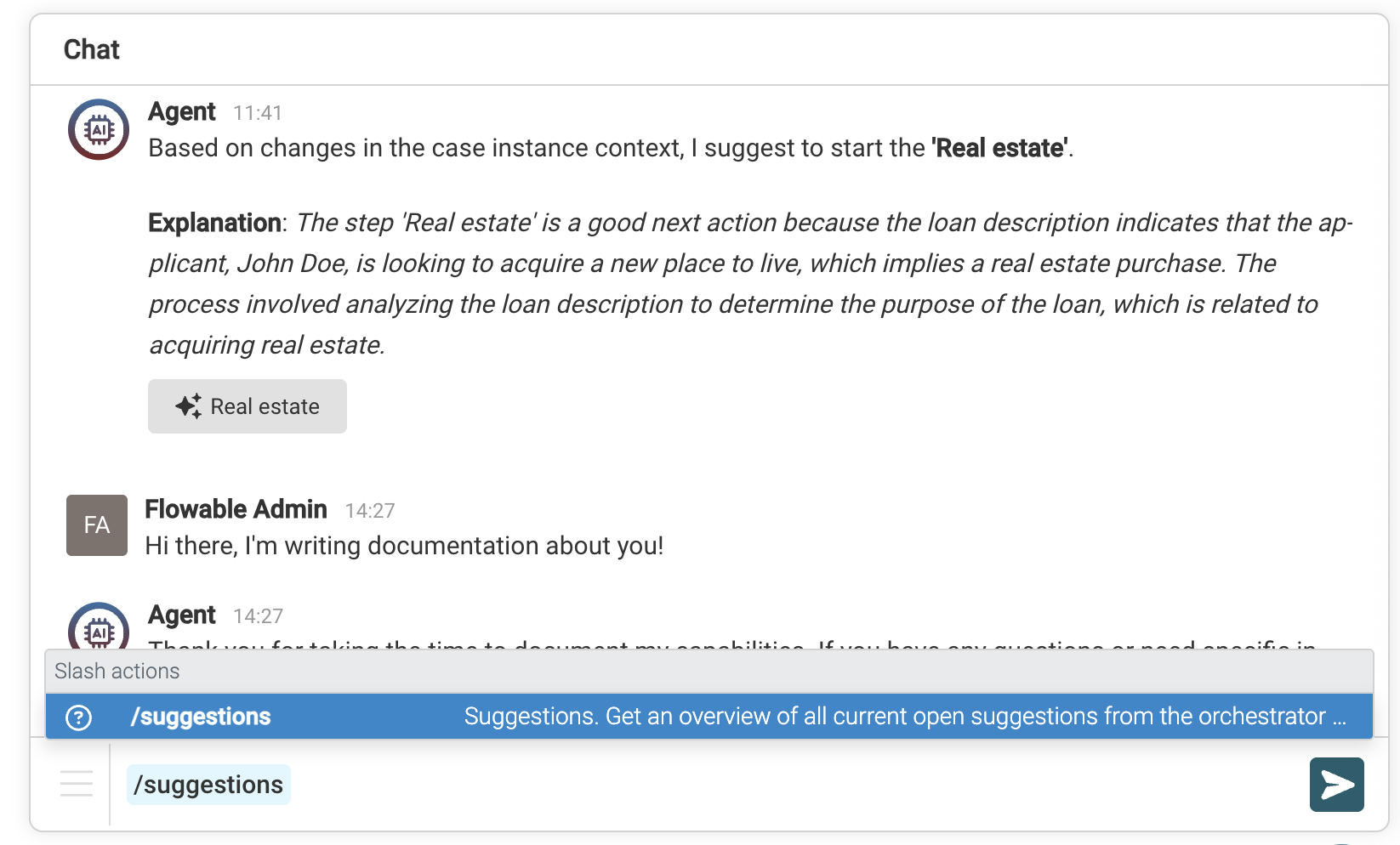

We've just covered an important first feature of the orchestrator agent, which is the ability to assist with suggestions that could drive the case forward. During the chat with the agent, you can always ask for an overview of all current suggestions by using a slash-action:

More specifically, type /suggestion and the agent will respond with an overview:

Automatic Mode

We've seen how the orchestrator agent can make suggestions. Let's now give it more autonomy by changing the Activation Type of both stages to Automatic:

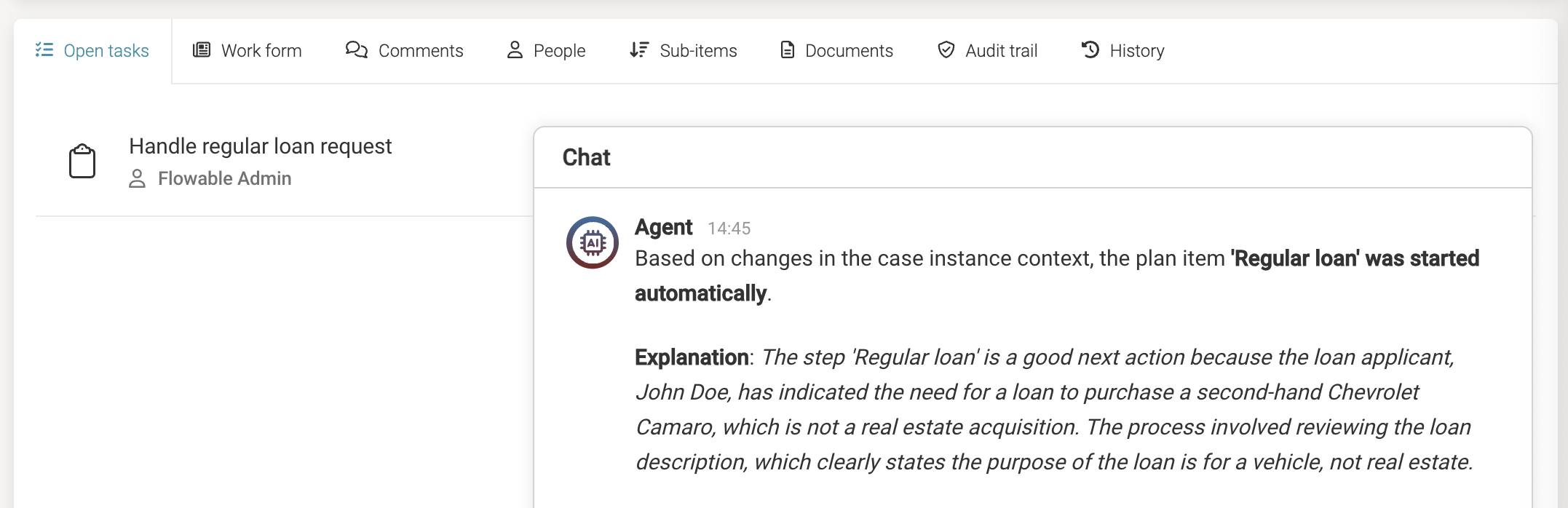

Publish the app and start a new case instance, using a new text or reusing one of the texts from above. You'll notice that a user task now appears in the task list straight away. This is because we allowed the orchestrator agent to start the stage automatically if it deems it applicable:

Suggestion or automatic? What should I pick?

Good question.

As is often the case, the answer is 'it depends'.

When configuring an agent to either automatically start a step or merely suggest it, the right choice depends on a number of factors:

- Nature of the Task: Consider how critical the task is. For low-profile or low-impact tasks, automatic execution might be perfectly fine and can streamline the flow. But for high-stakes or sensitive decisions, it’s often safer to keep a human in the loop through suggestion mode.

- Experience and Confidence with AI: The more comfortable and experienced your team or organization is with using AI in production workflows, the more you may choose to rely on automation. For newer teams, suggestion mode allows for validation and gradual trust-building in AI-assisted decisions.

- Risk of Errors: It's well known that large language models (LLMs) can make mistakes, and this should always be considered when enabling automatic behavior. That said, human decisions are not infallible either. The key is understanding and balancing the risk and potential consequences of errors, regardless of who or what makes them.

- Legal and Compliance Considerations: In some contexts, only a human may be allowed to make certain decisions, for legal, regulatory, or contractual reasons. Automatic execution would not be appropriate in such cases. In others, it may be acceptable if the action is reversible or doesn’t have critical impact.

- Impact and Accountability: Think about who is ultimately accountable. If a mistake could lead to legal exposure, financial loss, or harm to users or customers, suggestion mode is often the safer option. Conversely, if the action is minor, well-bounded, and low-risk, automation may make sense.

In short, there’s no one-size-fits-all answer. Use a combination of common sense, domain knowledge, and risk evaluation to guide your decision. A key strength of a platform like Flowable is that it doesn’t force a binary choice between full automation and full manual control. Instead, it provides a sliding scale of autonomy, allowing you to find the right balance for each specific use case.
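The difference between the two activation types boils down to what happens with a recommendation once the model has made it. The following Python sketch illustrates that per-item choice. It is a conceptual illustration under assumed names (AiActivatedItem, CaseState, apply_recommendations, accept_suggestion), not Flowable's engine logic, and unlike the tutorial it mixes one Suggestion item with one Automatic item to show that the setting is chosen per plan item.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class AiActivatedItem:
    name: str
    activation_type: str  # "Suggestion" or "Automatic", as in the AI Activation popup


@dataclass
class CaseState:
    active: set = field(default_factory=set)
    suggestions: set = field(default_factory=set)


def apply_recommendations(case: CaseState, items: dict, recommended: list) -> None:
    """Handle the plan item names recommended by the model, per activation type."""
    for name in recommended:
        item = items.get(name)
        if item is None or name in case.active:
            continue  # ignore unknown names and items that are already active
        if item.activation_type == "Automatic":
            case.active.add(name)  # started directly, no human decision needed
            case.suggestions.discard(name)
        else:
            case.suggestions.add(name)  # shown to the user, who decides


def accept_suggestion(case: CaseState, name: str) -> None:
    """What clicking a suggestion amounts to in this sketch."""
    if name in case.suggestions:
        case.suggestions.discard(name)
        case.active.add(name)


items = {
    "Real estate": AiActivatedItem("Real estate", "Suggestion"),
    "Regular loan": AiActivatedItem("Regular loan", "Automatic"),
}
case = CaseState()
apply_recommendations(case, items, ["Real estate"])
print(case)  # suggestion only, nothing active yet
accept_suggestion(case, "Real estate")
print(case)
```

In this sketch, accepting a suggestion has the same effect as an automatic activation; the only difference is who makes the decision.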

Technical Details

Of course, this demo is limited to a stage with a single user task being started. In practice, you could have multiple stages, tasks or processes started automatically, or have them suggested to the user.

When using AI Activation, keep in mind that it always runs at the end of any action that interacts with a case instance. Whether the interaction is small (e.g., changing a variable value) or large (e.g., starting and executing multiple processes from start to end), the AI activation is always planned once the case instance is deemed stable. At that point, the relevant data is gathered and the prompts are prepared, but the actual invocation of the underlying foundational model happens later, asynchronously and outside of the database transaction.
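The timing described above, preparing the request inside the transaction and calling the model afterwards, can be sketched as follows. This is a simplified, hypothetical Python illustration (ActivationScheduler and its methods are invented for this example, and the model is a plain callable), not Flowable's job infrastructure.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class ActivationJob:
    case_id: str
    prompt: str


class ActivationScheduler:
    """Hypothetical sketch: prepare in the transaction, invoke the model later."""

    def __init__(self) -> None:
        self._in_transaction: List[ActivationJob] = []  # prepared, not yet committed
        self._committed: deque = deque()  # visible to the asynchronous worker

    def on_case_stable(self, case_id: str, variables: dict) -> None:
        # Runs inside the transaction: only gather data and prepare the prompt.
        prompt = f"case={case_id} variables={sorted(variables.items())}"
        self._in_transaction.append(ActivationJob(case_id, prompt))

    def commit(self) -> None:
        # Prepared jobs become visible to the worker only after the commit.
        self._committed.extend(self._in_transaction)
        self._in_transaction.clear()

    def rollback(self) -> None:
        # In this sketch, a rolled-back transaction leaves no job behind.
        self._in_transaction.clear()

    def run_worker(self, call_model: Callable[[str], str]) -> List[str]:
        # Runs later, outside any transaction, where the slow model call happens.
        results = []
        while self._committed:
            job = self._committed.popleft()
            results.append(call_model(job.prompt))
        return results


scheduler = ActivationScheduler()
scheduler.on_case_stable("case-1", {"amount": 250000})
print(scheduler.run_worker(lambda prompt: "Regular loan"))  # nothing committed yet
scheduler.commit()
print(scheduler.run_worker(lambda prompt: "Regular loan"))
```

Keeping the model call out of the transaction means it does not hold database resources while waiting for a response; in this sketch, a rolled-back transaction also leaves no pending model call behind.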

Intent Detection

Intent detection is the mechanism by which the orchestrator agent identifies if something meaningful has occurred in the recent context of a case, something that should trigger a response or a next step. This is not the same as AI Activation, though the two are closely related.

Intent detection is reactive: the orchestrator agent analyzes new inputs (such as messages or documents) and determines whether they match a known intent. AI Activation, on the other hand, is about deciding what to do next. It’s proactive: given the current context, the orchestrator agent evaluates which steps could or should be activated. In simple terms: Intent detection answers “Did something happen that needs attention?” while AI activation answers “What action should we take next?”

Intents are modeled using CMMN event listeners, meaning they follow the same lifecycle and behavior rules as any other event listener in a CMMN case model. Each intent event listener has a name and a prompt, which helps the underlying foundational model understand what kind of user input or data change it should watch for.

Currently, intent detection is triggered in two key situations:

- When a new chat message arrives, which allows for real-time analysis of user or system conversations to detect actionable intents.

- When a document is uploaded to the case instance, enabling the system to extract meaning or actions from structured or unstructured content.

Additional event sources will be supported in future releases as the orchestration capabilities evolve.
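The reactive side can be sketched as follows: when one of the two trigger situations occurs, the new input and the modeled intents (name plus optional instruction) are sent to the model, and only an answer that matches a modeled intent is accepted. This Python sketch is hypothetical: detect_intent, the source labels and the prompt text are assumptions, and classify stands in for the foundational model call.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass(frozen=True)
class Intent:
    name: str
    instruction: str = ""


# The two situations that currently trigger intent detection, per the text above.
TRIGGER_SOURCES = {"chat_message", "document_uploaded"}


def detect_intent(
    source: str,
    content: str,
    intents: List[Intent],
    classify: Callable[[str], str],
) -> Optional[Intent]:
    """Ask the model which modeled intent, if any, the new input matches."""
    if source not in TRIGGER_SOURCES:
        return None  # other sources do not trigger detection in this sketch
    options = "\n".join(f"- {i.name}: {i.instruction or '(no instruction)'}" for i in intents)
    prompt = (f"New input ({source}):\n{content}\n\n"
              f"Known intents:\n{options}\n"
              "Reply with exactly one intent name, or NONE.")
    answer = classify(prompt).strip()
    # Accept only answers that match a modeled intent; anything else is ignored.
    return next((i for i in intents if i.name == answer), None)


intents = [Intent("Loan applicant has cancelled the request.")]
match = detect_intent(
    "chat_message",
    "He says that the apartment has already been sold.",
    intents,
    classify=lambda prompt: "Loan applicant has cancelled the request.",
)
print(match)
```

Restricting the accepted answers to the modeled intents keeps the model's free-form output from triggering anything that is not part of the case model.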

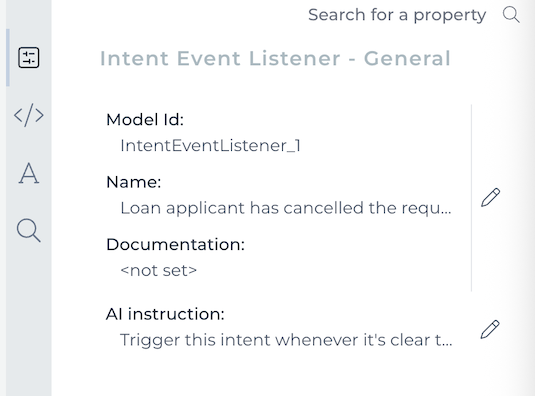

Let's add a simple intent to our case model: the loan applicant has decided to cancel the loan request, for example over email or a phone call. To add it:

- Select the Intent event listener from the palette and drop it on the plan model.

- Give it a clear name, such as Loan applicant has cancelled the request.

- Connect the event listener with an exit sentry to both stages. Whatever state they're in, we want to stop anything that's going on inside them. Here that's just a user task, but it could, for example, be multiple processes running at that time.

- Connect the event listener with a new user task to handle closing the request. In reality, this would probably be done with one or more process tasks.

The case model should now look something like this:

The intent event listener has an AI instruction field that can contain extra details (here, for example, something like Trigger this intent whenever it's clear the loan applicant has cancelled.). However, in this case the name alone is already quite clear.
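At runtime, the effect of this intent follows the sentries we just drew. The Python sketch below models that in a deliberately simplified way (plain strings as states and a hypothetical Close request task name); it is not the CMMN engine, only an illustration of the wiring: both stages are exited, and the closing task is started.

```python
from dataclasses import dataclass, field

CANCEL_INTENT = "Loan applicant has cancelled the request."
TERMINAL_STATES = {"completed", "terminated"}


@dataclass
class LoanCase:
    """Simplified, hypothetical runtime view of the case plan."""
    stage_states: dict = field(default_factory=lambda: {
        "Real estate": "available",
        "Regular loan": "active",
    })
    active_tasks: list = field(default_factory=list)


def on_intent(case: LoanCase, intent_name: str) -> list:
    """Apply the sentries wired to the intent event listener and report the changes."""
    changes = []
    if intent_name != CANCEL_INTENT:
        return changes
    # Exit sentries: stop both stages, whatever state they are in.
    for stage, state in case.stage_states.items():
        if state not in TERMINAL_STATES:
            case.stage_states[stage] = "terminated"
            changes.append(f"terminated: {stage}")
    # Entry sentry: start the (hypothetical) task that handles closing the request.
    case.active_tasks.append("Close request")
    changes.append("activated: Close request")
    return changes


case = LoanCase()
print(on_intent(case, CANCEL_INTENT))
```

The returned list plays the role of the overview of terminated and activated steps that the agent reports after the intent fires.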

Should AI handle this?

This kind of behavior could certainly be modeled with, for example, a manually activated user event listener. However, consider a customer interacting through a banking app's chat interface. The customer could type anything: questions, complaints or off-topic remarks. Attempting to plan for every possible input with predefined UI elements or user events quickly becomes unmanageable. This is precisely where the intent detection pattern begins to shine. Instead of hardwiring every possibility, we delegate the interpretation to an AI model, allowing it to infer what is intended from natural, unstructured input.

Adopting this perspective shifts the modeling paradigm significantly. Rather than only modeling deterministic sequences of tasks, we now start thinking in terms of possible situations and how to handle them reactively. And thanks to the flexibility of CMMN, these potential situations can be elegantly expressed as event listeners, triggered when the right intent surfaces, without overcomplicating the overall model.

Publish the app and return to Work. Start a new case instance with some data, like before. Open up the chat again, and now use the /agent slash-action to interact with the agent. Don't worry yet about the difference between regular chat and this direct agent chat with the slash action, we'll cover that in detail in the next section.

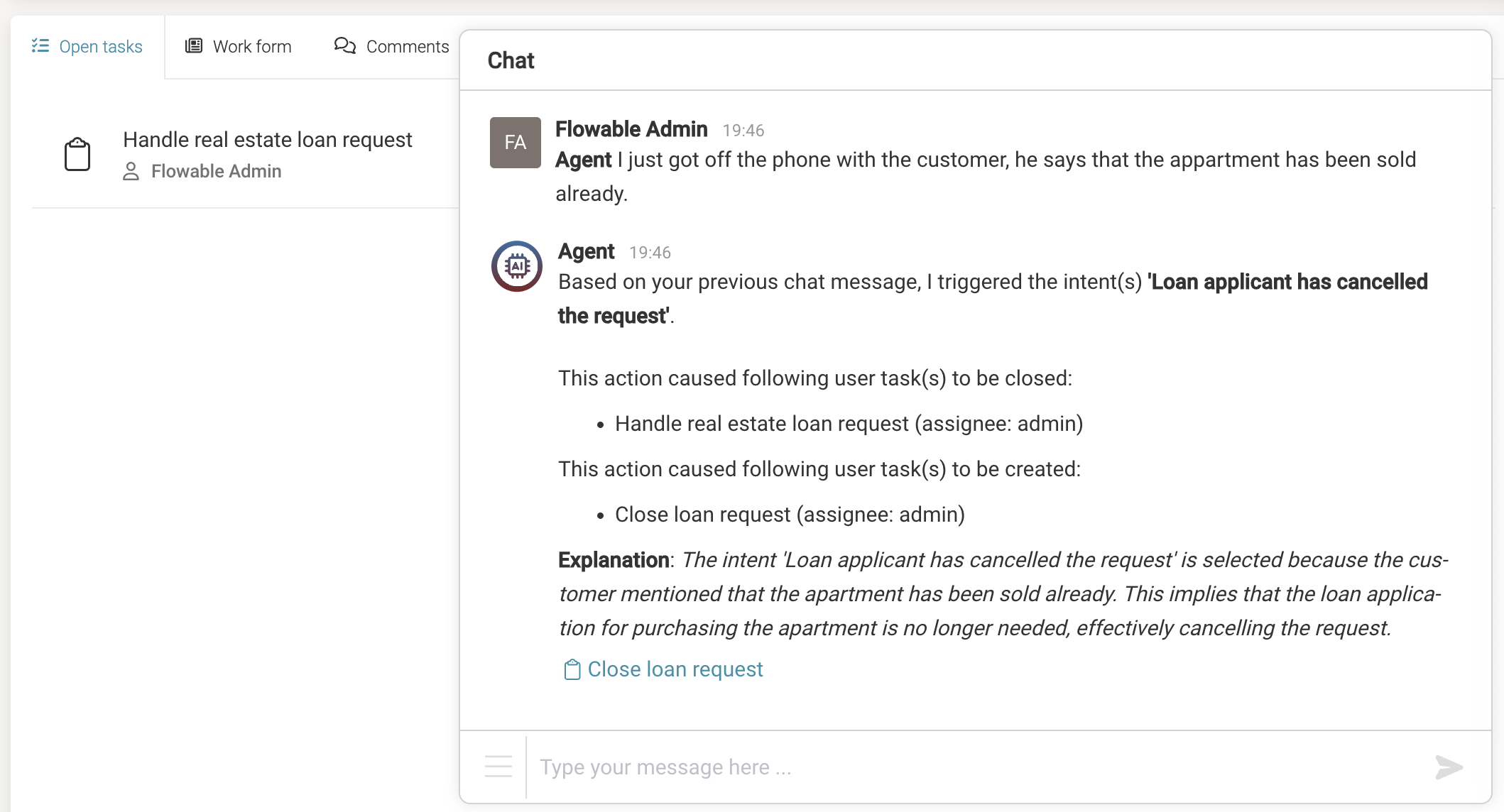

Add a message such as /agent I just got off the phone with the customer, he says that the apartment has already been sold.

Note that we're not explicitly saying the loan should be cancelled, but the message definitely implies it. The agent's natural language capabilities handle this without any problem. Additionally, we get an overview of the consequences of the intent, listing the steps that were terminated and the new ones that were activated.

Chat - Private & Public

With the rise of AI services, chat is quickly becoming a natural and powerful way to interact with AI-enabled systems. Rather than requiring users to navigate complex user interfaces, chat-based interaction allows users to simply ask questions or make requests in their own words. The system can provide direct answers or even render appropriate UI elements to assist the task at hand.

When building an orchestrator agent, enabling chat functionality is straightforward: simply check the “Enable Chat” option in the agent configuration. This allows the agent to respond conversationally within the context of the case instance.

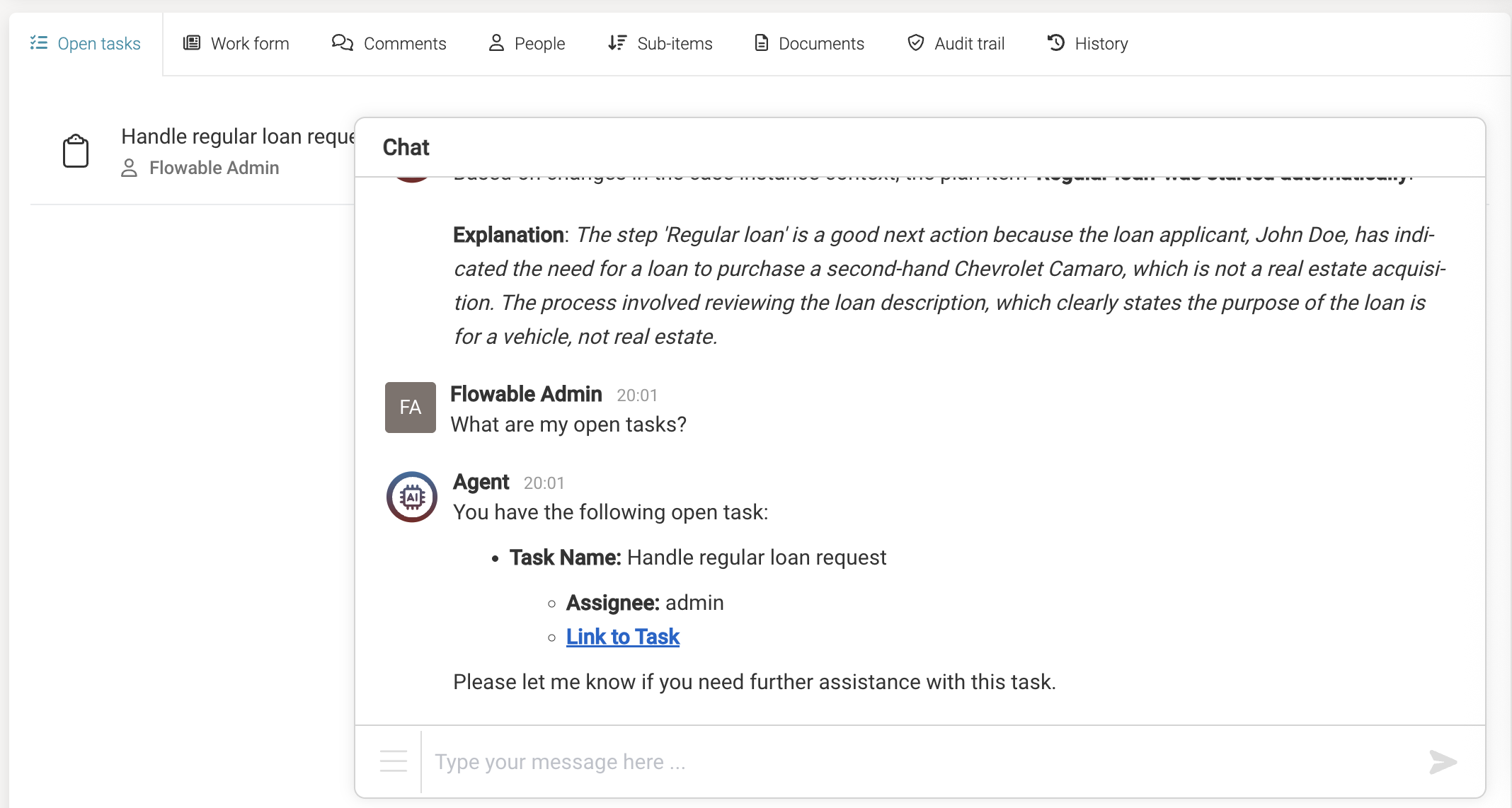

We have come across the use of the /agent slash command in chat before. It's important to understand the distinction between regular chat and this slash command:

- Regular chat is a private interaction between the user and the agent. It’s ideal for gathering contextual information, validating assumptions, or helping the user understand the current situation. No side effects are applied to the case itself. These messages are not visible to other users and do not trigger any agent logic beyond the conversational exchange.

- In contrast, using the /agent slash command puts the interaction in what we might call “turbo mode.” When this command is used, the system performs intent detection and AI evaluation. If applicable, this can lead to activating CMMN event listeners or even triggering tasks that impact the shared case instance. These changes are visible to all users of the case, which aligns with the nature of orchestration: any meaningful change should be shared and understood across all case participants.
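The three chat behaviors seen so far (regular chat, /agent and /suggestion) can be summarized as a small router. This Python sketch is purely illustrative: route_message, ChatResult and the callbacks are hypothetical names, and the callbacks stand in for the agent's chat operation, intent detection and the suggestion overview.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass(frozen=True)
class ChatResult:
    reply: str
    shared: bool  # True when the message may change the shared case instance


def route_message(
    text: str,
    private_chat: Callable[[str], str],
    detect_and_apply_intent: Callable[[str], Optional[str]],
    list_suggestions: Callable[[], List[str]],
) -> ChatResult:
    """Hypothetical router for regular chat, /agent and /suggestion."""
    command, _, rest = text.strip().partition(" ")
    if command == "/suggestion":
        # Read-only overview of the current suggestions.
        names = list_suggestions()
        return ChatResult("Current suggestions: " + (", ".join(names) or "none"), shared=False)
    if command == "/agent":
        # "Turbo mode": intent detection may trigger event listeners on the shared case.
        intent = detect_and_apply_intent(rest.strip())
        reply = f"Intent detected: {intent}" if intent else "No intent detected."
        return ChatResult(reply, shared=True)
    # Regular chat: private exchange with no side effects on the case.
    return ChatResult(private_chat(text.strip()), shared=False)


result = route_message(
    "/agent I just got off the phone with the customer, he says that the apartment "
    "has already been sold.",
    private_chat=lambda msg: "(private answer)",
    detect_and_apply_intent=lambda msg: "Loan applicant has cancelled the request.",
    list_suggestions=lambda: [],
)
print(result)
```

Only the /agent branch is allowed to touch the shared case in this sketch, which matches the split between private and public interaction described above.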

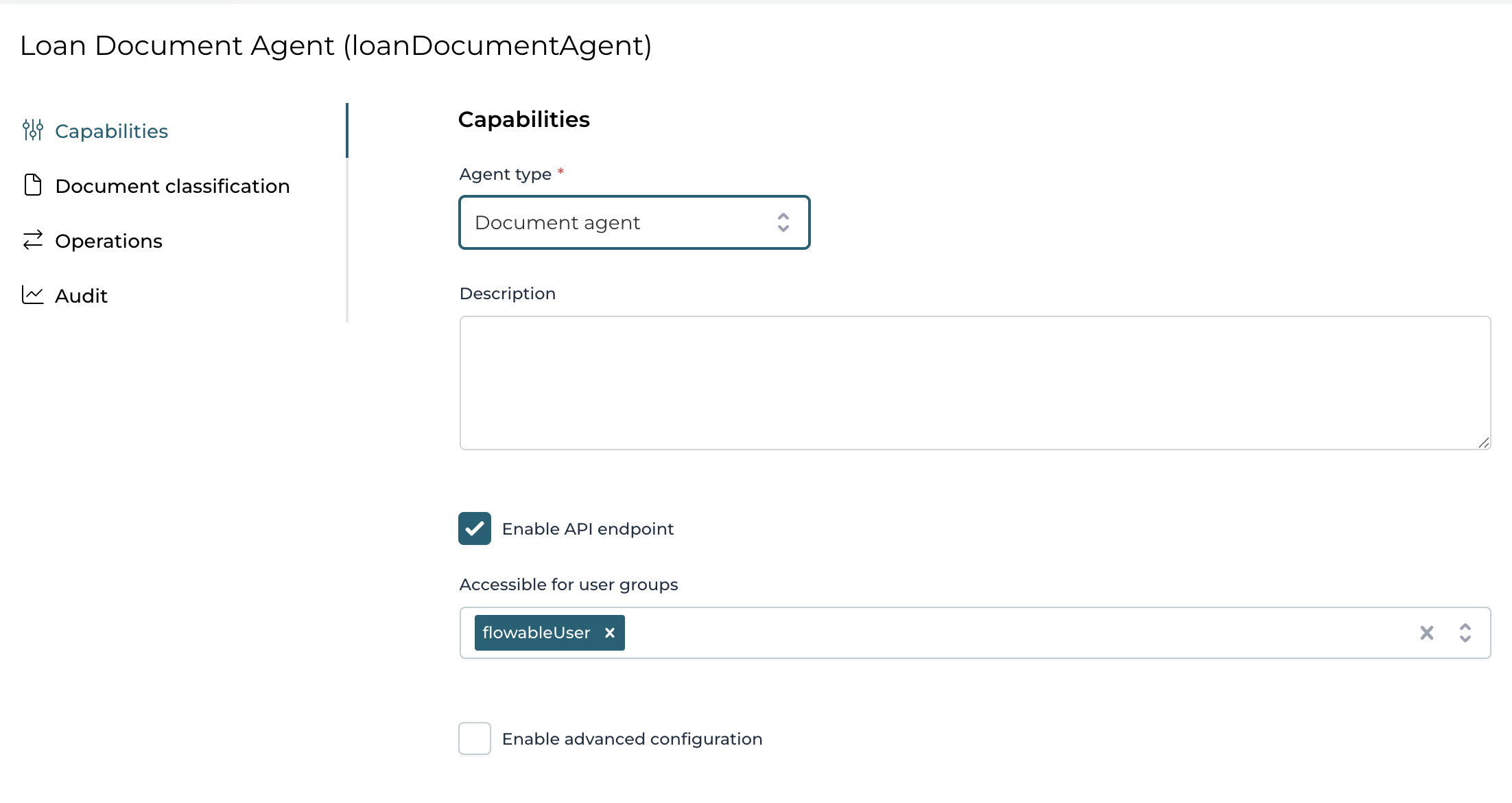

Document Agent

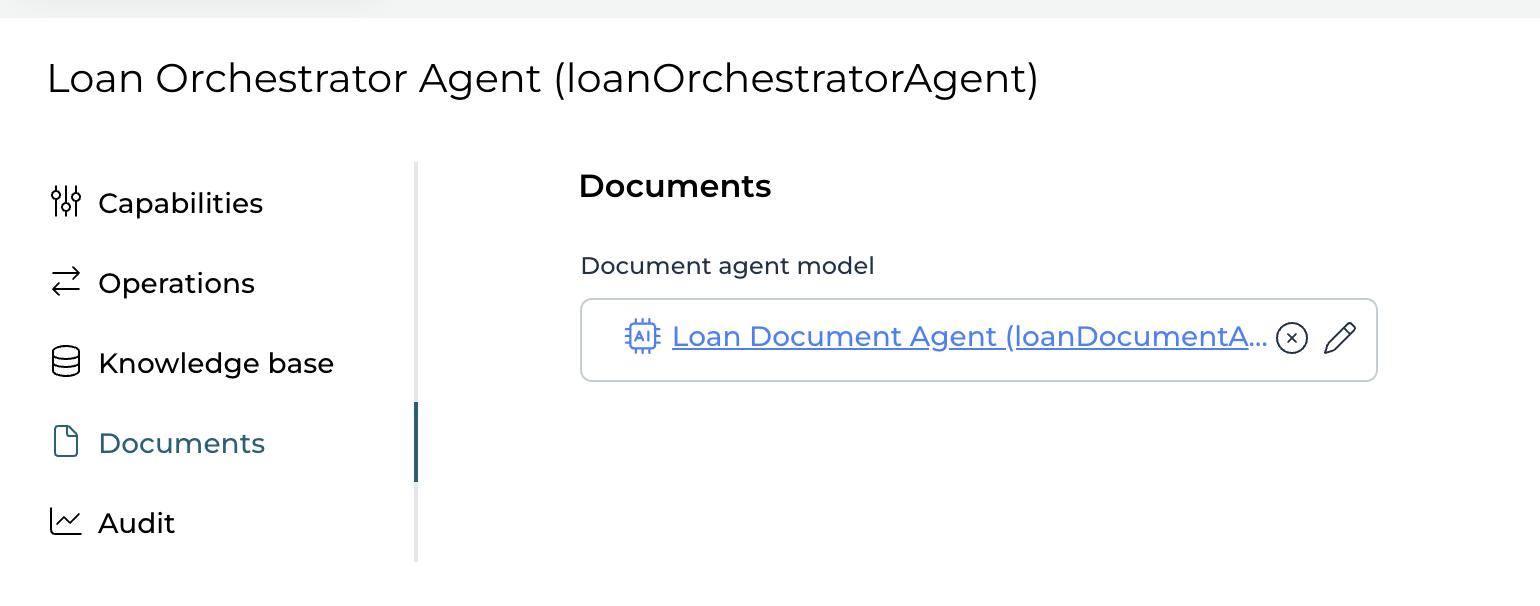

A powerful capability of many AI models is working with unstructured data, i.e. content that doesn't follow a predefined structure, such as free text or scanned documents. Let's have a look at what this means by adding a document agent that works under the orchestrator agent. To do so:

- Go to the agent model.

- Click on the Documents tab.

- Click on the model reference component, which opens a popup to create a new document agent.

- Give it a name like Loan Document Agent.

- Click on Create, then click on the new link to go to the document agent.

This opens the agent model editor again, but now for the new agent.

- In the Agent type dropdown, select Document agent. The tabs on the left-hand side now change.

- Similar to the orchestrator agent, there is a default operation, which will be used for data extraction.

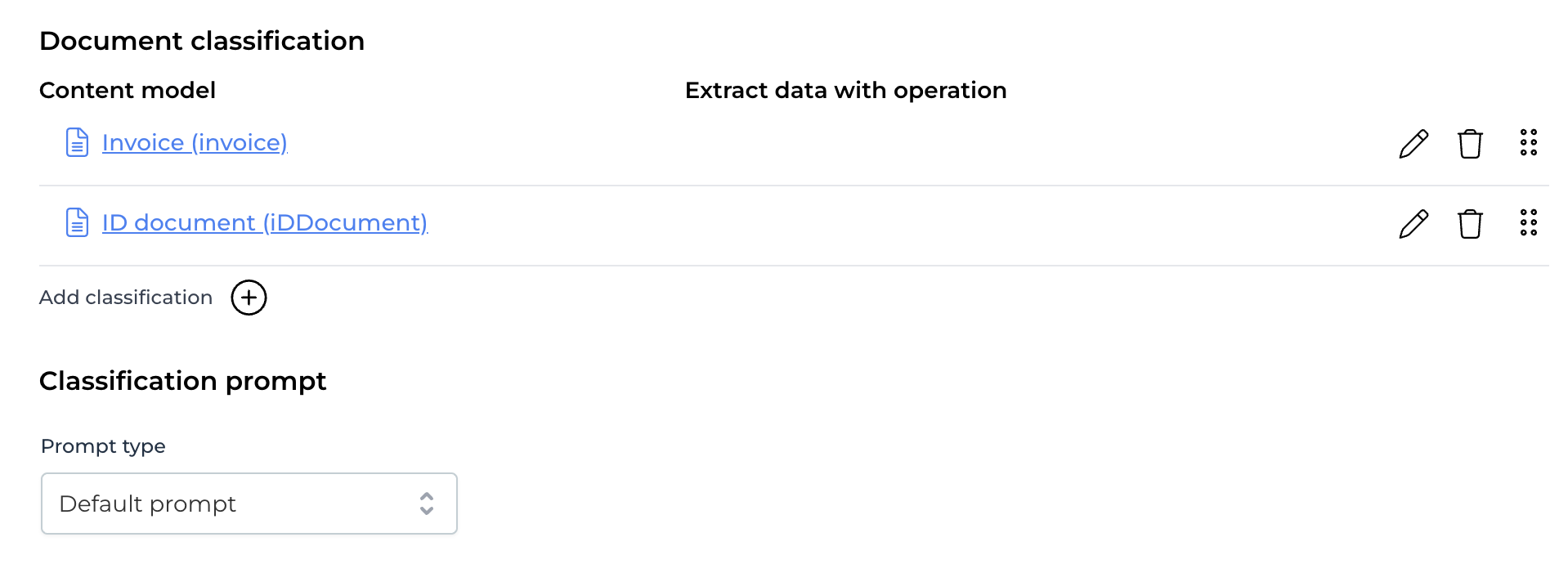

What we'd like now is the ability to detect documents such as invoices, identification documents, etc. when they're uploaded to the case instance. To do this, we need to configure the Document classification.

Each type of content we want to detect automatically needs a content model. Create two content models in the usual way:

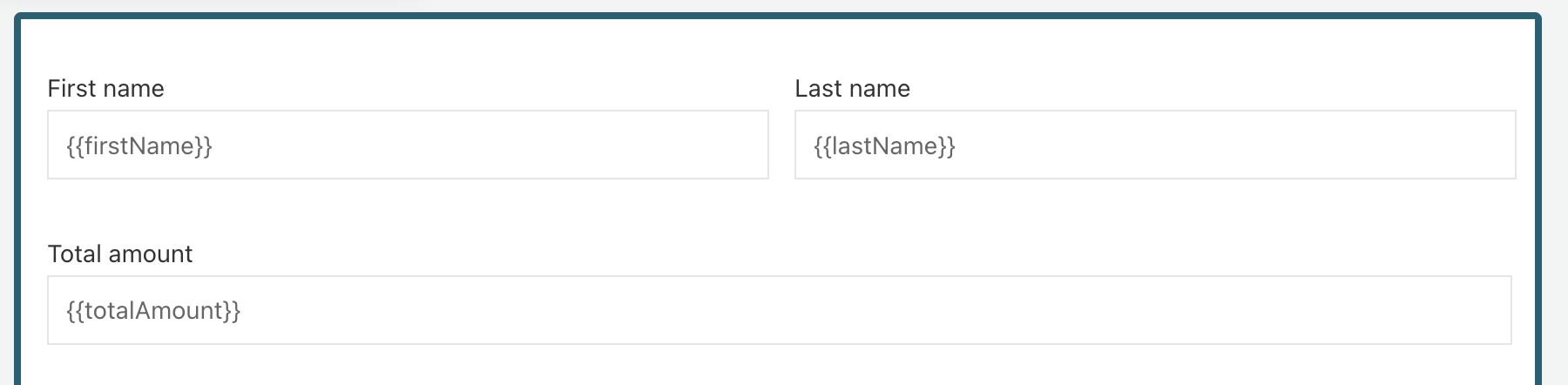

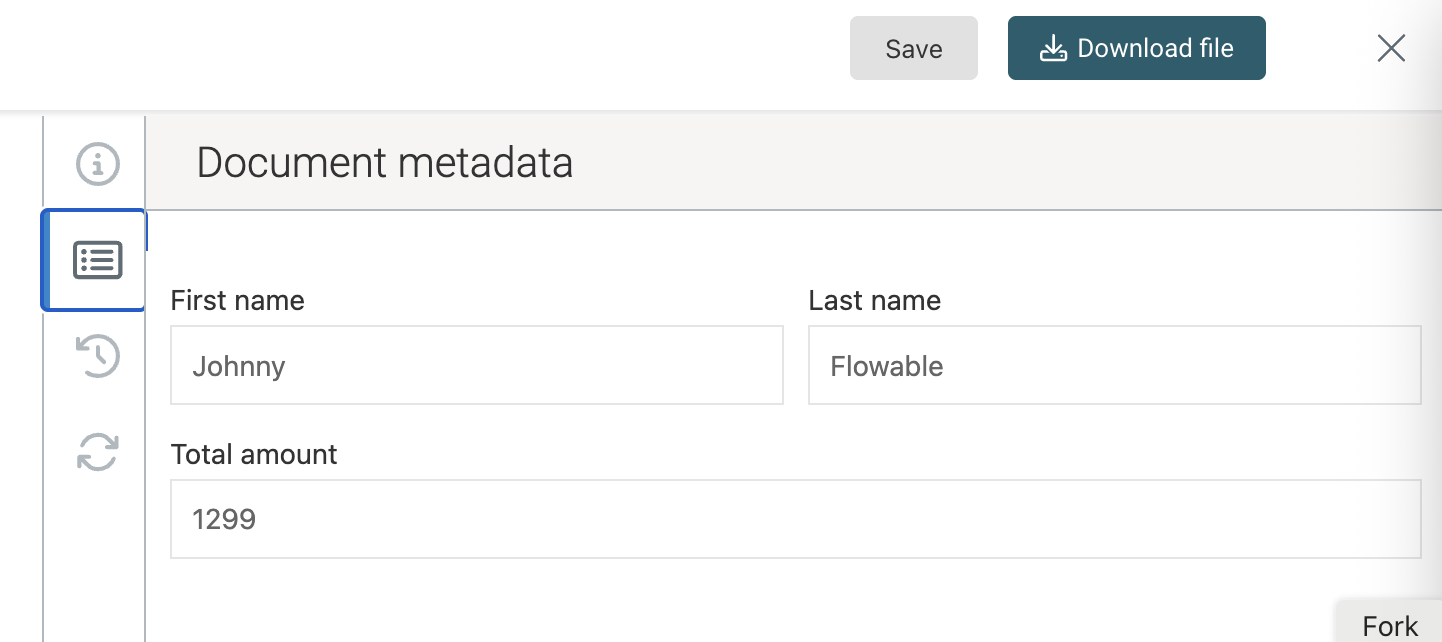

We could simply let the data be extracted from the content, but that wouldn't be very helpful in orchestration contexts: we need structure. We get it by going into the content model and adding a create form. The form is used as the structure for the data extracted from the document. Let's keep it simple and add a few fields:

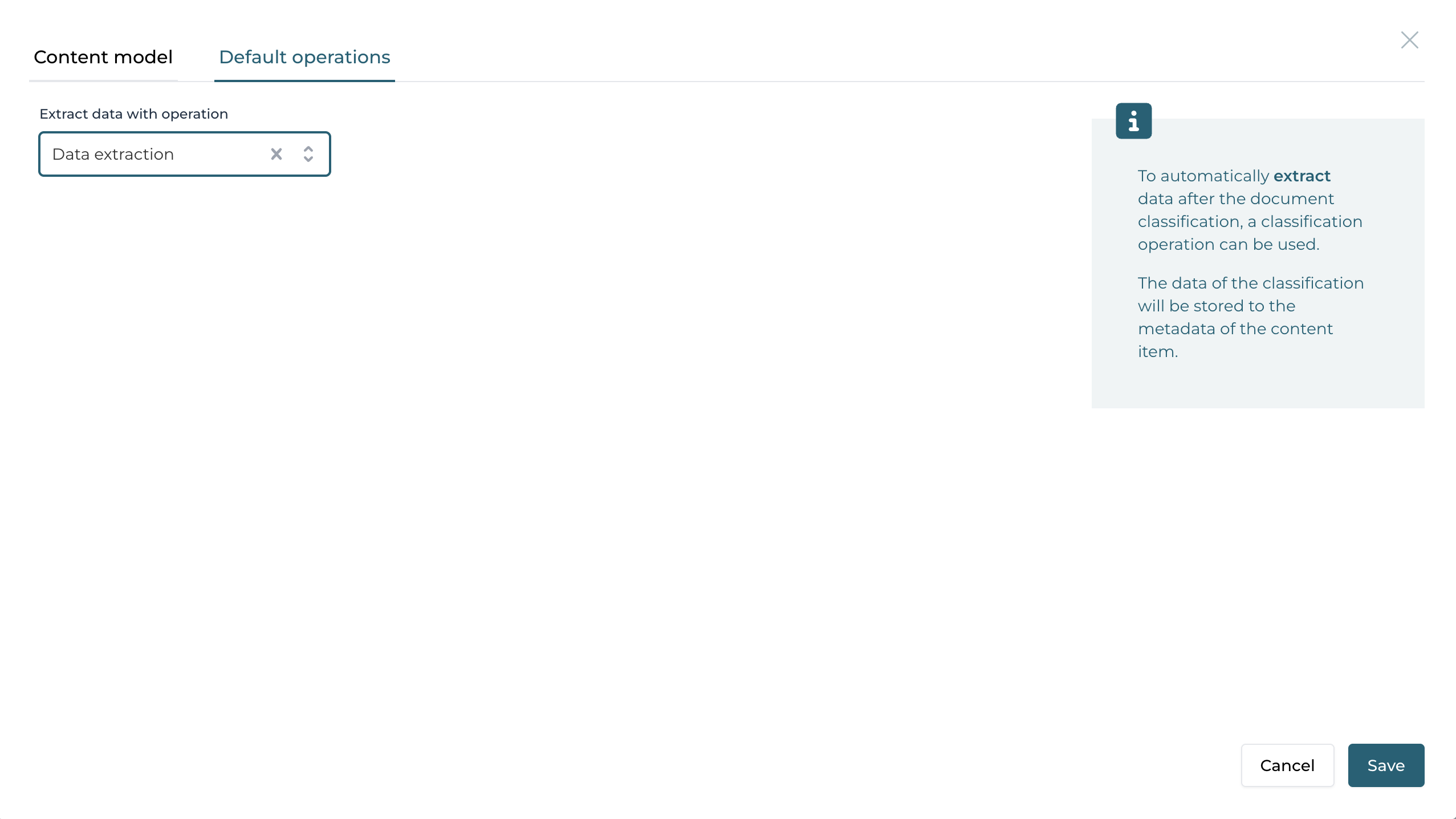
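To show why a form helps, here is a hypothetical Python sketch of how form fields could be turned into a structured extraction request and how the model's JSON answer could be checked against them. The field names and the JSON-Schema-style output are assumptions for illustration; in Flowable, the data extraction operation uses the form for this purpose, and this sketch does not reproduce its internals.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class FormField:
    key: str
    type: str  # "string" or "number" in this sketch


# Hypothetical fields for the invoice content model's create form.
INVOICE_FORM: List[FormField] = [
    FormField("invoiceNumber", "string"),
    FormField("supplier", "string"),
    FormField("totalAmount", "number"),
]


def form_to_schema(fields: List[FormField]) -> dict:
    """Describe the form as a JSON-Schema-style object the model is asked to fill."""
    return {
        "type": "object",
        "properties": {f.key: {"type": f.type} for f in fields},
        "required": [f.key for f in fields],
    }


def _matches(value: object, expected: str) -> bool:
    if expected == "number":
        return isinstance(value, (int, float)) and not isinstance(value, bool)
    return isinstance(value, str)


def parse_extraction(raw_json: str, fields: List[FormField]) -> dict:
    """Keep only the form's fields; missing or wrongly typed values become None."""
    data = json.loads(raw_json)
    return {f.key: data.get(f.key) if _matches(data.get(f.key), f.type) else None
            for f in fields}


print(json.dumps(form_to_schema(INVOICE_FORM), indent=2))
answer = '{"invoiceNumber": "INV-001", "supplier": "ACME", "totalAmount": "n/a", "notes": "x"}'
print(parse_extraction(answer, INVOICE_FORM))
```

Because every extracted document ends up with the same known fields, later steps in the case can rely on them, which is what makes the result useful for orchestration.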

In the Document classification, make sure that Extract data with operation is set. If not, go to the operation and link it to the default data extraction operation:

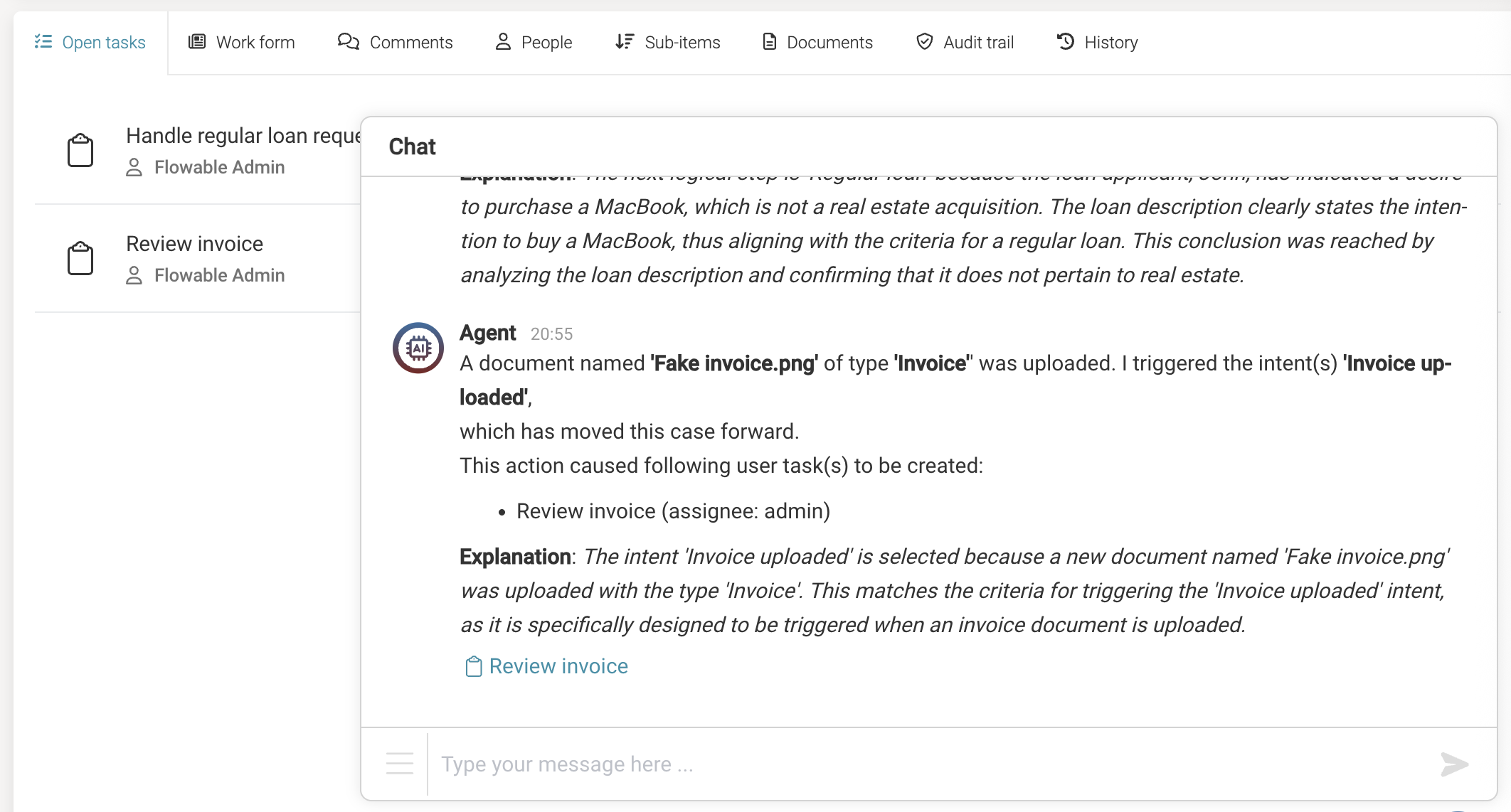

Let's tie it together and go back to the case model. There, add a new intent event listener with name Invoice uploaded within the 'Regular loan' stage. What we're saying here is that uploading an invoice is needed when handling a regular loan. Since normal CMMN applies here, this intent event listener will only be used when we're effectively in this stage. The case model should look like this:

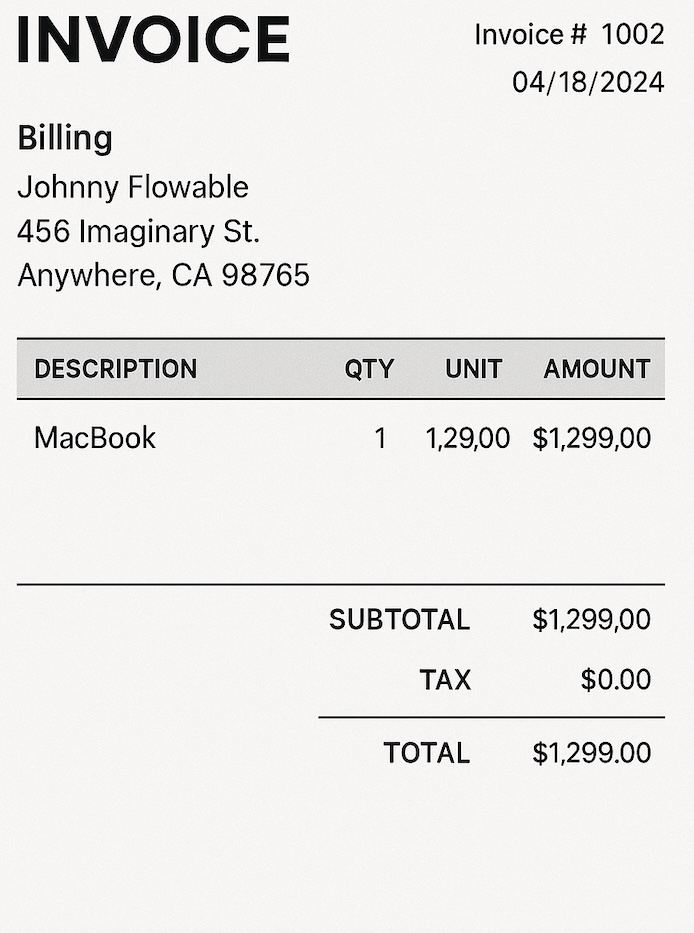
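The scoping rule mentioned above, that the Invoice uploaded intent only listens while the Regular loan stage is active, can be illustrated with a small Python sketch. It is a simplified, hypothetical model (IntentListener and listening_intents are invented names) that ignores other CMMN details such as entry criteria.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class IntentListener:
    name: str
    parent_stage: Optional[str]  # None: placed directly on the case plan model


def listening_intents(listeners: Iterable[IntentListener], active_stages: set) -> List[str]:
    """Return the intents that can currently fire (simplified CMMN scoping)."""
    return [
        listener.name
        for listener in listeners
        if listener.parent_stage is None or listener.parent_stage in active_stages
    ]


LISTENERS = [
    IntentListener("Loan applicant has cancelled the request.", None),
    IntentListener("Invoice uploaded", "Regular loan"),
]

print(listening_intents(LISTENERS, {"Real estate"}))
print(listening_intents(LISTENERS, {"Regular loan"}))
```

Only the listening intents would need to be offered to the model during intent detection, so placing an intent inside a stage also narrows what the model has to choose from.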

All of this sounds abstract, so let's see what it does at runtime. Publish the app, return to Work and start a case instance. Go to the documents tab, and upload an invoice, for example, the following fake invoice (also AI generated):

You can see that the data extraction, based on the form, has been executed in the background (click on the document and then the metadata tab):

In the chat, we can also see that the intent was triggered by the incoming document:

Audit - Agent Exchanges

By default, every agent invocation is audited automatically. This ensures full traceability of how, when, and why an agent was triggered. This behavior can be adjusted in the agent model’s Audit tab if needed.

All input and output exchanged with the agent, including prompts, responses, and metadata, are stored as agent exchanges. These can be inspected via Flowable Control:

- Navigate to the Agent section.

- Locate the specific agent instance of interest.

- Open the Exchanges tab to review the complete history of interactions with the agent.

Wrapping up

In this guide, we explored the fundamentals of building and using an orchestrator agent within a case model. You’ve seen how this special agent type can drive intelligent automation by:

- Reacting to evolving context in long-running case instances.

- Detecting intents through natural language input or uploaded documents.

- Triggering AI-activated steps either automatically or as suggestions, depending on the use case.

- Coordinating with other agents, such as document or chat agents, to enrich the orchestration.

- Maintaining full control through enterprise-grade features like versioning, auditing, permissions, and observability.

You’ve also learned how the orchestration engine handles AI calls asynchronously and efficiently, without blocking system resources, while preserving traceability of every agent invocation.

This is of course just the beginning. For example, we could easily add a few utility agents to the mix to validate things for us, generate emails or any other reply to the customer, etc. The orchestrator agent opens up a powerful way to combine human workflows with AI intelligence, all while remaining fully aligned with enterprise automation patterns and governance.